Cracking the Voynich Code 2015 - Final Report

Acknowledgements

The project team would like to extend its deepest gratitude to its supervisor, Prof. Derek Abbott, and co-supervisors, Dr. Brian Ng and Maryam Ebrahimpour, for their continual support and guidance throughout the research project. Their advice helped drive the project forward and allowed for basic investigations into a very interesting topic.

Abstract

The Voynich Manuscript is a 15th century document that is written in an unknown language or cipher, or may be a hoax. This report presents the ideas and results of an investigation into possible linguistic properties of the Voynich Manuscript, with the intent of determining possible relationships with known languages. This is performed through basic data-mining and statistical methods. The report reviews previous research carried out by other researchers. The final method is given and the results obtained by the project team are detailed and evaluated. The project management is also briefly outlined.

Introduction

Background

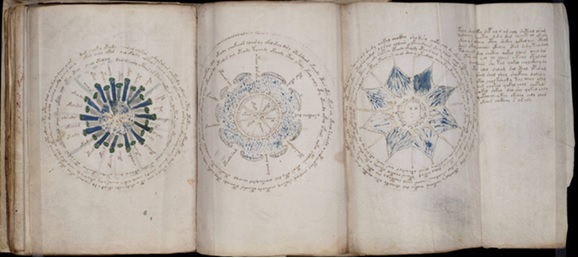

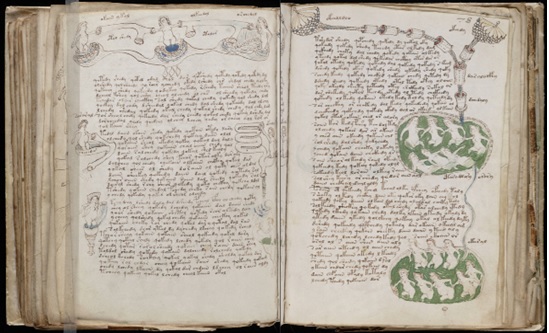

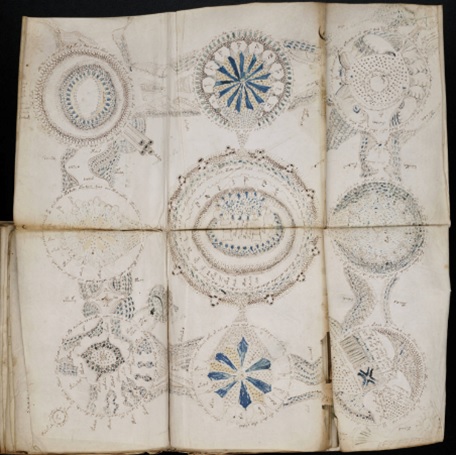

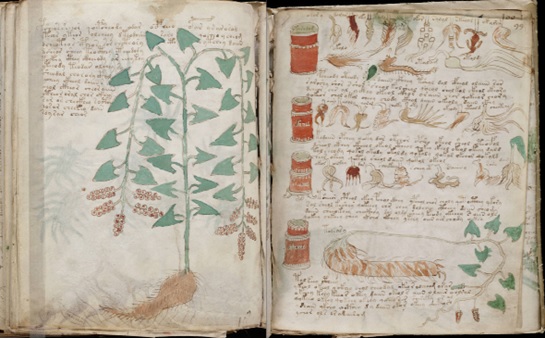

The Voynich Manuscript is a document written in an unknown script that has been carbon dated to the early 15th century [1] and is believed to have been created within Europe [2]. Named after Wilfrid Voynich, who purchased the folio in 1912, the manuscript has become a well-known mystery within linguistics and cryptology. It is divided into several sections based on the nature of the drawings [3]. These sections are:

- Herbal

- Astronomical

- Biological

- Cosmological

- Pharmaceutical

- Recipes

The folio numbers and examples of each section are outlined in Appendix A.2. In general, theories about the Voynich Manuscript fall into three hypotheses [4]. These are as follows:

- Cipher Text: The text is encrypted.

- Plain Text: The text is in a plain, natural language that is currently unidentified.

- Hoax: The text has no meaningful information.

Note that the manuscript may fall into more than one of these hypotheses [4]. It may be that the manuscript is written through steganography, the concealing of the true meaning within the possibly meaningless text.

Aim

The aim of the research project is to determine possible features and relationships of the Voynich Manuscript through the analyses of basic linguistic features and to gain knowledge of these linguistic features. These features can be used to aid in the future investigation of unknown languages and linguistics.

The project does not aim to fully decode or understand the Voynich Manuscript itself. This outcome would be beyond excellent but is unreasonable to expect in a single year project from a small team of student engineers with very little initial knowledge on linguistics.

Motivation

The project attempts to find relationships and patterns within unknown text through the use of basic linguistic properties and analyses. The Voynich Manuscript is a prime candidate for analysis as there are no accepted translations of any part of the document. The relationships found can be used to help narrow future research and to draw conclusions on specific features of the unknown language within the Voynich Manuscript.

Knowledge produced from the relationships and patterns of languages and linguistics can be used to further the current linguistic computation and encryption/decryption technologies of today [5].

While some may question why an unknown text is of any importance to engineering, a more general view of the research project shows that it deals with data acquisition and analysis. This is integral to a wide array of businesses, including engineering, and can range from a basic service, such as survey analysis, to more complex automated systems.

Significance

There are many computational linguistic and encryption/decryption technologies that are in use today. As mentioned in Section 1.3, knowledge produced from this research can help advance these technologies in a range of different applications [5]. These include, but are not limited to, information retrieval systems, search engines, machine translators, automatic summarizers, and social networks [5].

Particular technologies that are widely used today and that can benefit from the research include:

- Turn-It-In (Authorship/Plagiarism Detection)

- Google (Search Engines)

- Google Translate (Machine Runnable Language Translations)

Technical Background

The vast majority of the project relies on a technique known as data mining. Data mining is the process of taking and analysing a large data set in order to uncover particular patterns and correlations within said data thus creating useful knowledge [6]. In terms of the project, data shall be acquired from the Interlinear Archive, a digital archive of transcriptions from the Voynich Manuscript, and other sources of digital texts in known languages. Data mined from the Interlinear Archive will be tested and analysed for specific linguistic properties using varying basic statistical methods.

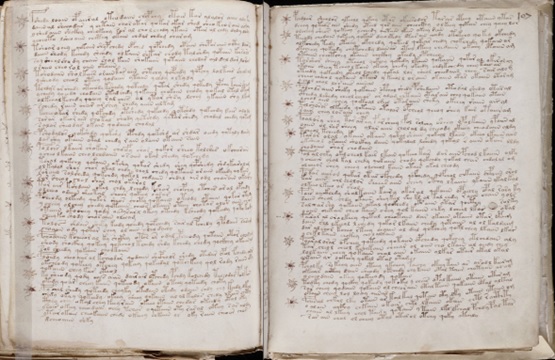

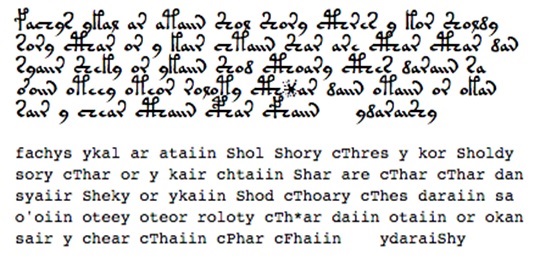

The Interlinear Archive, as mentioned, will be the main source of data on the Voynich Manuscript. It has been compiled as a machine-readable version of the Voynich Manuscript based on transcriptions from various transcribers. Each transcription has been converted into the European Voynich Alphabet (EVA). An example of the archive in EVA and the corresponding text within the Voynich Manuscript can be seen in Appendix A.3. The EVA itself can be seen in Appendix A.4.

Technical Challenges

Due to the difficulty of transcribing a hand-written 15th century document, no transcription within the Archive is complete, nor do the transcriptions all agree with each other. Many tokens within the Voynich Manuscript have been read as a different token, or even as multiple tokens, by different transcribers. Spacing between word tokens has also been a key ambiguity, as one transcription may consider one word token to be multiple word tokens or vice-versa. It is also believed that the manuscript is missing 14 pages [7]. These uncertainties will make it difficult to draw firm conclusions from any linguistic analyses.

The statistical methods relating to linguistics are numerous, leading to many different possible approaches that can be used upon the Voynich Manuscript. However, many of the more intricate techniques require some knowledge of the language itself. This limits the possible linguistic analysis techniques that can be used. Despite previous research on the Voynich Manuscript, no current conclusion has been widely accepted [3]. For this reason, the research will be focused on the basics of linguistics.

Requirements

It is not expected that the project fully decodes, or even partially decodes, the Voynich Manuscript. Nonetheless the project must show the following:

- A logical approach to investigating the Voynich Manuscript

- Critical evaluation of any and all results

- Testing on all code

- Hypotheses based on results

Literature Review

Over the years, the Voynich Manuscript has been investigated by numerous scholars and professionals. This has given rise to many possible hypotheses [4] through many different forms of analysis based on its linguistic properties [2]. These properties range from the character tokens to word tokens, to the syntax and pages themselves. The currently reviewed literature, which is of interest to the project, is summarized below.

A broad, albeit brief, summary of linguistic analyses that have been completed over the previous years is given by Reddy and Knight [2] and includes some of their own tests. They perform multiple analyses on the letter, the word, syntax, pages, and the manuscript itself while giving reference to other works on the same property. Their work on the letter and the word is of particular interest to this project. They suggest that vowels may not be represented within the Voynich Manuscript and that Abjad languages have the closest similarities [2]. These conclusions are drawn from two-state hidden Markov models and word length distributions respectively. Reddy and Knight also suggest that there are some particular structural similarities within the words when using a minimum description length based algorithm [2].

Gabriel Landini [3] looks into the statistical characteristics of the manuscript and natural languages. Characterising the text through Zipf’s Law and performing analysis on entropy and character token correlation, Landini suggests that there is some form of linguistic structure behind the Voynich Manuscript [3]. In particular, the work reveals long range correlation, a modal token length, and periodic structures within the text.

Andreas Schinner [4] takes a different approach in the paper “The Voynich Manuscript: Evidence of the Hoax Hypothesis”. Schinner performs a random walk model and tests token repetition distances through the Levenshtein distance metric. It is concluded that while the results seem to support the hoax hypothesis more so than the others, it cannot rule out any of them [4].

Diego R. Amancio, Eduardo G. Altmann, Diego Rybski, Osvaldo N. Oliveira Jr., and Luciano da F. Costa [5] investigate the statistical properties of unknown texts. They apply various techniques to the Voynich Manuscript looking at vocabulary size, distinct word frequency, selectivity of words, network characterization, and intermittency of words. Their techniques were aimed at determining useful statistical properties with no prior knowledge of the meaning of the text. Although not aimed specifically at deciphering the Voynich Manuscript, they do conclude that the Voynich Manuscript is compatible with natural languages [5].

Jorge Stolfi’s website [8] gave multiple views and analyses of the Voynich Manuscript. Stolfi’s work on word length distributions and morphological structure [8] is of particular interest to the project. He displays a remarkable similarity in word length distributions between the Voynich Manuscript and East Asian languages [8]. He also shows evidence of morphological structure, displaying a prefix-midfix-suffix structure [9] and later a crust-mantle-core paradigm [10].

Research on the Voynich Manuscript has also been carried out at the University of Adelaide; this is the second year that this project has been undertaken by students. Bryce Shi and Peter Roush provide a report on their results [11]. They carry out a multitude of tests on the Voynich Manuscript including:

- Zipf’s Law

- Word Length Distribution

- Word and Picture Association

- Word Recurrence Intervals

- Entropy

- N-Grams

- Punctuation

- Authorship

Shi and Roush give short conclusions to each of these tests but realise that further research is required for any to be considered conclusive [11].

English Investigation Literature Review

Previous research did not reveal any methods used to categorise English characters as either alphabet or non-alphabet tokens. However many papers did reveal possible statistics that could be used to perform said categorisation and also highlighted possible difficulties.

Solso and Juel [12] provided a count of bigram frequencies and suggest that they may be useful in the assessment of the regularity of any word, non-word, or letter identification. Unfortunately the paper is very outdated and what they consider as comprehensive is now far below what is possible using computational methods available today. It does however show that letter identification may be possible using bigrams.

Jones and Mewhort [13] investigated the uppercase and lowercase letter frequencies and the non-alphabet characters of English over a very large (~183 million word) corpus. They find that the relative frequencies of lowercase characters are not equivalent to those of the corresponding uppercase characters, noting a low mean correlation between upper and lower case characters. Their non-alphabet character results show that particular non-alphabet characters have much larger frequencies than some regular alphabet characters, but they also note that these frequencies can vary widely. Non-alphabet characters are generally found as a successor to an alphabet character, but on rare occasions a non-alphabet character that regularly appears as a successor to an alphabet character may appear before one. It is concluded that different writing styles can affect the statistics of bigram frequencies and that both letter and bigram frequencies can have an effect on corresponding analyses.

Church and Gale [14] investigate different methods of determining the probabilities of word bigrams, initially considering a basic maximum likelihood estimator. This gives the probability of an n-gram by counting the frequency of each n-gram and dividing it by the size of the sample. Unfortunately this estimate is highly dependent on the sample, but they also state that these bigram frequencies could be used for the disambiguation of the output of a character recognizer. They therefore investigate two other methods, the Good-Turing and deleted estimation methods, and compare them with the results obtained from the maximum likelihood estimator over a large corpus of 44 million words. The results show that these different methods for determining probability provide possible strengths over basic methods, but they note that their corpus may not be a balanced sample of English. They also state that the writing style of the texts can affect the results, so particular care must be taken when selecting text for a corpus.

In terms of the Voynich Manuscript, Reddy and Knight [2] use an unsupervised algorithm, Linguistica, which returns two characters, K and L, as possible non-alphabet characters. The algorithm shows that these character tokens seem to appear only at the end of words; however, the removal of these character tokens results in new words. Under a traditional definition of punctuation, namely that punctuation occurs only at word edges, the removal of these character tokens should result in words already found within the Voynich. They therefore suggest that there is most likely no punctuation in the Voynich.

Morphology Literature Review

Over the past years, many researchers have examined techniques for extracting different forms of linguistic morphology from various languages [15]. Both unsupervised and supervised techniques have been used. Hammarström [16] presents a particularly simple unsupervised algorithm for the extraction of salient affixes from an unlabelled corpus of a language. This is of particular interest as the Voynich Manuscript does not have any universally accepted morphological structure [2]. Hammarström’s algorithm assumes that salient affixes have to be frequent and that words are simply variable length sequences of characters. This is a naïve approach to the complex nature of morphology: it restricts itself to concatenative morphology, and salient affixes do not necessarily need to be frequent [16]. His results show that the output includes many affixes that would be considered junk affixes, where a junk affix is defined as a sequence of characters that, once affixed to a word, does not change the word in any meaningful way. He states that his results can only give guideline experimental data and found that writing style, even within the same language, could give significant differences. More informed segmentation and peeling of affix layers was beyond the scope of the paper.

Aronoff and Fudeman [17] provide the basics behind morphology and morphological analysis. In particular, they give two basic, complementary approaches through analysis and synthesis. The analytic approach is of interest to this project as it deals with breaking words down into their smallest 'meaningful' sections. They also provide four basic analytic principles used in morphology to aid anyone that attempts to undertake any morphological analysis. Note that Aronoff and Fudeman also highlight potential issues with morphological structure when comparing between different languages, showing direct comparisons between English and various other languages.

Durrett and DeNero [18] introduce a supervised approach to predicting the base forms of words, particularly those base forms within morphologically rich languages. Using a data-driven approach, Durrett and DeNero develop software that learns transformation rules from inflection table data. The rules are then applied to a data set to extract the morphological data. While this method can be extended to any language, it unfortunately requires a substantial number of example inflection tables [18], making it unsuitable for use on the Voynich Manuscript. However, the method may prove useful if performing tests on the English language.

Trost [19] delves into computational morphology, providing the fundamentals behind linguistics, real-world applications, and various forms of computational morphology analysis. The two-level rules for finite-state morphology outlined by Trost are of concern to this project as they show that an affix may change the structure of the word. Trost gives small examples of English plurals. Due to the unknown nature of the Voynich Manuscript, any morphological analysis will need to take the possibility of these small structural changes into account.

Goldsmith [20] reports on the use of a minimum description length model to analyse the morphological structure of various European languages. He attempts to separate a word into successive morphemes, where possible, corresponding to more traditional linguistic analysis [20]. Goldsmith outlines the minimum description length approach in great detail, along with various other approaches that have been attempted by previous researchers. The results obtained are good but not perfect, and he notes that a number of issues are still present within his approach and the various other approaches. He concludes that his algorithm is very likely different from that of a human language learner and that the determination of morphology is a complex task.

Eryiğit and Adalı [21] offer two different approaches by using a large Turkish lexicon. One approach was to initially determine the root words which allows for these to be stripped from other words, leaving the possible affixes. The other approach used the reverse order by initially determining the affixes which could then be stripped from the words leaving only root words. Both approaches used rule-based finite state machines as Turkish is a fully concatenative language that only contains suffixes [21]. This approach would not work with the Voynich Manuscript as there is no known lexicon that can be used with the Voynich. However the paper does give evidence on how rule-based approaches can be utilised to determine morphological structure.

Minnen, Carroll and Pearce [22] show a method for analysing the inflectional morphology within English. This did not use any explicit lexicon or word-base but did require knowledge of the English language, as it used a set of morphological generalisations and a list of exceptions to these. This method is available as software modules which could be used in future experiments to compare with other possible methods to determine inflectional morphological structure.

Snover and Brent [23] present an unsupervised system for the extraction of stems and suffixes with no prior knowledge of the language. The system is designed to be entirely probabilistic and attempts to identify the final stems and suffixes for a given list of words. They state that the results and analysis are conservative, showing only a number of possible suffixes, but that the system consequently appears to be more precise than other morphology extraction algorithms. However, this system requires a large corpus to determine a list of common words to use. In particular, when testing English, Snover and Brent use the Hansard corpus, which contains approximately 1.6 billion words. Other tests show that it has particular issues with languages that use more complex morphology.

Another paper shows extraction of morphology through an extension of Morfessor Baseline, a tool for unsupervised morphological segmentation. Kohonen, Virpioja and Lagus state that the number of unique words formed through morphology can be very large in a given corpus [15]. They show that by adding labelled data, which is data whose morphological category is known, to unlabelled data, the results of the extraction significantly improve. However, this means that knowledge of the language is required to provide such labelled data. They note that by using labelled data they can bias the system to a particular language or task and that it is difficult to avoid biasing across different languages. The morphemes themselves may be higher or lower depending on the language.

Morphology tests and experiments have also been carried out previously on the Voynich Manuscript. Several hypotheses of the basic structure have been given [2]. These include:

- Roots and Suffixes model

- Prefix-Stem-Suffix model

- Crust-Mantle-Core model

Reddy and Knight [2] perform a test on the Voynich Manuscript by running Linguistica, an unsupervised morphological segmentation algorithm, to segment the words into possible prefixes, stems and suffixes. They conclude that the results suggest there is some form of morphological structure within the Voynich Manuscript.

Jorge Stolfi’s website “Voynich Manuscript stuff” [8] gave multiple views and analyses of the morphological structure within the Voynich Manuscript. He shows evidence of a possible prefix-midfix-suffix structure [9], and later displays a crust-mantle-core paradigm [10].

Collocation Literature Review

Collocation statistics have been found to be domain and language dependent [24]. Therefore texts within a corpus should be of the same domain to be able to compare results between languages. Even then, the statistics will not necessarily be the same, as the recurrent property of words is typical to different types of languages [25]. This makes collocations difficult to translate across languages, but word association metrics may show whether texts in a similar domain have any relationship across different languages.

There are multiple different types of collocations which range from basic phrases to strict word-pair collocations [25] such as the collocation defined within this investigation. The word association metrics can also vary significantly and have a range of different statistical methods to assign a metric [24]. These include but are not limited to:

- T-Score

- Pearson’s Chi-Square Test

- Log-Likelihood Ratio

- Pointwise Mutual Information (PMI)

Thanopoulos, Fakotakis and Kokkinakis [24] compare these various word association metrics, defining their collocations as strict word-pairs. Their results show that the values of the metrics can vary significantly and that different association metrics will rank the same collocations in different orders. However, despite these differences, the resulting curves from the metrics are generally quite similar.

Wermter and Hahn [26] also investigate different word association metrics while making comparisons to a simple frequency based metric. While it is generally assumed that using a statistical association measure will produce more viable results [26], Wermter and Hahn argue that this type of association may not necessarily produce better results than a simple frequency association if not including additional linguistic knowledge. Like Thanopoulos, Fakotakis and Kokkinakis [24], Wermter and Hahn also show that using different metrics can return similar output assuming the metric ranks the most-likely collocations at the higher ranks while non-collocations are ranked last.

Pearce [27] states that with no widely accepted definition of the exact nature of linguistic collocations there is a lack of any consistent evaluation methodology. Many proposed computer based collocation definitions are based around the use of N-Gram statistics. An issue with this is that a dependency in a collocation may span many words; he gives an example from French in which a collocation may span up to 30 words. He shows different methods of giving a metric for word association and states that pointwise mutual information has so far been widely used as a basis. It is also stated that, despite the lack of a universally accepted definition for a collocation, comparative evaluation is still useful.

Reddy and Knight [2] show summarized information on the word correlations of the Voynich. In particular they show that the word association of word-pairs within the Voynich at varying distances does not show any significant long-distance correlations and suggest that this may arise from scrambling of the text, generation from a unigram model, or the interleaving of words.

Shi and Roush [11] of the previous final year project group also carry out a collocation investigation using word-pairs and again found that the Voynich displayed a weak word association measure when compared to other languages. They suggest this could indicate that the manuscript is a hoax or some type of code, further stating that ciphers are designed to have weak word order.

Method Overview

The methods used during the project are outlined here. They are split into different phases, where each phase considers a specific linguistic feature or property and attempts to relate it to the Voynich Manuscript while building on what was learned in the previous phase(s). Many techniques may replicate previous research outlined in Section 3. The results within those documents will be used to compare and complement results where possible.

All phases will be coded and will therefore include testing as all code must be verified for results to be considered accurate.

Completion of each phase is considered a milestone.

Phase 1 - Characterization of the Text

Characterization of the text involves determining the first-order statistics of the Voynich Manuscript. This first involves pre-processing the Interlinear Archive into a simpler machine-readable format.

The pre-processed files are then characterized through MATLAB code by finding and determining:

- Unique word tokens (Vocabulary Size)

- Unique character tokens (Alphabet Size)

- Total word tokens

- Total character tokens

- Frequency of word tokens

- Frequency of character tokens

- Word token length frequency

- Character tokens that only appear at the start, end, or middle of word tokens

A 'unique' token is considered a token that is different than any of the other tokens. In terms of character tokens, difference is attributed to the token itself being visually (machine-readable) different than another. In terms of word tokens, difference is attributed to the structure of the word.
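The first-order statistics listed above can be illustrated with a short sketch. This is not the project's MATLAB code; it is a minimal Python illustration that assumes the text has already been pre-processed into a list of word tokens. The function name `characterize` and the sample words are hypothetical.

```python
from collections import Counter


def characterize(words):
    """Return first-order statistics for a list of word tokens."""
    word_freq = Counter(words)
    char_freq = Counter(ch for w in words for ch in w)
    length_freq = Counter(len(w) for w in words)

    # Record the kinds of position (start, middle, end) each character occurs in.
    positions = {}
    for w in words:
        for i, ch in enumerate(w):
            seen = positions.setdefault(ch, set())
            if i == 0:
                seen.add("start")
            if i == len(w) - 1:
                seen.add("end")
            if 0 < i < len(w) - 1:
                seen.add("middle")
    # Characters that were seen in exactly one kind of position.
    exclusive = {ch: next(iter(p)) for ch, p in positions.items() if len(p) == 1}

    return {
        "vocabulary_size": len(word_freq),       # unique word tokens
        "alphabet_size": len(char_freq),         # unique character tokens
        "total_words": sum(word_freq.values()),
        "total_chars": sum(char_freq.values()),
        "word_freq": word_freq,
        "char_freq": char_freq,
        "length_freq": length_freq,
        "exclusive_position": exclusive,
    }


# Hypothetical sample of EVA-like word tokens.
sample = "daiin chol daiin shol chor".split()
stats = characterize(sample)
```

Running the same function over each translation of a comparison text would give directly comparable figures, which is the kind of comparison described in the next paragraph.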

The resulting statistics are then compared with other known languages by running the same code on the various translations of the Universal Declaration of Human Rights. Unfortunately the Universal Declaration of Human Rights is, by comparison, a small document, which will limit results.

Phase 2 - English Investigation

The English investigation looks into the elementary structure of English text. It specifically examines the representation of the English alphabet and how the alphabetical tokens can be extracted from an English text using statistics. This is done to gain a better understanding of how character tokens are used within text and how data and statistics relating to these character tokens can be used to characterize each token.

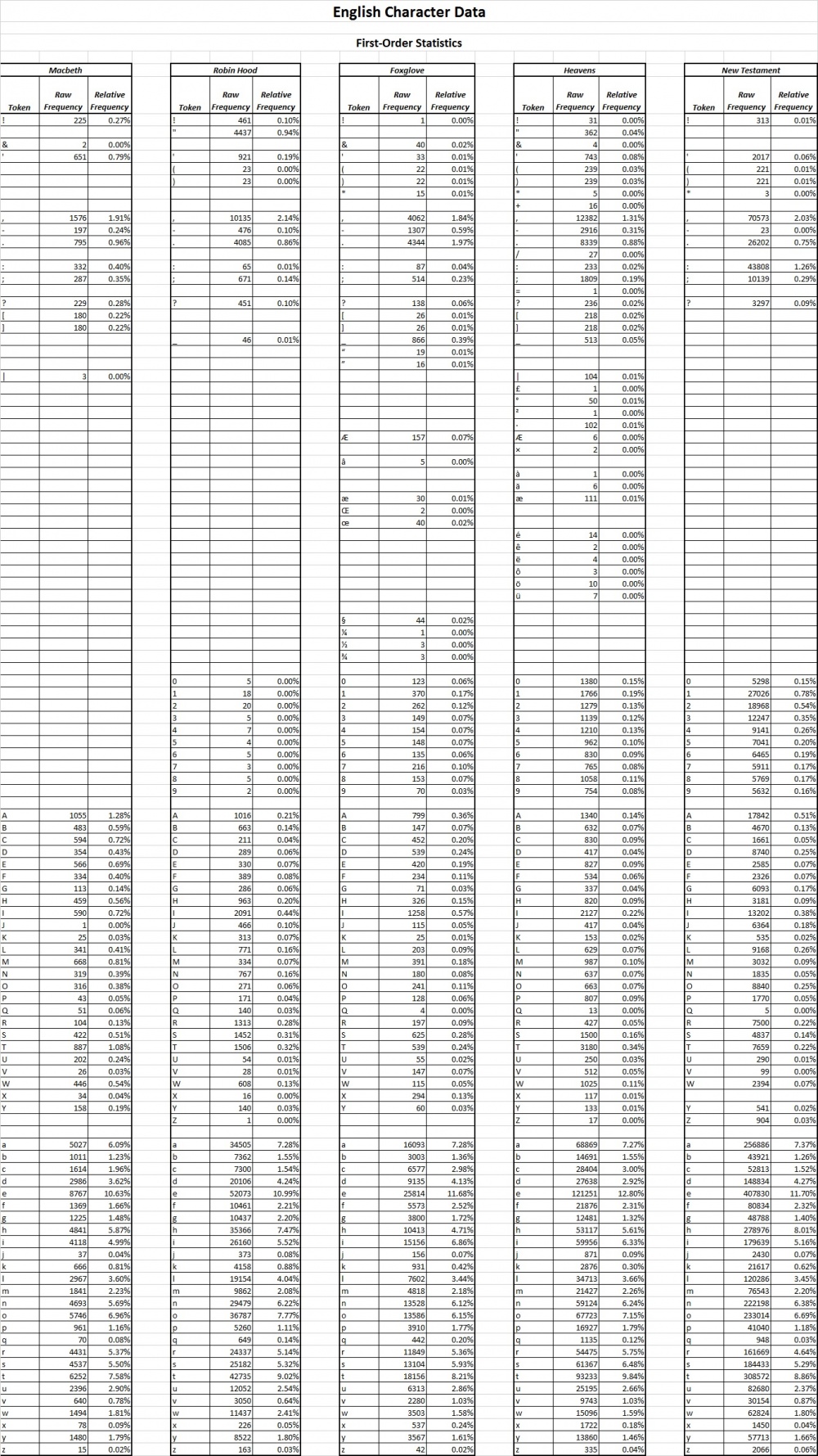

Initially, a corpus of English texts (see Appendix A.9) shall be passed through the characterization code of phase 1 to determine the first-order statistics of each text. These will be compared to grasp a basic understanding of how each of the tokens can be statistically represented and how these statistics differ between texts. These tokens include alphabetical, numerical, and punctuation tokens.

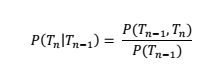

The characterization code will then be expanded upon to include character token bigrams to further define the differences between character tokens. Bigrams give the conditional probability, P, of a token, Tn, given the preceding token, Tn-1. This is given in the following formula:

$$P(T_n \mid T_{n-1}) = \frac{P(T_{n-1}, T_n)}{P(T_{n-1})}$$

It is expected that the probabilities of the different tokens, along with the first-order statistics obtained through the phase 1 code, will show definitive differences between alphabetical, numerical, and punctuation tokens.
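As an illustration of the bigram conditional probability described above, the following Python sketch estimates the probability of a character token given the preceding token by dividing bigram counts by the count of the preceding token. It is a minimal sketch under that count-based assumption, not the project's own code, and the function name is hypothetical.

```python
from collections import Counter


def bigram_conditional_probabilities(text):
    """Estimate P(T_n | T_{n-1}) for adjacent character tokens in text."""
    pair_counts = Counter(zip(text, text[1:]))
    # Count each token only where it has a successor, so that the
    # probabilities conditioned on any given token sum to one.
    first_counts = Counter(text[:-1])
    return {
        (prev, cur): count / first_counts[prev]
        for (prev, cur), count in pair_counts.items()
    }


probs = bigram_conditional_probabilities("the cat, the hat.")
```

In this small sample, the comma is always followed by a space while "t" is followed by several different tokens, which hints at the kind of difference between punctuation and alphabetical tokens that the investigation looks for.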

Code will be written that takes these statistical findings into account to attempt to extract the English alphabet from any given English text with no prior knowledge of English itself. This will be used to examine the Voynich Manuscript to search for any character token relationships.

Phase 3 - Morphology Investigation

Linguistic morphology, broadly speaking, deals with the study of the internal structure of the words, particularly the meaningful segments that make up a word [28]. Specifically, phase 3 will be looking into the possibility of affixes within the Voynich Manuscript from a strictly concatenative perspective.

Previous research has found the possibility of morphological structure within the Voynich Manuscript [2]. Within this small experiment, the most common affixes in English are found and compared with those found within the Voynich Manuscript. Due to the unknown word structure and small relative size of the Voynich, this experiment defines an affix as a sequence of characters that appear at the word edges. Using this basic affix definition, a simple ranking of the affixes of various lengths could reveal potential relationships between the Voynich and other known languages.

The basis of the code extracts and counts each possible character sequence within a word for a specific character sequence length. These are then ranked, according to frequency, and compared with all languages tested. By comparing the relative frequencies and their difference ratios, we are able to find if any of the tested languages share a possible relationship with the Voynich Manuscript.
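The edge-sequence counting described above can be sketched as follows. This is a minimal Python illustration rather than the project's MATLAB code; the function name `rank_affixes` is hypothetical, and skipping words that are no longer than the requested length is an assumption made here, not something stated in the method.

```python
from collections import Counter


def rank_affixes(words, length, edge="suffix"):
    """Rank character sequences of a given length found at word edges."""
    counts = Counter()
    for w in words:
        if len(w) <= length:
            continue  # assumption: a candidate affix must be shorter than the word
        candidate = w[:length] if edge == "prefix" else w[-length:]
        counts[candidate] += 1
    total = sum(counts.values())
    # Relative frequency of each candidate, most frequent first.
    return [(affix, n / total) for affix, n in counts.most_common()]


english = ["walking", "talking", "jumped", "walked", "sing"]
suffixes = rank_affixes(english, 3)
prefixes = rank_affixes(english, 2, edge="prefix")
```

The ranked relative frequencies from two texts can then be compared position by position, which is one simple way of forming the difference ratios mentioned above.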

Results found here assume concatenative affixes within the Voynich Manuscript and the other languages. As shown in many languages, morphology is full of ambiguities that depend on the language. As such, the results here can only give very baseline experimental data and will require further research.

Code is written for MATLAB, which will allow for use on the Interlinear Archive. The code will also be used on English texts and texts in other languages to provide a quantitative comparison of the differing languages.

Phase 4 - Illustration Investigation

The illustration investigation looks into the illustrations contained in the Voynich Manuscript. It will examine the possible relation between texts, words and illustrations. The different sections in the Voynich Manuscript are based on the drawings and illustrations on the pages. Almost all the sections consist of text with illustrations, except the recipes section.

In Phase 4, the code will provide the following functions:

- Find the unique word tokens in each page and section

- Determine the location of a given word token

- Determine the frequency of a given word token

- Compare two given texts, finding the common words and the frequency of each common word in the texts.
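The four functions listed above could look roughly like the following Python sketch. It assumes that each page is available as a list of word tokens keyed by a page label, and it interprets "unique word tokens" as the distinct tokens on a page, in line with the Phase 1 definition. All function names and the sample data are hypothetical.

```python
from collections import Counter


def vocabulary_by_page(pages):
    """Map each page label to its set of distinct word tokens."""
    return {label: set(words) for label, words in pages.items()}


def locate(pages, token):
    """Return (page label, word index) for every occurrence of token."""
    return [
        (label, i)
        for label, words in pages.items()
        for i, w in enumerate(words)
        if w == token
    ]


def frequency(pages, token):
    """Count occurrences of token across all pages."""
    return sum(words.count(token) for words in pages.values())


def common_words(text_a, text_b):
    """Return {word: (count in a, count in b)} for words found in both texts."""
    a, b = Counter(text_a), Counter(text_b)
    return {w: (a[w], b[w]) for w in a.keys() & b.keys()}


# Hypothetical sample data: two pages of EVA-like word tokens.
pages = {"f1r": "daiin chol daiin".split(), "f1v": "chol shol".split()}
shared = common_words(pages["f1r"], pages["f1v"])
```

Grouping page labels by section before calling these functions would give the per-section view, which matters if sections are to be investigated separately.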

The resulting statistics from the code can then be used in the investigation. However, it should be noted that the manuscript may have been written by multiple authors and in multiple languages [29]. Sections of the manuscript will need to be investigated separately, particularly those written in different languages, along with the manuscript as a whole.

Phase 5 - Collocation Investigation

Collocations have no universally accepted formal definition but deals with the words within a language that co-occur more often than would be expected by chance. Natural languages are full of collocations and can vary significantly depending on the metric, such as length or pattern, used to define a collocation.

In this research experiment, a collocation is defined as two words occurring directly next to each other. As collocations have varying significance within different languages, extracting and comparing all possible collocations within the Voynich Manuscript and the corpus could reveal a relationship based on word association, or provide evidence for the possibility of a hoax.

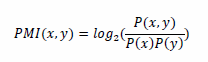

Initially, collocations are ranked by frequency, from most frequent to least frequent, and then by a Pointwise Mutual Information (PMI) metric. PMI is a widely accepted method to quantify the strength of word association and is mathematically defined as:

$$\mathrm{PMI}(x, y) = \log \frac{P(x, y)}{P(x)\,P(y)}$$

where P(x,y) is the probability of the two words appearing together in the text and P(x) and P(y) are the probabilities of each word appearing within the text.

Implementation and Testing

As described earlier, the vast majority of the project uses data mining. This is done through self-developed software, written mainly in C++ and MATLAB, for each phase. To ensure the integrity of any data and results, the code is verified through testing. The implementation and testing procedures of the software for each phase are briefly detailed below.

Phase 1 - Characterization of the Text

Pre-Processing of the Text

The Interlinear Archive is written in such a way that each page stored within the archive contains the transcriptions of that page by the various transcribers. Each transcription can be identified through an identifier at the start of each line (as can be seen in Appendix A.5). To simplify the processing required for the characterization of the text, and for the other phases to be investigated, the pre-processing software re-organises the Interlinear Archive's transcriptions by transcriber.

The software code also removes, or replaces, any unnecessary formatting. This includes the transcriber identifiers, spacing labels, and any in-line comments. Depending on what character tokens are to be investigated, the software can either keep or remove any extended EVA characters found.

Implementation

Implementation of the pre-processing software code required an understanding of the layout and formatting used within the Interlinear Archive. This is detailed in the initial pages of the archive but a brief summary can be found in Appendix A.5.

Using these details, software was written to read each page of the Interlinear Archive, searching for each specific transcriber through the identifier tags. Upon finding a transcribed line by the specified transcriber the software replicates the page file and the lines within a separate folder designated for the transcriber. This process is repeated until the entirety of the Interlinear Archive has been read.

A secondary software module is then run to remove, or replace, the unnecessary formatting by reading the pages contained within the now separated and re-organised version of the archive.
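The two pre-processing steps described above can be sketched as follows. The project's own code was written in C++ and MATLAB and is not reproduced in this report, so this is only a minimal Python illustration. The line layout (`<locus;transcriber>` followed by the text), the `{...}` comment syntax, and the filler characters are assumptions made for the sketch; the authoritative description is in the archive itself and Appendix A.5. The function names are hypothetical.

```python
import re
from collections import defaultdict

# ASSUMED line layout (a simplification, not taken from the report):
#   <f1r.P1.1;H>   fachys.ykal{comment}.ar.ataiin!!-
# i.e. a locus, a ';', a one-letter transcriber code, then the text.
LINE_RE = re.compile(r"^<(?P<locus>[^;>]+);(?P<who>\w)>\s*(?P<text>.*)$")

def split_by_transcriber(lines):
    """Group transcribed lines by transcriber code, keeping the locus."""
    by_who = defaultdict(list)
    for line in lines:
        m = LINE_RE.match(line.strip())
        if m:  # lines without an identifier tag are skipped
            by_who[m.group("who")].append((m.group("locus"), m.group("text")))
    return dict(by_who)

def clean_text(text):
    """Strip in-line comments and filler, then return the word tokens."""
    text = re.sub(r"\{[^}]*\}", "", text)  # assumed in-line comment syntax
    text = re.sub(r"[!%\-=]", "", text)    # assumed filler and line-end marks
    text = text.replace(",", ".")          # assumed: ',' marks an uncertain space
    return [w for w in text.split(".") if w]

sample = [
    "<f1r.P1.1;H>  fachys.ykal{note}.ar.ataiin!!-",
    "<f1r.P1.1;T>  fachys.ykal.ar.ataiin=",
    "# a free-standing comment line",
]
pages = split_by_transcriber(sample)
words_h = clean_text(pages["H"][0][1])
```

Keeping the split and the clean-up as separate functions mirrors the two-module structure described above, so each can be checked by hand on a short test sentence.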

Testing

Initial testing of the software was carried out on small English sentences. Each sentence tested a specific feature of the layout and formatting used within the Interlinear Archive, before a final sentence combined all of these features. Once these tests had been passed, the pre-processing software was run on specified pages of the Interlinear Archive. These pages were also re-formatted by hand and cross-checked with the output.

This was to ensure that the software could identify a transcriber and perform any necessary reformatting within the text.

Characterization of the Text

The software code for the characterization of the text is the main objective of Phase 1. It tabulates all relevant first-order statistics of the Voynich Manuscript as detailed in the Proposed Method. As pre-processing of the Interlinear Archive is completed first, the characterization software was developed to be simple and assumes that pre-processing has occurred.

Implementation

Initially, a specific transcriber is chosen based on the completeness of the transcription. The pages transcribed by this transcriber are then read by the software, which stores all the relevant first-order statistics. These are summarised in an output text file.
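As an illustration of the first-order statistics involved, the following is a minimal Python sketch, not the project's own C++/MATLAB code. It assumes the text has already been pre-processed into a list of word tokens; the function name and dictionary keys are hypothetical.

```python
from collections import Counter

def first_order_stats(words):
    """Tabulate basic statistics for a list of word tokens."""
    word_freq = Counter(words)
    char_freq = Counter(ch for w in words for ch in w)
    return {
        "total_word_tokens": len(words),
        "vocabulary_size": len(word_freq),
        "total_char_tokens": sum(char_freq.values()),
        "alphabet_size": len(char_freq),
        "longest_word_length": max((len(w) for w in words), default=0),
        "word_length_distribution": Counter(len(w) for w in words),
        "word_frequency": word_freq,
    }

# Small hand-countable sample, in the spirit of the testing described below.
stats = first_order_stats("daiin ol chedy daiin qokeedy ol daiin".split())
```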

Testing

As with the pre-processing software, the testing of the characterization software is initially completed using English. A small paragraph was written and hand-counted for the relevant data. The output of the characterization software was then compared with that which was hand-counted. This is again repeated with a section of the Interlinear Archive.

This was to ensure that the data received by the characterization software was correct.

Phase 2 - English Investigation

Character Token Bigrams

It was found that basic first-order statistics would not allow for the full extraction of the English Alphabet from a random text. The character token bigram software aimed at giving further data which would help with the extraction of the English Alphabet.

The software does not perform any formatting and does not distinguish any tokens aside from their 'uniqueness'.

Implementation

The software initially finds every unique character token within the given text and generates every possible bigram from that set of unique character tokens. It then reads the text counting every occurrence of a bigram and outputs the results to a text file.
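The bigram generation and counting step can be sketched as below. This is a minimal Python illustration rather than the project's code, and it assumes that bigrams are counted within whitespace-separated word tokens only; whether the original software counted across spaces is not stated. The function name is hypothetical.

```python
from itertools import product

def char_bigram_counts(text):
    """Count every possible character bigram, including those never seen."""
    words = text.split()                    # assumption: bigrams do not span spaces
    alphabet = sorted(set("".join(words)))  # every unique character token
    counts = {a + b: 0 for a, b in product(alphabet, repeat=2)}
    for w in words:
        for a, b in zip(w, w[1:]):
            counts[a + b] += 1
    return counts

# 'T' and 't' are kept distinct: tokens are told apart only by uniqueness.
bigrams = char_bigram_counts("the then The")
```

Generating the full table first, rather than only the bigrams that occur, keeps the zero-frequency bigrams visible, which matters later when a rule depends on a bigram never appearing.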

Testing

Testing of the character token bigram software was completed using the same English test paragraph used for the characterization software. The process described within the implementation for generating the bigrams was completed by hand and cross-checked with the output of the software.

This was to ensure that the software correctly generated the bigrams and made no distinctions between tokens. These distinctions included lower-case and upper-case alphabetical tokens, numerical tokens, and punctuation tokens.

English Alphabet Extraction

The English alphabet extraction software was the main objective of Phase 2. The software itself is developed to use the currently found statistics to extract the English alphabet from a random English text.

Implementation

Implementation of the English alphabet extraction software involved analysing the current data and building up from the basics in an attempt to characterize a list of unknown character tokens. This meant that the software would initially look at the first-order statistics and attempt to use those as a means of extracting possible alphabet tokens or non-alphabet tokens. Further data would then be included to help extract tokens that could not be characterized or to refine tokens that had been improperly characterized.

A second implementation is currently in development as it was found that the first implementation had a large error deviation when used to analyse different types of English text and when using small sample sizes. This second implementation will be generalising the bigram data further to decrease both the error rate and deviation.

Testing

Testing of the English alphabet extraction software is completed by inputting both texts that had previously been used to extract first-order and bigram data and texts that have not yet been analysed. This allows the team to check the error rate of the software and determine if its accuracy and precision are sufficient for use on the Voynich Manuscript.

Phase 3 - Morphology Investigation

Basic Morpheme Identification

The basic morpheme identification software reads the text from a chosen text file within a folder and extracts all the combinations, and corresponding frequencies, of sequences of characters of a specified length that occur at the start of a word (prefix) or end of a word (suffix). This only gives a very basic interpretation of possible affixes and does not attempt to determine what type of affix, if any, the extracted character sequence is.

Implementation

The current implementation reads a text file from a chosen folder, extracts all the combinations, and corresponding frequencies, of sequences of characters of a specified length, and outputs the data to a specified text file.
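A minimal Python sketch of this extraction is given below. It is an illustration only, not the project's MATLAB code, and it assumes that a candidate affix must be strictly shorter than the word it is taken from; the report does not state how words no longer than the chosen length are handled. The function name is hypothetical.

```python
from collections import Counter

def affix_counts(words, length):
    """Count leading and trailing character sequences of a fixed length."""
    prefixes, suffixes = Counter(), Counter()
    for w in words:
        if len(w) > length:  # assumption: an affix is shorter than its word
            prefixes[w[:length]] += 1
            suffixes[w[-length:]] += 1
    return prefixes, suffixes

prefixes, suffixes = affix_counts(["unhappy", "undo", "happy", "doing", "un"], 2)
ranked_prefixes = prefixes.most_common()  # ranked by frequency for comparison
```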

Testing

Testing of the basic morpheme identification software is done by first writing basic English text that includes possible examples that should be identified. The results are manually found and compared with the output of the software for verification.

Small sections of the Voynich Manuscript are also used. The same manual procedure is used to verify the software output for Voynichese text.

Phase 4 - Illustration Investigation

Search and Analysis Word Token

The software in Phase 4 is a search engine. Its function is to find the unique word tokens of the Voynich Manuscript in each section and each folio, and to compare the texts between folios. The software also allows the user to search for a given word token and find its location (section, folio, paragraph) and frequency. Again, the database for Phase 4 is Takahashi's transcription, and the word token being searched for is given in Takahashi's format.
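The search and comparison functions can be sketched as follows. This is a minimal Python illustration, not the project's MATLAB code. The data layout (folio name mapped to a list of paragraphs, each a list of word tokens) and the function names are assumptions made for the example.

```python
from collections import Counter

def locate(word, folios):
    """Return (folio, paragraph, position) for each occurrence of word."""
    return [(folio, p, i)
            for folio, paragraphs in folios.items()
            for p, para in enumerate(paragraphs, start=1)
            for i, w in enumerate(para, start=1)
            if w == word]

def common_words(folio_a, folio_b):
    """Words shared by two folios, with their frequency in each."""
    fa = Counter(w for para in folio_a for w in para)
    fb = Counter(w for para in folio_b for w in para)
    return {w: (fa[w], fb[w]) for w in fa.keys() & fb.keys()}

# Hypothetical data: folio name -> paragraphs -> word tokens.
folios = {
    "f1r": [["daiin", "ol"], ["chedy", "daiin"]],
    "f1v": [["ol", "shedy"]],
}
hits = locate("daiin", folios)       # the frequency is len(hits)
shared = common_words(folios["f1r"], folios["f1v"])
```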

Implementation

The first step of Phase 4 is to read through the original Voynich Manuscript and choose a folio that contains text with an illustration. All the word tokens occurring in the folio are recorded and then analysed using the MATLAB code from Phase 1 and Phase 4, recording the following features:

- Number of word tokens in the folio

- Number of unique word tokens in the folio

- Location of each word token in the folio

- Frequency of each of the folio’s word tokens in the whole Voynich Manuscript

- Location of each of the folio’s word tokens that also appears in other folios

When the statistical computations have finished, the folios in which the word tokens occur are compared to determine any similar and/or different illustrations in each of those folios, and the results are then analysed.

The second step is to count the basic features of the Voynich Manuscript by folio and section:

- Word tokens, number of word tokens, and most frequent word token in each folio and section.

- Unique word tokens, number of unique word tokens, and most frequent unique word token in each folio and section.

When the statistical computations have finished, the folios in which unique word tokens occur are compared by section to determine any similar and/or different illustrations in each of those folios, and the results are then analysed.

Testing

Some of the MATLAB code is the same as in Phase 1 and has already been tested. To test the Phase 4 MATLAB code, a word token is first chosen from the Phase 1 statistics; its frequency should be around 10 to 20 so that it is easy to check manually. The word token is then input to the MATLAB code, which returns each location of the word token in the Voynich Manuscript and its frequency. The result is compared with the Phase 1 statistics to see if they agree. The location results are also checked against the original Voynich Manuscript to see if the word token is in the right place.

Phase 5 - Collocation Investigation

Collocation Extraction and Word Association Metric Calculator

The MATLAB code used in the collocation investigation provides all the found collocations (strict word-pairs) within a given text and calculates the corresponding frequencies and pointwise mutual information metrics.

Implementation

The extraction section of the code initially tokenizes all of the words within a chosen text file. These words are then paired together in the order they are found to provide all the found collocations. During this time the code is determining all the relevant metrics (frequency, relative frequency, PMI) based on the total collocations found and the probability of each single word occurring.

The results are then output into a text file.
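The extraction and metric calculation can be sketched as below. This is a minimal Python illustration, not the project's MATLAB code. The logarithm base (2) and the choice to estimate the pair probability from the number of adjacent pairs and the single-word probabilities from the number of word tokens are assumptions; the report does not specify either. The function name is hypothetical.

```python
import math
from collections import Counter

def collocations(words):
    """Map each adjacent pair (x, y) to (frequency, relative frequency, PMI)."""
    pairs = Counter(zip(words, words[1:]))  # strict word-pairs, in text order
    singles = Counter(words)
    n_pairs = sum(pairs.values())
    n_words = len(words)
    result = {}
    for (x, y), f in pairs.items():
        p_xy = f / n_pairs
        p_x = singles[x] / n_words
        p_y = singles[y] / n_words
        pmi = math.log2(p_xy / (p_x * p_y))  # base 2 is an assumption
        result[(x, y)] = (f, p_xy, pmi)
    return result

table = collocations("a b a b c".split())
by_frequency = sorted(table.items(), key=lambda kv: kv[1][0], reverse=True)
```

Because PMI divides by the product of the single-word probabilities, pairs made of rare words can score highly even when seen only once, which is one reason to rank by frequency first and treat PMI as a secondary measure.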

Testing

Testing of the collocation and association metric software is done by first writing basic English text which is then processed through the software. The results are manually found and compared with the output of the software for verification.

Phase Results and Discussions

Phase 1: Choice of Transcription and Characterisation of the Text

Introduction

Statistical characterisation of text can be handled through multiple different methods [30]. Characterisation of the Voynich Manuscript was handled through the identification of the basic statistics within the text. These included:

- Total Word Token Count

- Vocabulary Size

- Word Length Distribution

- Total Character Token Count

- Alphabet Size

- Longest Word Token

- Word Frequency Distribution

These statistics were used to examine the general size of the alphabet and words, and to determine if the data followed Zipf’s Law.
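One simple way to check a word frequency distribution against Zipf's Law is to fit a straight line to log-frequency against log-rank and see whether the slope is close to -1. The sketch below is a hypothetical Python illustration of that idea, not the method used by the project team; the function name is an assumption, and the input needs at least two distinct words.

```python
import math
from collections import Counter

def zipf_slope(words):
    """Least-squares slope of log(frequency) against log(rank)."""
    freqs = sorted(Counter(words).values(), reverse=True)
    xs = [math.log(r) for r in range(1, len(freqs) + 1)]
    ys = [math.log(f) for f in freqs]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx

# Synthetic check: frequencies 60, 30, 20, 15 are exactly 60 / rank.
words = ["w1"] * 60 + ["w2"] * 30 + ["w3"] * 20 + ["w4"] * 15
slope = zipf_slope(words)
```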

The various translations of the UDHR were also used to compare the word length distributions of other known languages to that of the Voynich.

Results

The project team began research by pre-processing the Interlinear Archive into separate simple files containing the transcriptions of each unique transcriber. All unnecessary data, such as comments, were removed from each of these transcriptions. In-line formatting was also converted to follow a simpler, machine-readable standard (see Appendix A.5 for an example).

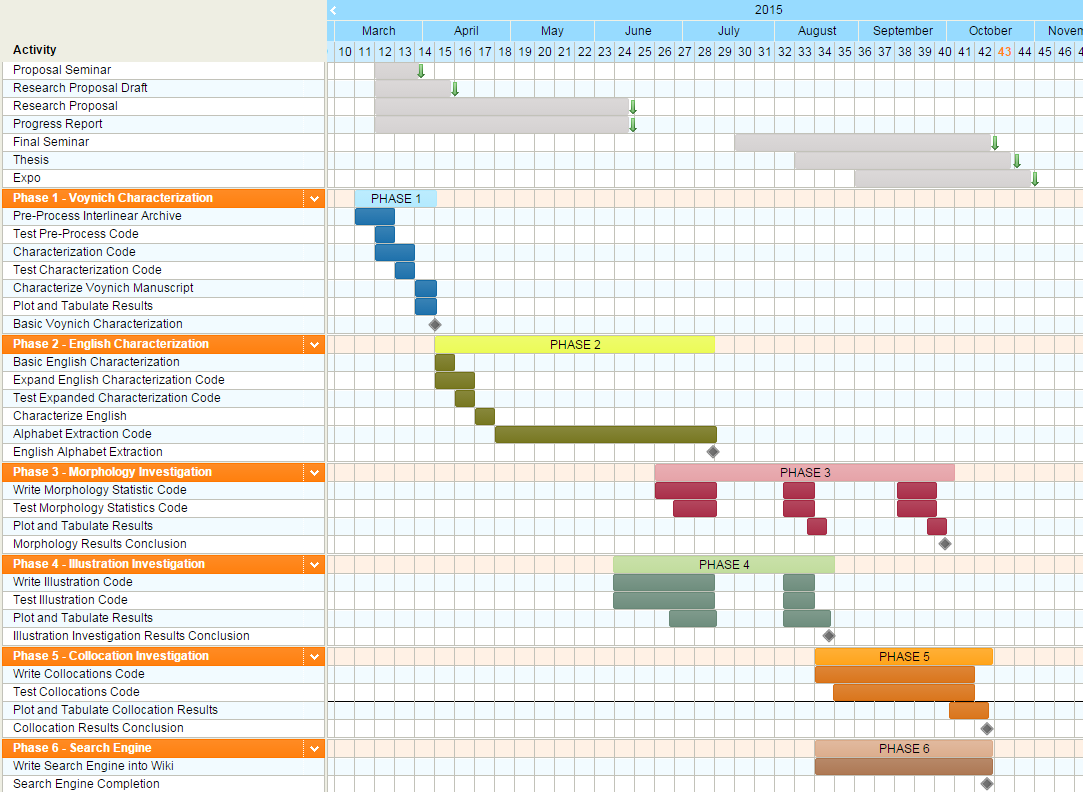

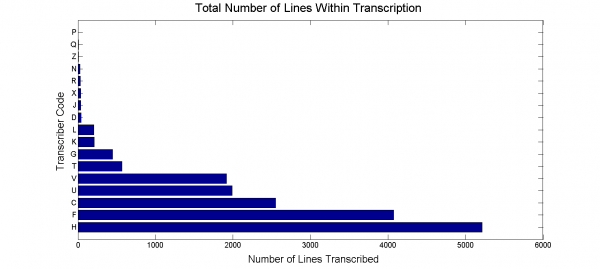

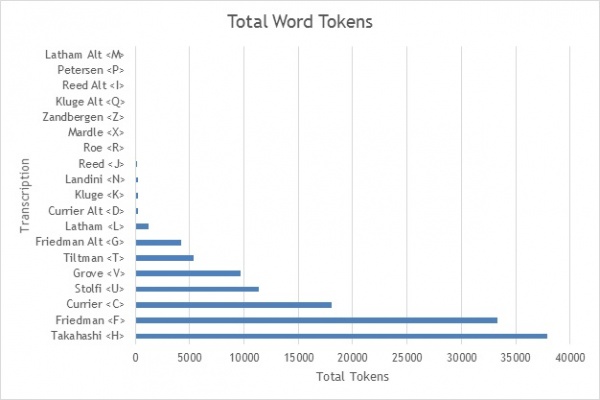

To get the most accurate results, the team needed to determine which transcriptions are the most complete. With any statistical research, the sample size is an important factor [31]. A larger sample size will give a broader range of the possible data and hence form a better representation for analysis. Shi and Roush [11] suggest that the Takahashi transcription was the most complete transcription by checking the total number of lines transcribed. A test of the number of transcribed lines per transcriber and the number of transcribed word tokens per transcriber was performed, giving the results in Figures 3 and 4 (see Appendix A.6 for a complete list of transcriber codes and their meaning).

This shows that Takahashi has the most complete transcription, in agreement with the conclusion of Shi and Roush [11].

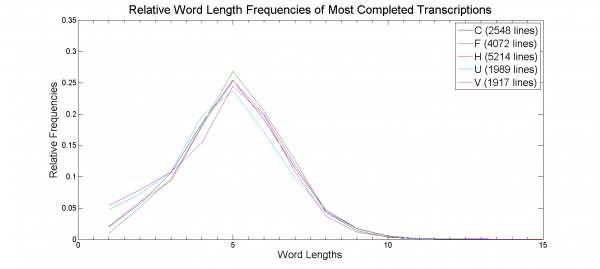

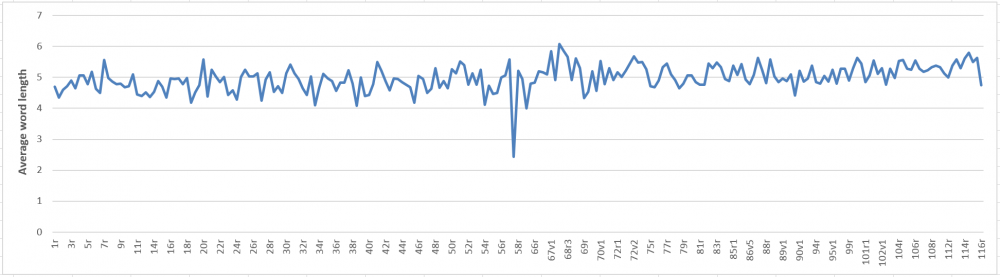

A comparison of the word-length distributions of the five most complete transcriptions was then carried out. Takahashi’s transcription showed a peculiarity: a single word token of length 35, with the next longest being of length 15. However, this word token was composed mainly of unknown ‘*’ characters and was therefore removed from our data set. This resulted in the word-length distribution plot in Figure 4.

This result, again, conforms to the results found by Shi and Roush [11], showing a peak word length of 5 and an unusual binomial distribution. This can also be seen in Reddy and Knight [2]. However, Reddy and Knight specifically investigated the word lengths of language B within the Voynich Manuscript.

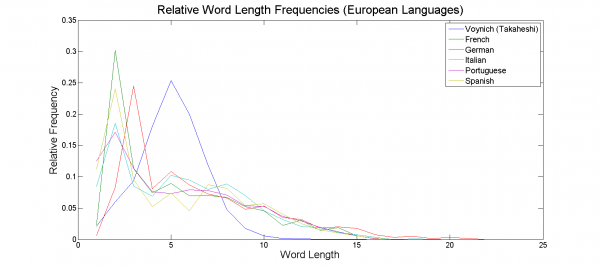

The Universal Declaration of Human Rights was also mined for relative word length distributions. This is, unfortunately, limited to a much smaller number of tokens than the Voynich Manuscript but should give a good indication as to which languages to investigate further.

As it is believed that the Voynich originated from Europe [2], European languages were initially compared with the results found above. Using the Takahashi transcription, as it is the most complete, resulted in the following word-length distribution plot in Figure 5.

Many European languages were removed from the plot to make it more readable. Regardless, the resulting conclusion was the same, as no tested European language appeared to fit the peak word length and binomial distribution of the Voynich Manuscript. Shi and Roush [11] found similar results, but also showed that the language within the manuscript had a closer resemblance to Hebrew. Reddy and Knight [2] tested Buckwalter Arabic, Pinyin, and ‘de-voweled’ English, resulting in much closer relationships. All gave the appearance of a binomial distribution much like the manuscript, with Buckwalter Arabic being very similar to Voynich Language B. This leads to the hypothesis that the manuscript may be written in an abjad [2].

Looking specifically at the Takahashi transcription, the following first-order statistics of the full transcription were found (as shown in Table 1).

| Statistic | Result - Excluding Extended EVA Characters | Result - Including Extended EVA Characters |
|---|---|---|
| Total Word Tokens | 37919 | 37919 |
| Total Unique Word Tokens | 8151 | 8172 |
| Total Character Tokens | 191825 | 191921 |
| Total Unique Character Tokens | 23 | 48 |
| Longest Word Token | 15 | 15 |

Table 1: First-Order Statistics (Takahashi)

The Takahashi transcription was also characterized based on the different sections, as outlined in Appendix A.2, and is summarized in Table 2 and Table 3 below.

| Section | Total Word Tokens | Total Unique Word Tokens | Total Character Tokens | Total Unique Character Tokens | Longest Word Token |
|---|---|---|---|---|---|
| Herbal | 11475 | 3423 | 54977 | 23 | 13 |
| Astronomical | 3057 | 1630 | 15777 | 20 | 14 |
| Biological | 6915 | 1550 | 34681 | 20 | 11 |
| Cosmological | 1818 | 834 | 9289 | 21 | 13 |
| Pharmaceutical | 3972 | 1668 | 20168 | 21 | 15 |
| Recipes | 10682 | 3102 | 56933 | 21 | 14 |

Table 2: Takahashi First-Order Statistics By Section - Excluding Extended EVA Characters

| Section | Total Word Tokens | Total Unique Word Tokens | Total Character Tokens | Total Unique Character Tokens | Longest Word Token |
|---|---|---|---|---|---|
| Herbal | 11475 | 3441 | 55040 | 44 | 13 |
| Astronomical | 3057 | 1630 | 15781 | 23 | 14 |
| Biological | 6915 | 1550 | 34684 | 22 | 11 |
| Cosmological | 1818 | 834 | 9290 | 22 | 13 |
| Pharmaceutical | 3972 | 1668 | 20180 | 24 | 15 |
| Recipes | 10682 | 3102 | 56946 | 29 | 14 |

Table 3: Takahashi First-Order Statistics By Section - Including Extended EVA Characters

From Tables 1, 2, and 3 above it can clearly be seen that the majority of character tokens used, 99.95% (191825 of 191921), are those of the basic EVA. It can also be observed that the majority of the extended EVA character tokens are found within the Herbal section of the Voynich Manuscript (from Tables 2 and 3).

All word and character tokens of each transcription have also been recorded along with the frequency with which each occurs.

Looking further at the Takahashi transcription, the following first-order statistics were found.

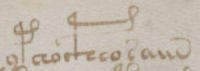

The longest word token appears in folio f87r in the Pharmaceutical section. The word within the Takahashi transcription is “ypchocpheosaiin”; the original in the Voynich Manuscript is shown in Figure 6 on the right.

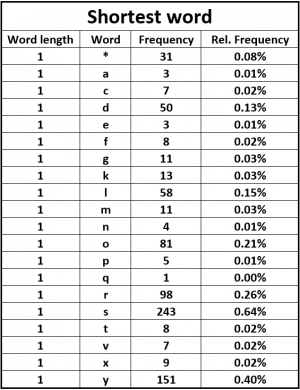

The length of the shortest word tokens in the Voynich Manuscript is 1, which means they are single-character word tokens. Table 4 below shows the statistics of all the single-character word tokens in the Voynich Manuscript.

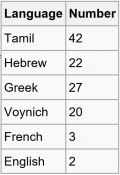

As it is believed that the Voynich originated from Europe [2], the number of single-letter words was compared with that of several languages, as some European languages have more single-letter words than others. The table on the left shows the number of single-letter words in different languages. As can be seen from the table, the total number of single-letter words in the Voynich is close to that of Greek and Hebrew and quite different from that of French and English. In Greek and Hebrew, single letters are used to represent numbers. Two folios, 49v and 66r, are typical examples that have more single-letter words than most of the other folios. Both folios have a column of single letters in the left margin of their paragraphs. Pictures of the two folios are shown above. Using the Takahashi transcription, the letters are "f o r y e * k s p o * y e * * p o * y e * d y s k y" on folio 49v, and "y o s sh y d o f * x air d sh y f f y o d r f c r x t o * l r t o x p d" on folio 66r. In English writing conventions, the order of paragraphs is often indicated by a series of numbers or letters in the left margin. The single letters in these folios follow this convention, so the letters may represent numbers. This also suggests that the language within the manuscript more closely resembles Hebrew.

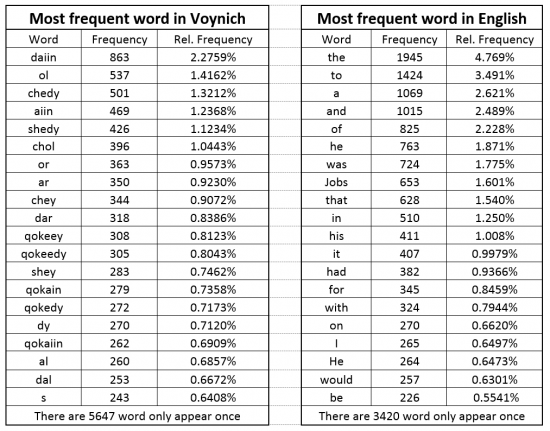

Word frequency is helpful when investigating unknown languages. The most frequent word tokens in the Voynich Manuscript are shown in Table 5 below. For comparison, English literature was also investigated, and its data is also recorded in Table 5.

The literature for the English investigation was chosen based on the Voynich Manuscript’s statistics. The total number of word tokens within the Voynich Manuscript is 37919, and the total word token count for the English literature is 40786. Although the total word counts are similar, the number of word tokens that appear only once is quite different: 5647 for the Voynich and only 3420 for the English text.

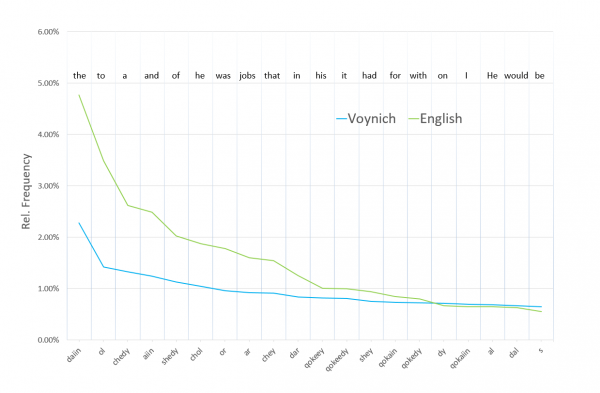

From Figure 7 it can be clearly seen that the shapes of the two data sets are similar, and that the gap between them narrows after the 10th most frequent word.

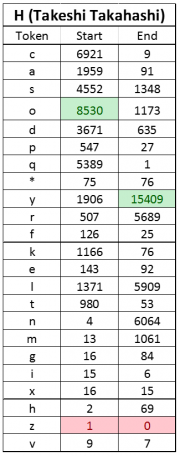

Table 6 on the right shows the statistics of character tokens appearing at the start and end of word tokens within the Takahashi transcription. The token z appears the fewest times at the start and end. Token o appears most often at the start, with a frequency of 8530, and token y appears most often at the end, with a frequency of 15409. In almost all writing systems, punctuation occurs at word edges, and most punctuation marks are found only at the end of words. In the Voynich, as can be seen from Table 6, no character token appears only at the end of words. In the traditional sense, there is therefore likely no punctuation in the Voynich.

All word and character tokens of each transcription have been recorded along with the frequency with which each occurs. Note that the character tokens are currently limited to the basic EVA characters (see Appendix A.4), but this is being expanded to include the extended EVA characters. The data and statistics from Phase 1 are basic and can be used in the remaining phases.

Phase 2: English Investigation

Introduction

Characters within a text can be divided into various different categories. Within the English language, characters can be broadly divided into:

- Alphabet Tokens

- Numerical Tokens

- Punctuation Tokens

This experiment aimed to expand on the basic character statistics found in Phase 1. By incorporating character bigrams, the data could be used to attempt to categorise the characters from texts into possible alphabet and non-alphabet tokens. Utilizing MATLAB code written to determine the basic character frequencies and character bigrams, English text would be passed into MATLAB and categorised into the two different categories.

The statistics and extraction code could then be executed over the Voynich Manuscript to determine if any characters within the Voynich may fall into the possible non-alphabet character category. Note that the extended EVA characters were ignored as they are character tokens which rarely appear, hence not enough data would be available for them to be properly categorised.

Method

The Alphabet extractor has gone through multiple different attempts to improve the performance and reliability. In general, the extractor used simple rules to determine if the character token is of a specific category. These include:

1. Does the character token only (or the vast majority) appear at the end of a word token?

Tokens that only appear at the end of a word token are generally only punctuation characters when using a large sample text or corpus. However, depending on the type of text, some punctuation characters may appear before another punctuation character, hence the majority was taken into account.

2. Does the character token only appear at the start of a word token? Does this character have a high relative frequency when compared to others only appearing at the start of a word token?

In English, character tokens that only appear at the start of a word token are generally upper-case alphabet characters. Some punctuation characters may also only appear at the start of a word token, hence the relative frequencies were also taken into account.

3. Does the character token have a high relative frequency?

Tokens with a high relative frequency are generally alphabet characters, with the highest consisting of the vowels and commonly used consonants.

4. Does the character token have a high bigram ‘validity’?

Over a large English corpus, alphabetical characters generally appear alongside many more other tokens than non-alphabetical characters. Validity is defined as a bigram that occurs with a frequency greater than zero. Low validity suggests the character token is probably a non-alphabet character.
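The bigram 'validity' rule can be illustrated with the following Python sketch. It is a simplified, hypothetical reading of the rule rather than the project's MATLAB implementation: for each character token it reports the fraction of the alphabet that the token is seen next to (on either side) at least once, and it assumes bigrams are counted within whitespace-separated words. The thresholds used by the actual extractor are not given here, so none are applied.

```python
from collections import defaultdict

def bigram_validity(text):
    """For each character, the fraction of the alphabet it is seen next to."""
    words = text.split()              # assumption: bigrams stay within words
    alphabet = set("".join(words))
    partners = defaultdict(set)
    for w in words:
        for a, b in zip(w, w[1:]):    # each bigram with frequency > 0
            partners[a].add(b)        # b follows a
            partners[b].add(a)        # a precedes b
    return {c: len(partners[c]) / len(alphabet) for c in alphabet}

validity = bigram_validity("the cat, then the hat.")
```

In this small sample the letter `t` pairs with four of the eight tokens while the comma pairs with only one, which is the kind of gap the rule relies on over a large corpus.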

Results

Investigating the frequency statistics alone of each text within the English Corpus (see Appendix A.7), only a small number of tokens can be characterized as either alphabet or non-alphabet tokens exclusively. It also shows that the token data of some texts differed and that the majority of character tokens could not be exclusively characterized. The statistics of each text were combined to give the frequencies found over the entire corpus. Tables 7 and 8 below summarize the boundaries found using the combined case, which were used to give threshold values for the extraction software.

| | Category | Character Token | Relative Frequency |
|---|---|---|---|
| 1 | Non-Alphabet Token | , | 0.018952262 |
| 2 | Numerical Token | 2 | 0.005601521 |
| 3 | Upper-Case Alphabet Token | A | 0.004233199 |
| 4 | Lower-Case Alphabet Token | e | 0.118199205 |

Table 7: Highest Relative Frequency

| | Category | Character Token | Relative Frequency |
|---|---|---|---|
| 1 | Non-Alphabet Token | = | 0.000000190 |
| 2 | Numerical Token | 0 | 0.001239706 |
| 3 | Upper-Case Alphabet Token | Q | 0.000040890 |
| 4 | Lower-Case Alphabet Token | z | 0.000503139 |

Table 8: Lowest Relative Frequency

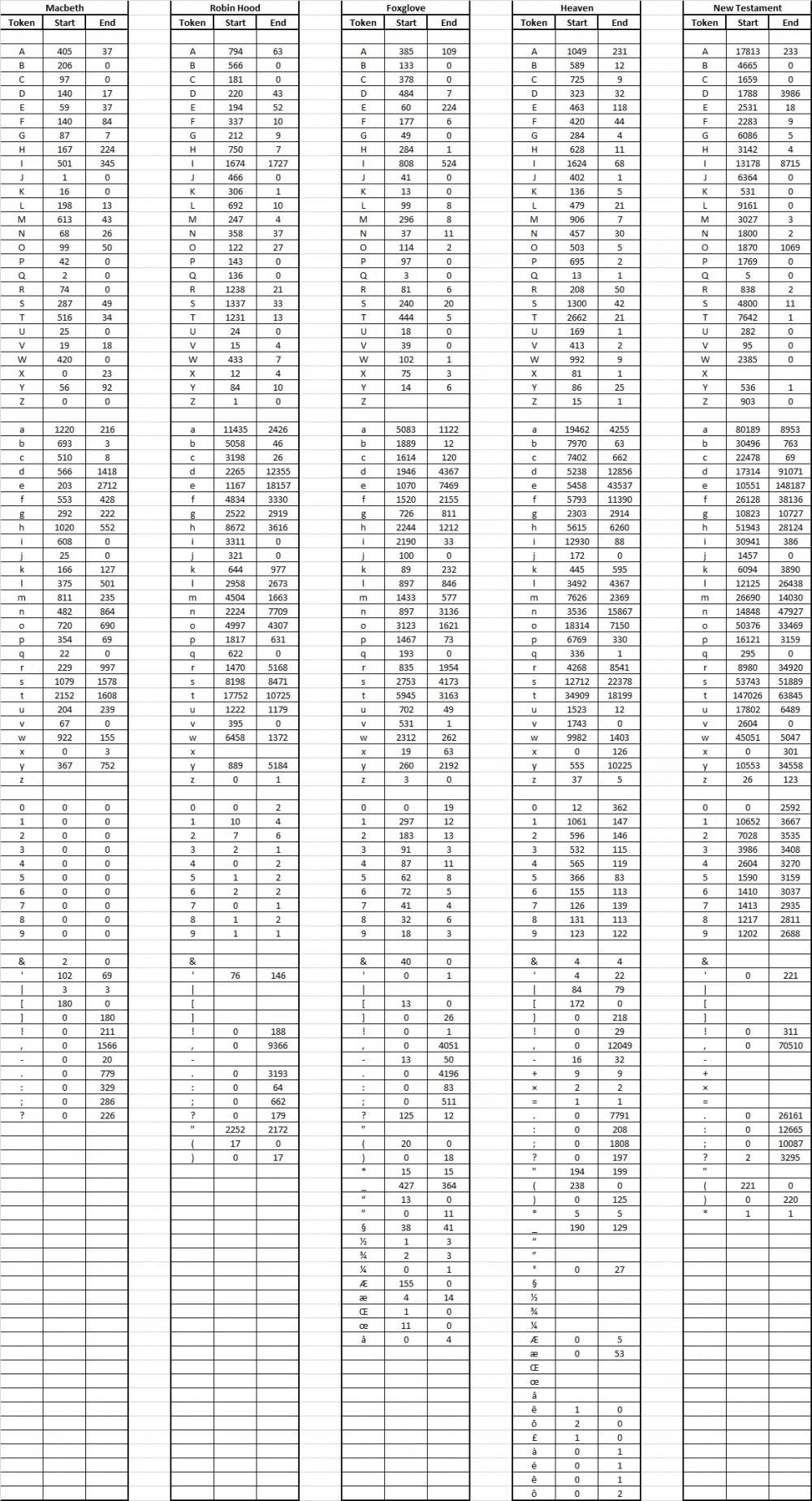

The frequency of a character token appearing at the beginning or end of a word token was then analysed to determine if any character tokens can be characterized through this data. A table of this data can be viewed in Appendix A.8. It was found that using this data alone did not allow for accurate characterization, as specific characters that may only appear at the beginning or end of a word token could be either alphabet or non-alphabet characters.

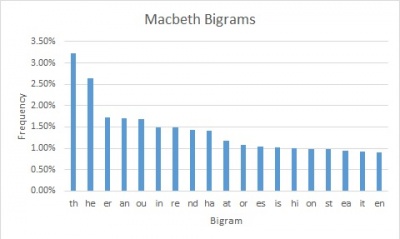

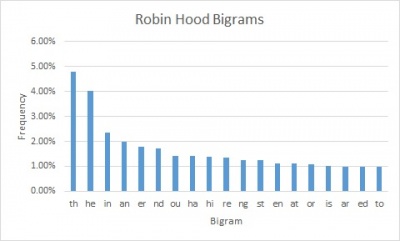

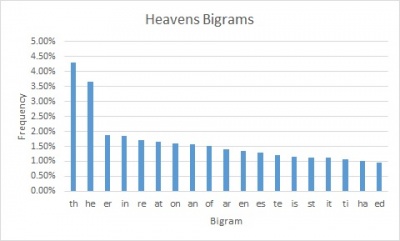

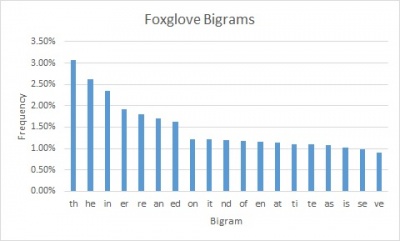

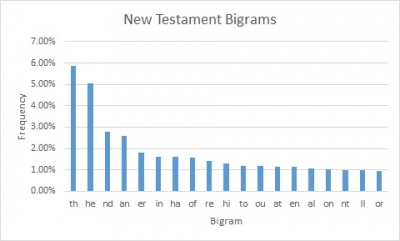

To further extend the two basic types of data used above, bigrams of the English corpus were generated and analysed. These led to much more prominent results, showing specific characters rarely appearing next to one type of character and more frequently next to another. The most frequent bigrams of each English text within the corpus are shown below in Figures 8 to 12.

As shown, 'th' and 'he' were the most frequent bigrams regardless of text.

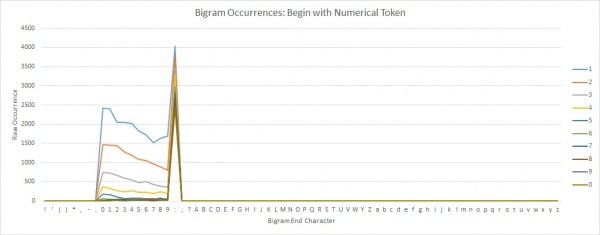

Other results showed that numerical tokens generally appear only next to other numerical tokens, with the rare occurrence of non-alphabet tokens. This is shown in Figure 13 below. Note that the high occurrence of a bigram beginning with a numerical token and ending with a colon is attributed to the writing style within the New Testament.

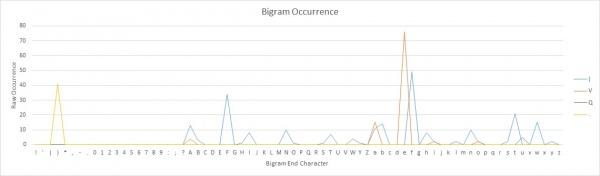

Some non-alphabet tokens proved to be more difficult to characterize due to their lower frequencies and high probability of appearing beside an alphabet token. A small example is shown below in Figure 14.

As can be seen, the bracket token appeared more often than that of the upper-case alphabet tokens. This is a specific example as the upper-case tokens were much rarer within the text than that of the more common alphabet tokens. However it does show that care must be taken during the analysis and extraction to ensure these tokens are characterized correctly.

The extraction software was used on different English texts. The initial text, not included in the English corpus, was Robert Louis Stevenson's book Treasure Island. Running the software over the text produced the lists in Table 9.

| Category | Tokens |
|---|---|
| Possible Alphabet Tokens | A B C D E F G H I J K L M N O P Q R S T U V W Y a b c d e f g h i j k l m n o p q r s t u v w y z |
| Possible Non-Alphabet Tokens | ! " ' ( ) * , - . 0 1 2 3 4 5 6 7 8 9 : ; ? X _ x |

Table 9: Treasure Island Alphabet Extraction Results

The results from Table 9 above are very good. Only two of the 74 character tokens, 'X' and 'x', were characterized incorrectly, giving an error rate of 2.703%.

However it was found that a small sample of a given text could result in high errors. The worst text tested, included within the English corpus, with the English extraction software was The New Testament (King James). This produced the following lists in Table 10 when using a sample of 10000 words.

| Category | Tokens |
|---|---|
| Possible Alphabet Tokens | ' ( 0 1 2 3 4 5 6 7 8 9 A B C D E F G H I J K L M N O P R S T U W Y Z a b c d e f g h i j k l m n o p q r s t u v w x y z |
| Possible Non-Alphabet Tokens | ! ) , . : ; ? |

Table 10: The New Testament (King James) Alphabet Extraction Results

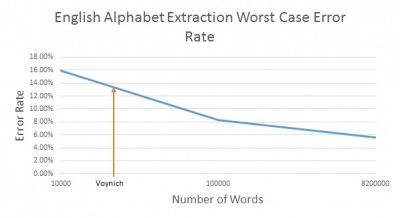

The results here are much worse than those initially received. This large increase in errors shows that the algorithm used within the software does not return accurate results over a small sample. The error rate was then plotted against sample size, in words, to show what could be expected at different sample sizes, as seen in Figure 15 below.

As can be seen, the Voynich is expected to have a high error rate. Regardless of this, the Voynich was still passed through that version of the extraction code and returned the following results in Table 11 below.

| Category | Tokens |
|---|---|
| Possible Alphabet Tokens | a c d e f g h i k l m n o p q r s t x y |
| Possible Non-Alphabet Tokens | v z |

Table 11: Voynich Extraction Results

Discussion

Based on the worst-case error rate graph, it can be clearly seen that the Alphabet extractor does not function with an acceptable error rate when using texts of small sample sizes. This can be attributed to higher variability in both the frequencies and bigrams. As mentioned by Church and Gale, bigrams can be highly dependent on the sample size [14]. This means that bigrams that do not appear in one sample may appear in another which, based on the rules being used, can affect the results. For example, examining the character data shows that the character ‘X’ never appears within the tested New Testament, meaning that none of the bigrams that occurred included the character ‘X’. Using smaller sample sizes may result in other missing characters.

It was also found that the Alphabet extractor gave less error on basic novels (Robin Hood) than on the New Testament, the worst case tested. A simple comparison of the regular lower-case alphabet and numerical tokens shows a significant difference in statistics. Hence the different writing styles affect the extractor by varying the corresponding relative statistics [13].

With this knowledge, the results given from the Voynich are likely to be error prone, as shown by the error rate graph in Figure 15. When examined, the two reported possible non-alphabet characters had very low frequencies within the text, and neither character token appeared only at the start or end of a word token. Therefore there is not enough data to reliably say that either character token is a non-alphabet character. Based on these results and the small alphabet size, it is highly likely that the basic EVA characters do not represent any non-alphabet characters. This does not mean non-alphabet characters are not represented in the text, as they may be represented using combinations or sequences of character tokens, similar to numerical representations in Roman and Greek.

As the rules used to determine the categorisation of a character token are generalisations made from data obtained using relatively small English texts, the categorisation of the possible alphabet characters may also have errors. Any categorisation of other languages needs further analysis.

Further modifications to the categorisation rules or the addition of more statistical measures may lead to better results in the future. However, as writing style can affect the results, any alphabet extraction on different languages will be based on the data obtained from English and may cause further errors. Note that other investigations into character frequencies and bigrams used significantly larger corpora [14] [13], which should give more accurate statistics than those obtained here.

Conclusion

The output of the Alphabet extractor shows that it is possible, to a certain extent, to distinguish between alphabet and non-alphabet characters based on simple character and bigram frequencies. However, the current implementation requires a large sample size to give an acceptable error rate and showed significant variations depending on the writing style of the input text. This was highlighted in other research papers as an area to be aware of [14] [13].

The extraction of the Voynich characters suggests that the Voynich is unlikely to contain any distinct punctuation characters, as no character tokens appeared only at the start or end of a word token. As the extractor is biased towards English, these results give only a very basic indication of this and rely on the Voynich character and bigram data following relationships similar to those of English.

Phase 3: Morphology Investigation

Introduction

Linguistic morphology, broadly speaking, deals with the study of the internal structure of words [32]. It can be divided into several different categories, depending on the grammar, with the most basic being between inflection, the changes to the tense, gender, number, case, etc. of a word, and word-formation, the derivation and compounding of separate words [33].

English and many other languages contain many words that have some form of internal structure [28] that can fall into these categories. These internal structures can take multiple different forms, depending on the language itself, with the most common structural units being suffixes and prefixes [23]. This is also known as concatenative morphology.

Within this small experiment, the most common affixes in English are found and compared with those found within the Voynich Manuscript. Due to the unknown word structure and relatively small size of the Voynich, this experiment defines an affix as a sequence of characters that appears at a word edge. Using this basic affix definition, a simple ranking of the affixes of various lengths could reveal potential relationships between the Voynich and other known languages through the use of the language comparison corpus.

Methods

The affix extraction method exploits the simple definition given to affixes in this paper. That is, an affix is a sequence of characters that appears at a word edge. Text is read into a MATLAB script which finds all character sequences of a set length that begin at the start of a word or finish at the end of a word, and computes their relative frequencies. Any word whose length is less than or equal to the set length is ignored. An example of extracted suffixes of character length 3 is given below.

- Word Token: example, Extracted Suffix: ple

- Word Token: testing, Extracted Suffix: ing
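The extraction rule described above can be sketched in a few lines. The sketch below is written in Python purely for illustration and is not the team's MATLAB code; the function name `extract_affixes` and its parameters are hypothetical.

```python
from collections import Counter


def extract_affixes(words, length, kind="suffix"):
    """Count fixed-length character sequences at the word edges.

    Illustrative sketch only (hypothetical helper, not the project's code).
    Words whose length is less than or equal to `length` are ignored,
    following the rule stated in the text.
    """
    if kind not in ("prefix", "suffix"):
        raise ValueError("kind must be 'prefix' or 'suffix'")
    counts = Counter()
    for word in words:
        if len(word) <= length:
            continue  # word is too short to carry an affix of this length
        affix = word[:length] if kind == "prefix" else word[-length:]
        counts[affix] += 1
    return counts


# The two word tokens from the example above.
suffixes = extract_affixes(["example", "testing"], 3)
```

With the two example word tokens, `suffixes` holds one count each for `ple` and `ing`. A three-letter word such as `the` would be skipped at length 3, since it has no characters beyond the affix itself.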

The extracted affixes are then ranked by frequency, from the most frequent (rank 1) to the least frequent, and kept grouped by character length. These are plotted and compared with those found in the Voynich Manuscript and other languages. The aim of the comparisons is to determine whether any of the languages within the corpus show similarities in affix frequency.
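As a rough illustration of the ranking step, the Python sketch below turns affix counts into ranked relative frequencies. It assumes that the relative frequency is the affix count divided by the total number of affixes extracted at that length, and that ties are broken alphabetically; the report does not specify either choice, and `rank_affixes` is a hypothetical name.

```python
from collections import Counter


def rank_affixes(counts):
    """Return (rank, affix, relative_frequency) tuples, rank 1 = most frequent.

    Assumptions (not stated in the report): frequencies are normalised by
    the total number of extracted affixes, and ties are broken alphabetically.
    """
    total = sum(counts.values())
    ordered = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [
        (rank, affix, count / total)
        for rank, (affix, count) in enumerate(ordered, start=1)
    ]


# Hypothetical counts for suffixes of length 3.
ranked = rank_affixes(Counter({"ing": 3, "ple": 1}))
```

Ranking each character length separately keeps the rank 1 and rank 2 values comparable across texts of different sizes, which is what the later language comparison relies on.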

All punctuation was removed from every text used, through a simple C++ code, as the results from the previous investigations suggested that there is no punctuation within the Voynich Manuscript. The extraction from the Voynich Manuscript used the simplified Takahashi transcription with the extended EVA characters removed.
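A minimal sketch of this kind of punctuation stripping is given below. It is written in Python rather than the C++ used by the team, and it assumes that "punctuation" means any character in a Unicode punctuation category, which may be broader or narrower than what the original code removed. The name `strip_punctuation` is hypothetical.

```python
import unicodedata


def strip_punctuation(text):
    """Remove every character whose Unicode category starts with 'P'.

    Assumption: Unicode punctuation categories stand in for whatever
    character set the original C++ code removed.
    """
    return "".join(
        ch for ch in text if not unicodedata.category(ch).startswith("P")
    )


cleaned = strip_punctuation("Hello, world!")
```

Using Unicode categories rather than an ASCII list means that marks such as French guillemets are also removed, which matters when the comparison corpus contains several languages.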

Results

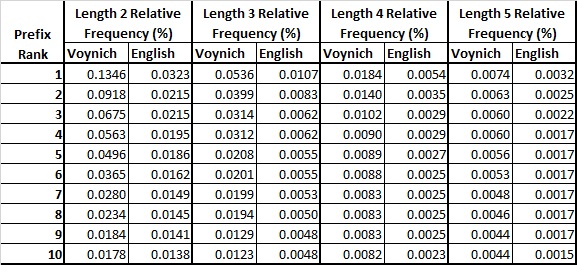

The initial results compared the affixes of character lengths two to five from a section of the English text Robin Hood with those found within the Voynich. The results of the prefix extraction and ranking can be seen in Table 12 below.

The results of the suffix extraction and ranking can be seen in Table 13 below.

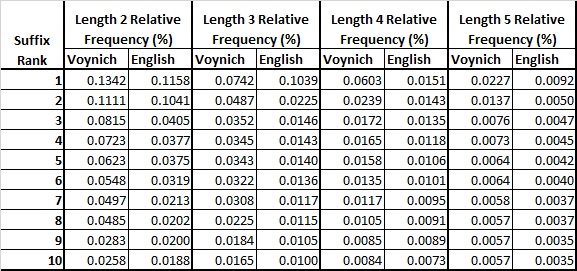

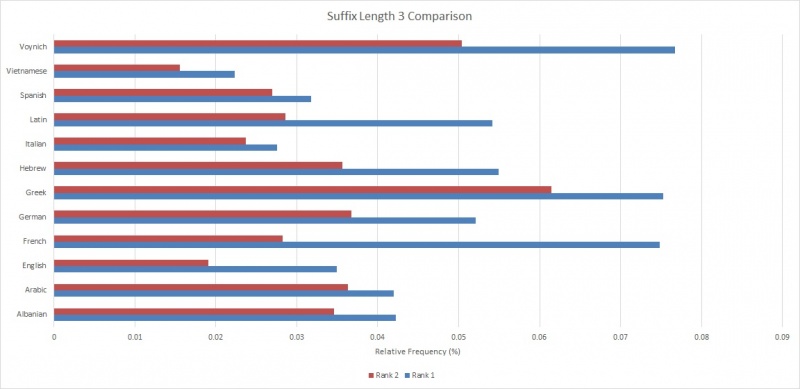

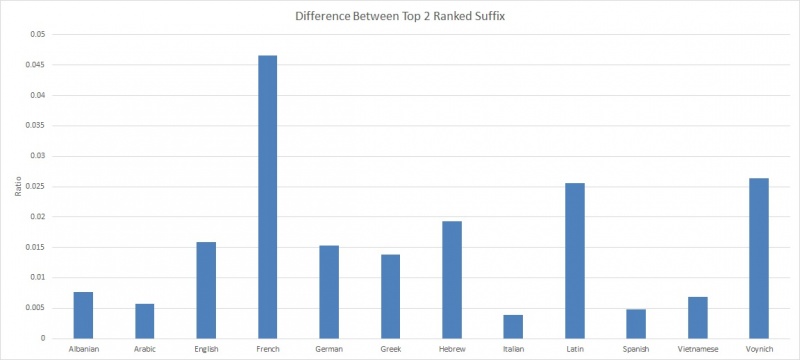

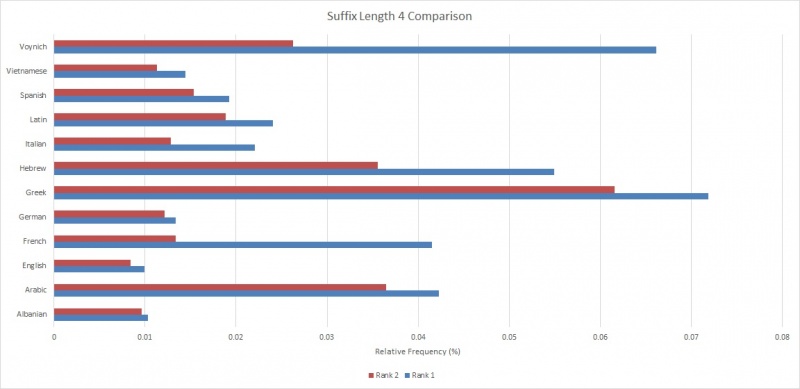

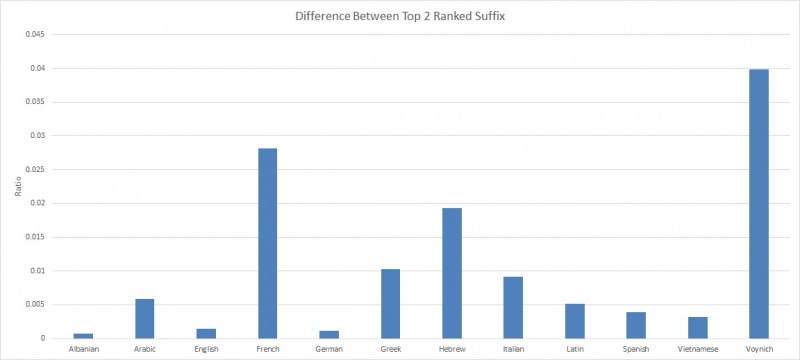

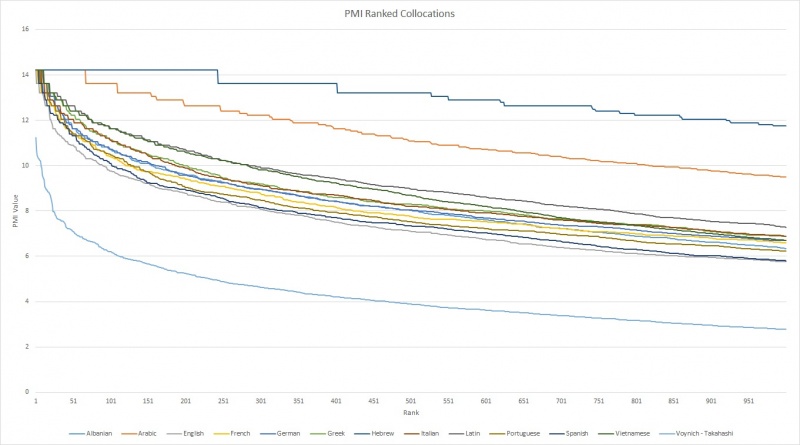

Further testing was completed on the suffixes of character length 3 and 4, as these showed the greatest variability between the Voynich Manuscript and English. In particular, there are significant differences between the top two ranked suffixes, hence the frequencies of the top two ranked suffixes were found for the various languages within the corpus and compared. The results for character length 3 suffixes are shown in Figures 16 and 17, and the results for character length 4 suffixes are shown in Figures 18 and 19 below.

Discussion

The initial findings of the prefix and suffix comparisons between English and the Voynich do not appear to give any definitive relationships. From the prefix data in Table 12, it can be seen that, when only the top 10 frequency-ranked prefixes are considered, the Voynich prefixes are considerably more frequent than the English prefixes over the entire range. This relationship does not appear to change significantly as the prefix character length is increased.

The suffix data in Table 13 shows a more significant difference: English has values very similar to those of the Voynich at length 2, a higher rank 1 suffix frequency at length 3, and much lower values at lengths 4 and 5. The values at length 4 for English even show an almost linear relationship, while the Voynich shows an exponential decay. With such a significant difference between the length 3 and 4 suffixes, the team decided to focus on these two lengths across the various languages within the corpus.

Examining the length 3 and 4 comparisons of the corpus in Figures 16 to 19 shows that, in terms of relative frequency, only Greek appears to have a relationship with the Voynich Manuscript at length 3, with a similar rank 1 suffix relative frequency but a larger rank 2 suffix relative frequency. French also appears to have a similar rank 1 suffix frequency but a significantly lower rank 2. When comparing the difference between the two ranked suffixes, Latin has a difference ratio very similar to that of the Voynich.

At length 4, Greek again appears to have a possible relationship with the Voynich, showing a similar rank 1 but a much higher rank 2. However, when comparing the difference ratio between the two ranked suffixes, French has the closest relationship but is still significantly different.

It should be noted that morphology is full of ambiguities [23], which depend on the language. These findings give only very baseline experimental data that assumes a very basic definition of an affix and is completely restricted to concatenative morphology. Much more precise data may be obtained by using a stronger definition of an affix that takes into account proper word stems or roots. Unfortunately, the small sample size available from the Voynich does not allow for accurate morphological extraction, as previous research on known languages required significantly larger sample sizes.

Conclusion

The data here does not give any conclusive findings. It does show that there may be possible morphological relationships, albeit weak, between the Voynich and other languages, in particular Greek, Latin and French, when using a naïve definition of an affix as a sequence of characters at the edge of a word.

It may also suggest that there is some form of morphological structure within the Voynich Manuscript, but these results are not sufficient to conclude this definitively. Further research is required.

Phase 4: Illustration Investigation

Introduction

The Voynich Manuscript is an illustrated manuscript written in an unknown language. No one can understand the language, but some of the illustrations can be identified. This phase examines the possible relations between the texts, words and illustrations.

Results

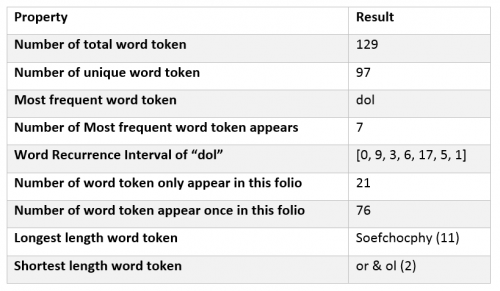

Folio f102r2 was selected as the starting point of the investigation. It was chosen because there is a “frog” in the top right corner, as shown in Figure 20. As the language of the Voynich Manuscript is unknown, it is helpful to start from elements that can possibly be identified. The image of the folio is in Appendix A.9.

As shown in Table 14, the following statistics were found in folio f102r2:

There are 21 word tokens that appear only in folio f102r2 and appear only once. These are unique word tokens: they do not appear in any other folio within the whole Voynich Manuscript. The word tokens are:

airam, chefoly, dethody, desey, eeey, kockhas, kolor, lsais, olockhy, opolkod, qkeeey, qkeeod, qoochey, qyoeey, skekyd, soeees, soefchocphy, sossy, ydeeal, ykeockhey.
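The idea of a unique word token can be expressed as a simple count over the whole transcription. The Python sketch below is illustrative only: it assumes the transcription has already been split into a dictionary mapping each folio identifier to its list of word tokens, and both the function name `folio_unique_tokens` and the toy data are hypothetical.

```python
from collections import Counter


def folio_unique_tokens(folios, folio_id):
    """Tokens that occur exactly once in the whole text, inside `folio_id`.

    `folios` maps a folio identifier to its list of word tokens
    (an assumed data layout, not the project's actual format).
    """
    overall = Counter(tok for toks in folios.values() for tok in toks)
    return sorted(tok for tok in set(folios[folio_id]) if overall[tok] == 1)


# Toy data with made-up tokens, not taken from the transcription.
toy = {"f1": ["aa", "bb", "cc", "aa"], "f2": ["bb", "dd"]}
unique_in_f1 = folio_unique_tokens(toy, "f1")
```

In the toy data only `cc` is unique to `f1`: `aa` occurs twice in that folio and `bb` also occurs in `f2`.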

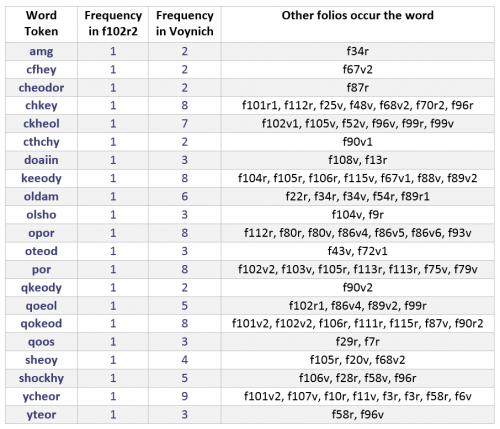

There are also 21 word tokens that appear once in folio f102r2 and fewer than 10 times in other folios. Table 15 below shows the statistics of these word tokens.

The unique words in the folio may describe the unique illustrations in the folio. In this folio there are 21 unique word tokens and 12 illustrations. No conclusion can be drawn without further information.

There are 2 word tokens which appear once in folio f102r2 and once in one other folio. These were selected to be investigated first: amg and cheodor. They occur in folios f34r and f87r respectively. The images of the three folios are in Appendix A.9.

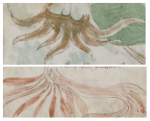

The word token amg occurs in folios f102r2 and f34r, and the word token cheodor occurs in f102r2 and f87r. All three folios contain both text and illustrations. The illustration in f34r is shown in Figure 21 on the right and the illustration in f87r is shown in Figure 22. They appear to be a form of plant with leaves, roots and corollas. There are plants in f102r2 too; however, the plants in f102r2 do not have corollas.

The similarities between f102r2 and f34r are:

- They both contain the word token amg

- They both contain plant illustrations

- The plants in both folios have roots

Figure 21 on the right shows the similar root parts of the two folios' illustrations; the top one is from folio f102r2 and the bottom one is from f34r. Based on this comparison, the word amg in the Voynich Manuscript may be used as a description of the plant roots, as shown in Figure 23. Again, this is only a crude conclusion, and further information is needed for the investigation.

Figure 24 below shows the total number of words and the number of unique words on each folio.

The folio with the most unique words is 58r, which has 367 words in total, of which 100 are unique. Folio 65r has no unique words and the total number of words on 67r is only 3. The total number of words in the Voynich is 37919 and the number of unique words is 5647. The average number of total words per folio is 168.52, and the average number of unique words per folio is 25.09. Most of the folios contain only one illustration, so the unique words in such a folio may all be related to that illustration; however, the number of unique words per folio is too high. It is therefore not possible to determine which unique words are used only to describe the illustration.
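Per-folio totals and averages of this kind could be computed along the following lines. This Python sketch assumes that a unique word is a token occurring exactly once in the whole manuscript, as defined earlier for folio f102r2, and that the transcription is available as a dictionary of folio identifiers to token lists. The name `folio_statistics` and the toy data are hypothetical.

```python
from collections import Counter


def folio_statistics(folios):
    """Return per-folio (total, unique) counts and the two per-folio averages.

    Assumption: a "unique" word is a token that occurs exactly once in the
    whole text. `folios` maps folio identifiers to lists of word tokens.
    """
    overall = Counter(tok for toks in folios.values() for tok in toks)
    stats = {
        fid: (len(toks), sum(1 for tok in toks if overall[tok] == 1))
        for fid, toks in folios.items()
    }
    n = len(stats)
    avg_total = sum(total for total, _ in stats.values()) / n
    avg_unique = sum(unique for _, unique in stats.values()) / n
    return stats, avg_total, avg_unique


# Toy data with made-up tokens, not taken from the transcription.
toy = {"f1": ["aa", "bb", "cc"], "f2": ["bb", "dd", "dd", "ee"]}
stats, avg_total, avg_unique = folio_statistics(toy)
```

For the toy data, `f1` has 3 words of which 2 are unique and `f2` has 4 words of which 1 is unique, giving averages of 3.5 and 1.5 per folio.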

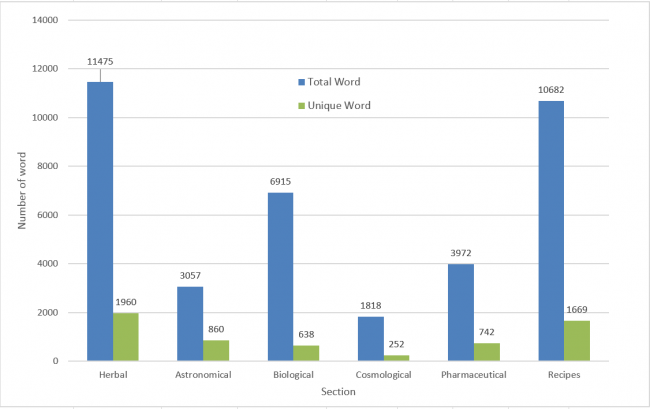

Figure 25 below shows the total number of words and the number of unique words in each section.

The number of unique words of each section is also too high to analyse.

Figure 26 below shows the average word length on each folio. It can be seen from the figure that the average word length on every folio is around 5, which is reasonable.

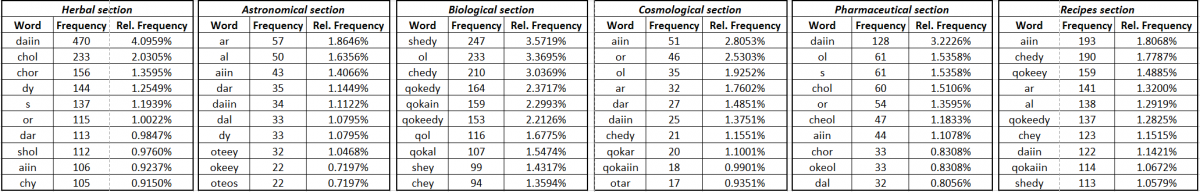

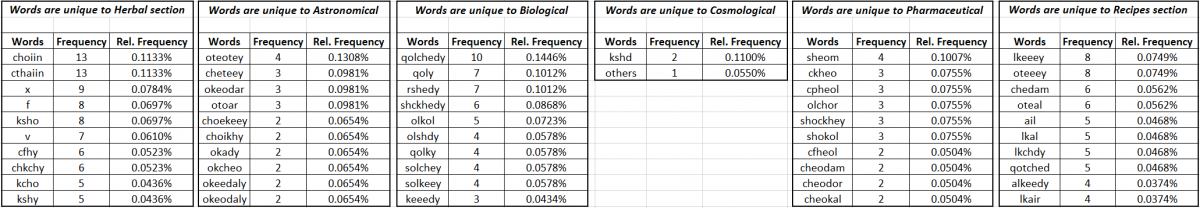

Table 17 below shows the 10 most frequent words of each section.

Table 18 below shows the 10 most frequent unique words of each section.

Methods of Analysis

Two basic methods were used throughout the investigation.

Method 1:

- Find the unique words in this section

- Select one unique word to analyse

- Analyse the folios which contain this word

- Find the similar illustrations between these folios

- If the illustrations are similar, the word may be used to describe the similarity.

Figure 27: "choiin"
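The text-based steps of Method 1 can be sketched as follows; comparing the illustrations remains a manual step. The Python sketch assumes that a word is unique to a section when it appears in no folio outside that section, and that the folio-to-section assignment is available as a dictionary. The function names and the toy data are hypothetical.

```python
from collections import Counter


def section_unique_counts(folios, section_of, section):
    """Count the words that appear only inside the given section.

    `folios` maps folio identifiers to token lists and `section_of` maps
    folio identifiers to section names (both assumed layouts).
    """
    inside = Counter()
    outside = set()
    for fid, toks in folios.items():
        if section_of[fid] == section:
            inside.update(toks)
        else:
            outside.update(toks)
    return Counter({tok: n for tok, n in inside.items() if tok not in outside})


def folios_containing(folios, word):
    """List the folios whose text contains `word`."""
    return sorted(fid for fid, toks in folios.items() if word in toks)


# Toy data with made-up tokens and folio names.
toy = {"h1": ["aa", "bb", "aa"], "h2": ["aa", "cc"], "p1": ["bb", "dd"]}
sections = {"h1": "Herbal", "h2": "Herbal", "p1": "Pharmaceutical"}
top_word, top_count = section_unique_counts(toy, sections, "Herbal").most_common(1)[0]
candidates = folios_containing(toy, top_word)
```

The resulting list of folios is the set whose illustrations would then be inspected by eye for similarities.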

The most frequent unique word of the Herbal section, "choiin", was selected to be analysed first. Figure 27 on the right shows the original "choiin" in the Voynich Manuscript. The following folios all contain the word "choiin".


It can be seen from the folios above that, although they all contain the word "choiin", no clear similarity can be found.

Further information about the folios that contain a given word can be found in the search engine. In the search engine, all the words in the Voynich Manuscript whose frequency is between 5 and 15 are listed in a table by initial letter.

Method 2:

- Find similar illustrations first.

- Analyse the texts in the folios which have similar illustrations.

- Find the common words that appear in all of the folios.

- If the common words are unique to these folios, the words may be used to describe the similar illustrations.
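Once the folios with similar illustrations have been chosen by eye, the word comparison in Method 2 reduces to set operations. The Python sketch below is an illustration under the assumption that each folio's text is available as a list of word tokens; the function names and the toy data are hypothetical.

```python
def common_words(folios, selected):
    """Words that appear in every one of the selected folios."""
    sets = [set(folios[fid]) for fid in selected]
    return set.intersection(*sets) if sets else set()


def exclusive_common_words(folios, selected):
    """Common words that appear in no folio outside the selection."""
    elsewhere = set()
    for fid, toks in folios.items():
        if fid not in selected:
            elsewhere.update(toks)
    return common_words(folios, selected) - elsewhere


# Toy data with made-up tokens and folio names.
toy = {
    "a": ["x", "y", "w"],
    "b": ["x", "y"],
    "c": ["x", "y", "z"],
    "d": ["y", "q"],
}
shared = common_words(toy, ["a", "b", "c"])
exclusive = exclusive_common_words(toy, ["a", "b", "c"])
```

In the toy data `x` and `y` are common to the three selected folios, but only `x` is exclusive to them because `y` also appears in folio `d`. An empty exclusive set corresponds to the case where the common words are frequent throughout the manuscript.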


The pictures above are from folios 17v, 99r and 96v. As shown in the pictures, the illustrations in the three folios are quite similar. Folio 17v is from the Herbal section, while folios 96v and 99r are from the Pharmaceutical section. Table 19 on the right shows the common words of the three folios. It can be seen from the table that the frequencies of the common words in the three folios are very high and that the words are not unique to these folios. The common words appear in most folios of the manuscript, which makes it difficult to establish any relation between the words and the similar illustrations.

Conclusion