Final Report/Thesis 2015


Revision as of 20:45, 17 October 2015

Executive Summary

Introduction

Motivation

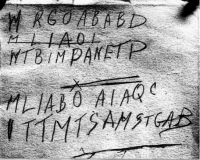

On the 1st of December, 1948, a dead body was found at Somerton Beach, South Australia [1]. There was no evidence to establish the man’s identity or the cause of death [2]. However, 5 lines of capital letters, with the second line struck out, were found on a scrap of paper in the dead man’s trouser pocket [3]. A photo of the paper containing the letters can be seen in Figure 1. It was later discovered that the scrap of paper was torn from a book known as the 'Rubaiyat of Omar Khayyam' [4]. These letters are considered vital to the case as it is speculated that they may be a code or cipher of some sort. As engineers, we have the ability to help investigators in solving the case. With that in mind, this project is being undertaken to attempt to decrypt the code in order to help solve the cold case.

The South Australian Police stand to benefit from this project not only through the decoding technology developed for this case, but also because that technology may be applicable to similar cases. Historians may be interested in gaining further historical information from this project since the case occurred during the heightened tension of the Cold War, and it is speculated that this case may be related in some way [5]. Pathologists may also be interested as the cause of death may have been an unknown or undetectable poison [6]. This project stands to benefit the wider community, as well as the extended family of the unknown man, by providing closure to the mysterious case. Professor Derek Abbott also stands to benefit as he has been working closely with honours project students for the past seven years in an attempt to decipher the Somerton Man code.

Previous Studies/Related Work

Previous professional attempts to decipher the code were limited since they did not use modern techniques or have access to modern databases. Another limitation is that some of the characters in the code appear to be ambiguous and previous attempts made fixed assumptions on particular characters [7]. The Australian Navy’s response was that the letters were “neither a code nor a cipher” [8]. The Australian Department of Defence analysed the characters in 1978 using the computer technology of that era and concluded:

- a) “There are insufficient symbols to provide a pattern”

- b) “The symbols could be a complex substitute code or the meaningless response to a disturbed mind”

- c) “It is not possible to provide a satisfactory answer” [9]

Other previous studies into deciphering the code include Honours Projects at the University of Adelaide from 2009-2013. The previous work undertaken by these groups includes: multiple evolutions of letter frequency analysis of the code on a variety of base texts in a number of languages, initial letter and sentence letter probabilities, the probabilities of known cipher techniques, the likelihood of the code being an initialism of a poem, the use of various one-time pad techniques, the design and implementation of a web crawler, the analysis of text type and genre of the code’s likely plaintext, the implementation of pattern matching software into the web crawler, a 3D generated reconstruction of the bust of the Somerton Man (see Figure 2) and the analysis of mass spectrometer data taken from the Somerton Man’s hair [10] [11] [12] [13] [14]. The main conclusions that past groups have come to in their projects are: that the letters are unlikely to be random, the code is likely to be an initialism, it is likely that the Rubaiyat of Omar Khayyam was used as a one-time pad, the language of the code is likely to be English, the code is unlikely to be an initialism of a poem and that the Rubaiyat of Omar Khayyam was not used as a straight substitution one-time pad [15] [16] [17] [18] [19]. The analysis and extension upon specific elements of previous work that are directly related to the 2015 group’s project are discussed in the 'Method - Specific Tasks' section.

Aims and Objectives

This project had four key aims. The first was to statistically analyse the likely language of the plaintext of the code. The second was to design and implement software to try to decipher the code. This was to be implemented by using the 'Rubaiyat of Omar Khayyam' as a one-time pad in conjunction with a new key technique, and by developing a search engine to try to discover possible n-grams contained within the code. The third aim was to analyse mass spectrometer isotope concentration data of the Somerton Man’s hair. Finally, the ultimate aim was to decrypt the code in order to solve the mystery; however, this was somewhat unrealistic as the code has remained uncracked for many years. Despite this, computational techniques were to be utilised to attempt the decryption, and at the very least, the past research into the case was to be furthered for future Honours students.

Significance

Considered “one of Australia’s most profound mysteries” at the time [20], this case remains unsolved today. As the development of decoder technology and the related knowledge progresses, this project poses the opportunity to uncover further case evidence. The skills developed in undertaking this project were also of great significance in a broader sense, as these can be transferable to possible future career paths. The techniques developed include: software and programming skills, information theory, probability, statistics, encryption and decryption, data mining and database trawling. The job areas and industries that these skills can be applied to are: computer security, communications, digital forensics, computational linguistics, defence, software, e-finance, e-security, telecommunications, search engines and information technology. Some possible job examples include working at: Google, ASIO, ASIS and ASD [21].

Technical Background

P-Value Theorem Explanation

Chi-Squared Test Explanation

Universal Declaration of Human Rights Explanation

Project Gutenberg Explanation

N-Gram Model Explanation

One-Time Pad Explanation

Knowledge Gaps and Technical Challenges

Method - Specific Tasks

Task 1: Statistical Frequency Analysis of Letters

Aim

Method

Results

Evaluation and Justification

Task 2: N-Gram Search

Aim

The aim of Task 2 was to create a search engine to look for regular expressions that could be linked to the Somerton Man code. Numerous studies from previous groups showed that it is statistically likely for the Somerton Man code to be an English initialism (see Previous Studies/Related Work section). Based on this, for this task an assumption was made that the code is an initialism. A search engine was to be developed that used the letters of the code as initial letters of words in commonly used English phrases. This concept was to be explored further using a technique that accesses a larger database in much shorter time than the web crawler developed by groups in previous years (see Previous Studies/Related Work section). Using an n-gram database, rather than crawling the whole web for grams, had the advantage that the crawling had already been done and all grams had been recorded. This was to drastically increase the speed at which the gram combinations on the web could be found. The search engine was required to output a list of possible grams from the input letter combinations. An assumption that was to be made in order to complete this task was that the letters in the code, and thus the words in the grams, were order-relevant. Another assumption was that all variants of ambiguous letters in the code were to be included.

Method

Research into the available databases was conducted and it was found that the two largest databases that best suited our needs were 'Microsoft Web N-Gram Services' [22] and Google N-Gram [23]. The use of Microsoft Web N-Gram Services was initially considered more favourable due to its larger database [24], better documentation and tutorials [25], and lower initial cost (see Budget section). Despite these advantages, upon further research and after consulting the Microsoft Web N-Gram Services, it was discovered that using the service for our purposes would require a best-first DP search, with a very large number of calls to the N-gram service's generate method. Also, through consulting our project supervisor Dr. Berryman, there was a concern that a combinatorial explosion would occur, meaning that for a 5-gram search, if the Microsoft database contained, say, 50,000 words, the search engine would have to make 50,000^5 + 50,000^4 + 50,000^3 + 50,000^2 + 50,000 calls to the database to complete the search. Due to all the network calls required to use this method, the Microsoft Web N-Gram Service was deemed unfit for purpose in our application, since the method would not be fast enough to complete all of the searches that we required. Due to this, the Google alternative was considered.
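To give a sense of scale for the combinatorial explosion described above, the sum can be evaluated directly. The following is a minimal sketch only; the function name is hypothetical and the 50,000-word vocabulary is the illustrative figure used in the text, not a measured property of the Microsoft database.

```python
def calls_for_exhaustive_search(vocabulary_size: int, max_n: int) -> int:
    """Count every word sequence of length 1..max_n over a vocabulary.

    Each sequence would correspond to one call to the remote n-gram service
    if the search were carried out exhaustively.
    """
    return sum(vocabulary_size ** n for n in range(1, max_n + 1))


# Illustrative vocabulary size taken from the text above.
total = calls_for_exhaustive_search(50_000, 5)
print(f"{total:,} calls (about {total:.3e})")
```

The 5-gram term dominates the sum, so the total is roughly 3.1 x 10^23 calls, which illustrates why a method based on one network call per candidate was not considered practical.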

The search engine was initially to be implemented via web application using developer tools such as Microsoft Visual Studio or Eclipse [14], for use with Microsoft's Web N-Gram Service. The application could be programmed in native languages including Visual Basic, Visual C#, Visual C++, Visual F# and Jscript [26]. Instead, the programming language of Python was chosen to be used in conjunction with the Google N-Gram database through advice from Dr Berryman and due to its ease of use and efficiency with text processing.

Upon deciding to use the Google N-Gram database, a decision was to be made whether to purchase the University of Pennsylvania's Linguistic Data Consortium version or to obtain it for free directly from Google. The Linguistic Data Consortium's version of the database was initially chosen as an alternative since it had the advantage of a concise and clean nature, with all total n-gram frequencies summed and collated. Upon discussion with supervisor Dr. Berryman, it was decided that it was not worth purchasing the cleaned version of the database since we could extract the data we needed from the raw database and the outputs could be cleaned up easily enough by writing some simple Python scripts. Due to this, it was decided that the database provided by Google was to be used for our purposes (see Budget section for further details).

Although the database could physically be stored locally, the local computing power available would have been insufficient to run the search engine code through the database within the time frame of the project. Instead, a cloud based computing service with increased processing power was sought out to be able to complete the database search within the time restrictions of the project. Upon considering a number of options, it was decided that 'Amazon Elastic Compute Cloud' was to be used due to its robust storage and processing options [27] and Dr Berryman's prior experience in using this service. The Amazon EC2 free tier was assessed for use but had a 30GB storage limit [28], which was insufficient to store the Google N-gram database on. In addition to this, the instance sizes provided by the Amazon EC2 free tier were t2.micro instances, meaning that they provided 1 vCPU, 1 GiB of RAM and only 20% of each vCPU could be used [29]. Based on this, it was estimated that running the search on this version of the Amazon Elastic Compute Cloud would have taken approximately 20 months, which was far too long for the project timeframe. Instead, it was proposed to use the high input/output Amazon i2 tier to provide the performance needed to store and process the database. After some experimentation with different tiers, two i2.xlarge instances run on Amazon EC2 were proposed to be used, providing two sets of instances, each containing 4 vCPUs, 30.5 GiB of RAM, and 2 x 800 GB SSD Storage [30]. Using this tier allowed for parallelisation by running separate processes for each group of n-gram inputs from n=1-5.

The initial n-gram search code was written in Python and submitted to our GitHub repository for review. Based on advice from project supervisor Dr Berryman, it was discovered that the code would work on a small data set, but since our data set was so large (1.79 Tebibytes when compressed), the code was modified to fit the suggested workflow and run in parallel on Amazon instances.

The maximum value of n provided by the gram database was five. Due to this, a maximum of five letter gram groups from the code could be processed at a time. This was achieved by writing a Python script to generate all possible 5-gram initialisms from all code variants, including the crossed out line, and output them into a corresponding text file. The same was done for 4, 3, 2 and 1-grams, which were stored in their respective text files. These were to be used as input files from which the search engine was able to perform searches to query the database.
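The generation of input letter groups can be sketched as follows. This is an illustration under stated assumptions rather than the script used in the project: the function names are hypothetical, the example line is illustrative rather than a transcription of the code, and it is assumed that every run of n consecutive letters in each variant is wanted.

```python
from itertools import product


def expand_variants(positions):
    """Return every reading of a line, given the candidate letters at each position."""
    return ["".join(letters) for letters in product(*positions)]


def letter_groups(line, n):
    """Return every run of n consecutive letters in a line (assumed windowing)."""
    return [line[i:i + n] for i in range(len(line) - n + 1)]


def initialism_inputs(positions, n):
    """Collect the unique n-letter groups across all variants of a line."""
    groups = set()
    for variant in expand_variants(positions):
        groups.update(letter_groups(variant, n))
    return sorted(groups)


# Illustrative line only: the first position is treated as an ambiguous W/M.
example_line = [["W", "M"], ["R"], ["G"], ["O"], ["A"], ["B"]]
for n in range(1, 6):
    print(n, initialism_inputs(example_line, n))
```

Representing each position as a list of candidate letters keeps the treatment of ambiguous characters in one place, so adding a further ambiguity only changes the input data and not the generator.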

The search engine code was also written in Python, and took in the initialism combinations in text files created by the initialism generator code, ...

by counting unique entries? etc. A bug in the code caused the frequency of occurrence of grams in each year to be lost, so the count of the number of years in which each gram occurred was used as the measure of frequency.

Running our code on the Google N-Gram database stored in the i2.xlarge instances in parallel for each group of n-gram inputs from n=1-5 took approximately two weeks. These raw results were then small enough to be stored and processed locally and so the Amazon EC2 service was no longer required.

Once the raw results were obtained, some grams contained words followed by an underscore and the corresponding lexical category of the word (i.e. noun, verb, adverb, adjective, pronoun etc.). These tags were not wanted, so another Python script was written to remove everything but the words themselves from each line in the results.
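A minimal sketch of this kind of clean-up is given below. It is not the project's script: the function name is hypothetical, and the list of tag names is an assumption about the part-of-speech suffixes that appear in the raw results.

```python
import re

# Assumed set of lexical category suffixes, e.g. "man_NOUN" or "old_ADJ".
_POS_SUFFIX = re.compile(r"_(NOUN|VERB|ADJ|ADV|PRON|DET|ADP|NUM|CONJ|PRT|X)$")


def strip_lexical_categories(gram: str) -> str:
    """Remove a trailing lexical category tag from each word in a gram."""
    return " ".join(_POS_SUFFIX.sub("", word) for word in gram.split())


print(strip_lexical_categories("the_DET old_ADJ man_NOUN"))
```

Anchoring the pattern to the end of each word means that underscores inside a word are left alone and only a recognised trailing tag is removed.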

Upon processing the raw results, the output of the lexical category removal showed multiple identical results, each with its own frequency for the number of years in which it occurred. This was brought about since previously the database considered these entries to be unique results, but now with the lexical categories removed, some results became identical. This was rectified by writing another Python script to process these cleaned results, combining identical entries and summing the number of years in which they occurred. This script was then duplicated and modified into two scripts: the first output the results sorted in alphabetical order, and the second in order of the number of years in which each result occurred, from highest to lowest. The alphabetically sorted outputs were used as a means of comparison to the cleaned inputs since these were also sorted alphabetically, in order to check that the code was functioning correctly. The frequency sorted outputs were more useful since they were able to be used to generate a condensed list of the top 30 most popular initialisms that could be generated from the letters from all variations in the Somerton Man code, seen in the results section in Figure X.
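The merging and sorting steps can be sketched as below. This is an illustration only, assuming each cleaned result is available as a (gram, number of years) pair; the function names and the sample rows are hypothetical.

```python
from collections import Counter


def merge_identical_grams(rows):
    """Combine identical grams and sum the number of years recorded for each."""
    totals = Counter()
    for gram, years in rows:
        totals[gram] += years
    return totals


def sorted_alphabetically(totals):
    """Order results by gram, for comparison against the cleaned inputs."""
    return sorted(totals.items())


def sorted_by_year_count(totals):
    """Order results from the highest year count to the lowest (ties by gram)."""
    return sorted(totals.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical rows: the same gram appears twice after tag removal.
rows = [("the old man", 12), ("an old man", 30), ("the old man", 5), ("a big dog", 3)]
totals = merge_identical_grams(rows)
print(sorted_by_year_count(totals)[:30])  # condensed "top 30" style listing
```

Keeping the merge separate from the two orderings means that both output files are produced from the same totals, which makes the alphabetical listing a fair check on the frequency listing.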

Finally, a Python script was written to generate all possible combinations of the top 2 5-gram group results for each variant of the code, where the top 2 results were based on the number of years in which they occurred. This was achieved using a non-overlapping sliding window of 5 letters in length. This was used as an exercise to see if any interesting or useful results could come about using this simple method. Unfortunately, this produced nonsensical results because the search results for each 5-gram group were unrelated to those of the neighbouring groups. These can be seen in the results section in Figure X. Due to the time constraints of the project, the code was not able to be developed any further, but the code and the results it provides can be used as a first step towards obtaining meaningful or useful combinations of n-grams from the results obtained using the search engine developed throughout this project. This code could be improved by using a sliding window that progresses by less than 5 letters for each search. For example, using a step size of 1 letter would create the maximum possible overlap of 4 letters between each input gram group. More information on this and other suggested improvements can be found in the future work section.
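The difference between the non-overlapping window and the suggested overlapping window can be sketched as follows. The function names and the sample search results are hypothetical, and it is assumed here that letters left over after the last full window are simply dropped.

```python
from itertools import product


def letter_windows(code, size=5, step=5):
    """Split a line of the code into windows of `size` letters.

    step == size gives non-overlapping windows; step == 1 gives the maximum
    overlap of size - 1 letters between neighbouring windows.
    """
    return [code[i:i + size] for i in range(0, len(code) - size + 1, step)]


def combine_top_results(windows, top_results, keep=2):
    """Join every combination of the top `keep` search results per window."""
    choices = [top_results[window][:keep] for window in windows]
    return [" ".join(parts) for parts in product(*choices)]


# Hypothetical search results, already sorted by year count.
top_results = {"ABCDE": ["x1", "x2", "x3"], "FGHIJ": ["y1", "y2"]}
windows = letter_windows("ABCDEFGHIJ")       # ['ABCDE', 'FGHIJ']
print(combine_top_results(windows, top_results))
print(letter_windows("ABCDEFG", step=1))      # overlapping alternative
```

With overlapping windows, neighbouring results share up to 4 initial letters, so a later stage could require the shared words to agree before two results are joined.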

Results

Evaluation and Justification

The Google N-Gram database is smaller than the Microsoft option (ref), but the Microsoft option did not suit our application. A limitation of using the Google N-Gram database is that it only includes grams that have appeared more than 40 times [31].

Task 3: Rubaiyat of Omar Khayyam as a One-Time Pad

Aim

Method

Results

Evaluation and Justification

Task 4: Statistical Frequency of Letters Reanalysis

Aim

Towards the end of the project, a decision was made that for Task 4, rather than analysing the mass spectrometer data from the Somerton Man's hair, we would focus our efforts on reanalysing the letter frequencies of various European languages. This was decided upon since our initial analysis performed in Task 1 produced inconsistent and varied results. This was brought about by the limited sample size of the Universal Declaration of Human Rights as a base text, which caused particular letters to appear 0 times in particular languages. Due to this, these letter frequencies had to be altered by choosing arbitrary numbers for their frequency in order to perform our Chi-Squared testing, which reduced the accuracy and validity of the test's results.

The limited sample size also caused the chi-squared values for all languages, including English, to be large. This caused the resulting calculated P-values to be extremely small numbers, or in most cases 0. Because of this, the P-values could not be used to perform our initially proposed hypothesis testing from Task 1.

This caused us to question the validity of the Universal Declaration of Human Rights as a base text and so we sought to increase our sample size using alternate base texts and extend our original statistical analysis.

Method

It was decided that for the reanalysis, we would use Project Gutenberg to increase the sample size for as many of the 21 most popular European languages used in Task 1 as possible by collecting novels from the time before the Somerton Man's death. This was chosen to be used as our base corpus in an attempt to obtain a more accurate representation of the initial letter frequencies of words in these languages. Novels in each language were concatenated and their letter frequencies were determined, until each letter appeared at least once in each language.

The 2013 group’s decoding toolkit and initial letter frequency count code were able to be utilised for this task. The decoding toolkit's 'format texts' function was used to remove all non-letter characters and symbols as well as punctuation and accented letters, and the initial letter frequency counter was run on all of our base and benchmark sample texts in order to obtain the data we needed to perform our statistical analysis.

Of the top 21 most popular European languages from Task 1, only 12 of the languages were able to be used in the reanalysis due to insufficient usability or availability of texts on Project Gutenberg. The languages used in the analysis can be seen in the graph in Figure X. The omitted languages were Greek, Russian, Serbian, Kurdish, Uzbek, Turkish, Ukrainian, Belarusian and Kazakh.
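The formatting and counting steps can be sketched as below. This is not the 2013 group's toolkit: the function names are hypothetical, and it is assumed that anything outside the 26 unaccented letters is deleted and that counts are taken over the first letter of each remaining word.

```python
import re
import string
from collections import Counter


def format_text(text: str) -> str:
    """Delete everything except unaccented letters and whitespace, then upper-case."""
    return re.sub(r"[^A-Za-z\s]", "", text).upper()


def initial_letter_counts(text: str) -> Counter:
    """Count how often each letter A-Z starts a word in the formatted text."""
    counts = Counter({letter: 0 for letter in string.ascii_uppercase})
    for word in format_text(text).split():
        counts[word[0]] += 1
    return counts


def missing_letters(counts: Counter) -> list:
    """Letters that never start a word; more text is needed while this is non-empty."""
    return [letter for letter in string.ascii_uppercase if counts[letter] == 0]


counts = initial_letter_counts("The old man, the sea.")
print(counts["T"], missing_letters(counts))
```

A check such as `missing_letters` reflects the stopping rule described in the Method: further novels would be concatenated to the base text until the returned list is empty.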

Validation

The same test that was run on the declaration was also run on the new Gutenberg base text.

In addition to this, the Somerton Man code and a set of 44-letter samples were all compared to both the statistics obtained from the English translation of the Universal Declaration of Human Rights and the statistics gathered from the novel used as our English base text found on Project Gutenberg. The samples were taken from a Thomas Hardy novel, acting as an English control, as well as a French sample, a German sample and a Zapoteco sample from the Universal Declaration of Human Rights. Results are... Figure X.

Validation 2

Using twenty 44-letter excerpts from English novels from before the Somerton Man’s death as controls.

Preliminary results: significance level

- Comparing the chi-squared and p-values of actual English samples and the code against our English section of the Gutenberg corpus.

- Rather than choosing some arbitrary value of significance level, we have chosen to calculate a significance level using real English texts.

- Using this method, our preliminary results show that our null hypothesis is not rejected and that English is the most likely language of origin of the code, assuming that it is an initialism.

English p-value benchmark

Hypothesis test:

- H0: The group of letters are from the English language

- H1: The group of letters are from another language

Second attempt:

- Increase English sample size by collecting 20 novels and concatenating them to form our base sample corpus

- Take 100 44-letter samples out of this corpus

- Calculate chi-squared value and p-values for each

- Take average of these

- Use this as our significance level to perform hypothesis testing

I.e. rather than choosing some arbitrary significance level of, for example, 0.05, we have calculated what we deem to be an acceptable significance level for which we can confidently say that the most likely language of the Somerton Man code is English.
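The benchmark procedure can be sketched as follows. This is a minimal illustration under stated assumptions, not the analysis code used in the project: the function names are hypothetical, the corpus is assumed to be available as a string of initial letters, samples are assumed to be contiguous runs drawn at random, and only the chi-squared statistic is averaged here.

```python
import random


def chi_squared(observed, expected_props):
    """Pearson chi-squared statistic of observed counts against base-text proportions.

    Assumes every observed letter has an entry in expected_props.
    """
    n = sum(observed.values())
    statistic = 0.0
    for letter, proportion in expected_props.items():
        if proportion == 0:
            # The zero-frequency problem met with the declaration as a base text.
            raise ValueError(f"expected frequency of {letter!r} is zero")
        expected = n * proportion
        statistic += (observed.get(letter, 0) - expected) ** 2 / expected
    return statistic


def benchmark_statistic(corpus_initials, expected_props, samples=100, size=44, seed=0):
    """Average the statistic over random `size`-letter samples from the corpus."""
    rng = random.Random(seed)  # fixed seed so the benchmark is repeatable
    statistics = []
    for _ in range(samples):
        start = rng.randrange(len(corpus_initials) - size + 1)
        sample = corpus_initials[start:start + size]
        observed = {letter: sample.count(letter) for letter in expected_props}
        statistics.append(chi_squared(observed, expected_props))
    return sum(statistics) / len(statistics)
```

Each statistic could be converted to a p-value with, for example, `scipy.stats.chi2.sf(statistic, df)`, where taking `df` as the number of letter categories minus one is an assumption; the average of those p-values would then play the role of the calculated significance level described above.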

The chi-squared method is still not entirely mathematically accurate, since the minimum expected frequency for each letter of the code should be five. This was not the case, but since this was constant across all languages, the method can still be used as a means of comparing the ‘goodness of fit' of letters in each language.

The reanalysis with the new base texts is still not mathematically perfect, since the chi-squared test's rule of a minimum expected frequency of 5 is not met, but it is definitely an improvement over the declaration as a base text since we were able to increase the sample size until each letter appeared at least once.
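The effect of the minimum expected frequency rule on a 44-letter sample can be checked with a short calculation. The sketch below is illustrative only; the function names are hypothetical and the proportions in the example are made up, not measured values.

```python
def smallest_valid_proportion(sample_size=44, minimum=5):
    """Smallest base-text proportion that gives an expected count of `minimum`."""
    return minimum / sample_size


def letters_below_minimum(expected_props, sample_size=44, minimum=5):
    """Letters whose expected count in the sample falls below the rule of thumb."""
    return sorted(
        letter
        for letter, proportion in expected_props.items()
        if sample_size * proportion < minimum
    )


print(round(smallest_valid_proportion(), 3))
# Made-up proportions for three letters, for illustration only.
print(letters_below_minimum({"T": 0.16, "A": 0.11, "X": 0.001}))
```

A letter would need to start about 11.4% of words to reach an expected count of 5 in a 44-letter sample. Since the proportions sum to 1, at most 8 letters can reach that threshold, so the rule cannot be satisfied for a full alphabet regardless of the base text used.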

Results

Evaluation and Justification

Project Management - Planning and Feasibility

Work Breakdown/Deliverables

The workload for this project was broken down into its main tasks. These can be seen in list form in the Final Project Gantt Chart (see Timeline section). The key deliverables are represented as milestones on the Gantt Chart. The dependencies of the tasks and deliverables can be seen in the Gantt Chart as black arrows. These are as follows: The Research Proposal and Progress Report have dependence on the Draft Research Proposal, which has dependence on the Proposal Seminar. Of the specific project tasks, Task 1 was completed first, and Tasks 2, 3 and 4 were completed in parallel. The Final Seminar Presentation, Project Exhibition Poster, Final Performance, YouTube video and Dump of final work are all dependent on the completion of the specific project tasks. The Final Report/Honours Thesis was completed in parallel with the rest of the work from the Research Proposal and Progress Report hand-up, onwards.

Timeline

The timeline for this project was created in the form of a Gantt Chart. The proposed Gantt Chart can be seen in Figure X.

The final Gantt Chart after all revisions and updates can be seen in Figure X.

Changes made from the originally proposed Gantt Chart to the final revised Gantt Chart include the renaming of Tasks 2 and 4 to N-Gram Search and Statistical Frequency of Letters Reanalysis. Task 2 was completed earlier than expected, but cleaning up results for presentation and finding meaningful combinations of the results took longer than expected, and so the second part of Task 2 was extended. Task 3 was also extended so that Jikai was able to complete this task. Task 4 was commenced earlier than proposed since the bulk of Task 2 was completed early. Due to this, Task 4 was completed in parallel with Tasks 2 and 3 towards the end of the project timeline. The dump of final work and project YouTube video were moved to be completed after the due date of the Final Report/Thesis upon discussion with our supervisors. Overall, our initially proposed Gantt Chart estimated our project timeline quite accurately and only minor changes needed to be made.

Task Allocation

The workload for the tasks within this project was allocated based on the strengths and skillset of each member, as well as the estimated time taken and complexity of each task. A table of the project task allocation can be seen in Figure X. The key allocations were that Nicholas Gencarelli undertook the tasks of Project Management, N-Gram Search and the Project Exhibition Poster. Jikai Yang undertook the tasks of the use of the Rubaiyat of Omar Khayyam as a One-time Pad, and the project YouTube video. The allocations did not require changing throughout the project life cycle apart from the decision for both members to perform a statistical reanalysis for Task 4 rather than both analysing the mass spectrometer data from the Somerton Man's hair.

Management Strategy

A number of management strategies were adopted throughout the project. The first was frequent face-to-face contact through regular meetings every 2-3 weeks. The second was regular communication between group members via text message and email. Collaboration was another useful strategy: if one member required assistance on a particular task, the other was able to step in and help, which was made possible by flexible task allocation. The group made use of collaborative software, including Google Drive for working together on project documents and a GitHub repository for working together on code. The project Wiki page, including the weekly progress section, was updated in real time to monitor and review the work completed by each member every week, as well as to plan tasks for the upcoming week. Finally, a Gantt chart was used to set out clearly defined tasks and goals and to establish a critical path through the task dependencies.

Budget

The project budget for this honours group was set at 500 dollars at the commencement of the project. It was initially proposed that the budget would depend on the n-gram database chosen for the search engine in Task 2. As discussed in the Method section of Task 2: N-Gram Search, a variety of options were considered, and the two largest databases were found to be Microsoft Web N-Gram Services [32] and Google N-Gram [33].

The Microsoft alternative was found to be free to use for academic purposes after applying for a user token. Because it is hosted at no cost on Microsoft’s web server, there was no need to purchase storage for the database [34].

The Google alternative was available for free when obtaining the raw dataset, or at a cost of 150 dollars for a student license when purchased from the University of Pennsylvania Linguistic Data Consortium [35]. Unlike the Microsoft alternative, if the Google N-Gram option was chosen, a portion of the budget would have had to be dedicated to storing the database. It was initially proposed to store the database on a hard drive at a cost of approximately 100 dollars.

The proposed budget can be seen in the tables highlighting the key costs of each option in Figure X.

For reasons discussed in the Method section of Task 2: N-Gram Search, the Google N-Gram database was chosen. A decision then had to be made whether to purchase the version from the University of Pennsylvania's Linguistic Data Consortium or to obtain the database for free directly from Google. The free database provided by Google was selected, since spending 150 dollars on the processed data from the Linguistic Data Consortium was not deemed justifiable when it was proposed that the raw dataset could be cleaned up by writing software.

The initial budget was based on the assumption that the Google N-Gram database could be stored locally. Although this was feasible in its compressed form, the local computing power available would have been insufficient to run the search engine code through the database within the time frame of the project. As discussed in the Method section of Task 2: N-Gram Search, a cloud-based computing service called ‘Amazon Elastic Compute Cloud’ (Amazon EC2) was utilised to store and process the database. The free tier was considered but did not provide the specifications required for our task, so instances on Amazon EC2 were hired at a rate of 0.853 dollars per hour [36]. After storing the full database, running our search code and downloading the results generated by the code, the total cost of utilising the service came to 576 dollars. This caused our project to exceed the initially proposed budget. The reason for the additional expenditure was that, despite our efforts, it was difficult to predict the precise time it would take to upload, store and process the database on the cloud service. The initially proposed budget did not include the Amazon server because the need for it could not reasonably be foreseen at the start of the project: it was initially thought that the Microsoft N-Gram Service would be suitable for the needs of the project and, if it was not, that the Google N-Gram alternative could be stored locally.
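As a rough check on these figures, the short Python sketch below converts the quoted total into instance-hours and compares it with the budget. It assumes, purely for illustration, that the whole 576 dollars was billed at the single quoted hourly rate (ignoring any separate storage or data transfer charges) and that the EC2 charge was the only expenditure; the function names are hypothetical.

```python
# Rough cost check using only the figures quoted in the Budget section.
# Assumption: the entire bill accrued at the single quoted hourly rate,
# with no separate storage or data transfer charges.

HOURLY_RATE = 0.853   # dollars per instance-hour, as quoted [36]
TOTAL_COST = 576.0    # dollars, total cost of the cloud service
BUDGET = 500.0        # dollars, initial project budget


def instance_hours(total_cost: float, hourly_rate: float) -> float:
    """Return the number of billed instance-hours implied by a total cost."""
    return total_cost / hourly_rate


def overrun(total_cost: float, budget: float) -> float:
    """Return how far the spend exceeds the budget (0.0 if within budget)."""
    return max(0.0, total_cost - budget)


hours = instance_hours(TOTAL_COST, HOURLY_RATE)
print(f"Implied usage: {hours:.0f} instance-hours (about {hours / 24:.0f} days)")
print(f"Overrun: {overrun(TOTAL_COST, BUDGET):.0f} dollars")
```

Under these assumptions the bill corresponds to roughly 675 instance-hours, or about four weeks of continuous use of a single instance, and an overrun of 76 dollars against the 500 dollar budget.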

The final revised budget can be seen in Figure X.

In conclusion, although the project went over budget, the additional funds were kindly provided by the School of Electrical and Electronic Engineering after we submitted an application for funding that included justification of our purchases. The project work benefited from the purchase of the Amazon service, since it allowed us to complete a search of specific n-gram combinations of the code on the full Google N-Gram database. This provided results to present as part of our thesis and allowed us to meet the requirements set out in the aim of Task 2.

Risk Analysis

A risk assessment covering risk identification, analysis, evaluation and treatment strategies was undertaken for this project using the Adelaide University risk matrix procedure [34][37]. This can be seen in Figure X. One of the risks that eventuated during the project was the inaccurate estimation of time and resources. This occurred because the group and supervisors were unhappy with the results obtained from the initial analysis of letter frequency performed in Task 1. It was rectified by making use of the flexibility in our schedule and replacing the initially proposed Task 4: Mass Spectrometer Data Analysis with a new Task 4: Statistical Frequency of Letters Reanalysis. Another risk that eventuated was illness, which was dealt with relatively easily by working from home for a short period of time. Minor misunderstandings of project tasks occurred on a few occasions, but these were clarified by scheduling meetings with group members and supervisors. Bugs in code were reduced as far as possible through thorough testing and debugging. Finally, the inability to decipher the Somerton Man Code was a risk estimated to have an almost certain likelihood. Although this risk could not be avoided, its effects were considered negligible: the group was still able to complete all work to the best of its ability and to further the research into the decryption of the code, not only for future honours groups but also for the wider community, by publishing our results on our Wiki.

Conclusions

Through this project, the group developed skills in using software and programming languages such as Microsoft Excel, Matlab, Java and Python.

Future Work

A bug was identified in the search code: it collected the number of years in which an n-gram occurred rather than its actual count. Future work could therefore include a reanalysis of the 5-grams using the raw count, as well as running a limited search on a smaller sample size. Further directions for future students include:

- Extend the analysis to all European languages in Gutenberg to see which languages fail the test and can be ruled out
- Focus on English and explore genre
- Further explore 5-gram combinations
- Perform Mass Spectrometer Data Analysis of the Somerton Man’s Hair
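The difference between counting years and counting occurrences can be illustrated with a minimal Python sketch. It assumes input lines in the tab-separated layout of the raw Google Books N-gram files (ngram, year, match_count, volume_count); the function names and the sample rows are invented for illustration and are not the project's actual code or data.

```python
from collections import defaultdict

# Assumed row layout: ngram <TAB> year <TAB> match_count <TAB> volume_count
# The sample rows below are made up purely to illustrate the two tallies.
SAMPLE_ROWS = [
    "my right hand is not\t1950\t12\t10",
    "my right hand is not\t1951\t7\t6",
    "my right hand is not\t1952\t3\t3",
    "going to be all right\t1950\t40\t31",
]


def parse_row(row: str):
    """Split one raw row into (ngram, year, match_count, volume_count)."""
    ngram, year, match_count, volume_count = row.rstrip("\n").split("\t")
    return ngram, int(year), int(match_count), int(volume_count)


def year_row_counts(rows):
    """Tally one per row, i.e. the number of years in which each n-gram appears."""
    tally = defaultdict(int)
    for row in rows:
        ngram, _year, _matches, _volumes = parse_row(row)
        tally[ngram] += 1
    return dict(tally)


def total_match_counts(rows):
    """Sum match_count over all years, i.e. the raw occurrence count."""
    tally = defaultdict(int)
    for row in rows:
        ngram, _year, matches, _volumes = parse_row(row)
        tally[ngram] += matches
    return dict(tally)


print(year_row_counts(SAMPLE_ROWS))
print(total_match_counts(SAMPLE_ROWS))
```

With the invented rows, the first n-gram appears in three years but 22 times in total, while the second appears in one year but 40 times, so the two tallies rank the n-grams in opposite orders. This is why a reanalysis using the raw count could change which 5-gram combinations appear most significant.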

References

- ↑ The News. (1948, December 1). Dead Man Found Lying on Somerton Beach [online]. Available: http://trove.nla.gov.au/ndp/del/article/129897161

- ↑ The News. (1948, December 1). Dead Man Found Lying on Somerton Beach [online]. Available: http://trove.nla.gov.au/ndp/del/article/129897161

- ↑ The Advertiser. (2005, March 9). Death riddle of a man with no name [online]. Available: http://www.eleceng.adelaide.edu.au/personal/dabbott/tamanshud/advertiser_mar2005.pdf

- ↑ The Advertiser. (1949, June 9). Cryptic Note on Body [online]. Available: http://trove.nla.gov.au/ndp/del/article/36371152

- ↑ Hub Pages Author. (2014, August 30). The Body on the Beach: The Somerton Man - Taman Shud Case [online]. Available: http://brokenmeadows.hubpages.com/hub/The-Mystery-of-the-Somerton-Man-Taman-Shud-Case

- ↑ Cleland. (1949). Coroner's Inquest [online]. Available: http://trove.nla.gov.au/ndp/del/article/130195091

- ↑ A. Turnbull and D. Bihari. (2009). Final Report 2009: Who killed the Somerton man? [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_report_2009:_Who_killed_the_Somerton_man%3F

- ↑ Hub Pages Author. (2014, August 30). The Body on the Beach: The Somerton Man - Taman Shud Case [online]. Available: http://brokenmeadows.hubpages.com/hub/The-Mystery-of-the-Somerton-Man-Taman-Shud-Case

- ↑ YouTube ABC. Somerton Beach Mystery 1978 [online]. Available: https://www.youtube.com/watch?v=ieczsZRQnu8

- ↑ A. Turnbull and D. Bihari. (2009). Final Report 2009: Who killed the Somerton man? [online]. Available:

- ↑ K. Ramirez and L-V. Michael. (2010). Final Report 2010 [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_Report_2010

- ↑ S. Maxwell and P. Johnson. (2011). Final Report 2011 [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_Report_2011

- ↑ A. Duffy and T. Stratfold. (2012). Final Report 2012 [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_Report_2012

- ↑ L. Griffith and P. Varsos. (2013). Semester B Final Report 2013 – Cipher Cracking [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Semester_B_Final_Report_2013_-_Cipher_cracking

- ↑ A. Turnbull and D. Bihari. (2009). Final Report 2009: Who killed the Somerton man? [online]. Available:

- ↑ K. Ramirez and L-V. Michael. (2010). Final Report 2010 [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_Report_2010

- ↑ S. Maxwell and P. Johnson. (2011). Final Report 2011 [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_Report_2011

- ↑ A. Duffy and T. Stratfold. (2012). Final Report 2012 [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_Report_2012

- ↑ L. Griffith and P. Varsos. (2013). Semester B Final Report 2013 – Cipher Cracking [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Semester_B_Final_Report_2013_-_Cipher_cracking

- ↑ The Advertiser. (1949, June 10). Tamam Shud [online]. Available: http://trove.nla.gov.au/ndp/del/article/36371416

- ↑ N. Gencarelli and J. K. Yang. (2015, March 15). Cipher Cracking 2015 [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Cipher_Cracking_2015

- ↑ COMPLETE THIS REFERENCE: http://research.microsoft.com/en-us/collaboration/focus/cs/web-ngram.aspx

- ↑ COMPLETE THIS REFERENCE: http://googleresearch.blogspot.nl/2006/08/all-our-n-gram-are-belong-to-you.html

- ↑ C. X. Zhai et al. (2010, July 19-23). Web N-gram Workshop [online]. Available: http://research.microsoft.com/en-us/events/webngram/sigir2010web_ngram_workshop_proceedings.pdf

- ↑ No Author. Microsoft Web N-Gram Service Quick Start [online]. Available: http://weblm.research.microsoft.com/info/QuickStart.htm

- ↑ No Author. Visual Studio Languages [online]. Available: https://msdn.microsoft.com/en-us/library/vstudio/ee822860%28v=vs.100%29.aspx

- ↑ COMPLETE THIS REFERENCE: https://aws.amazon.com/ec2/instance-types/

- ↑ COMPLETE THIS REFERENCE: https://aws.amazon.com/ec2/instance-types/

- ↑ COMPLETE THIS REFERENCE: https://aws.amazon.com/ec2/instance-types/

- ↑ COMPLETE THIS REFERENCE: https://aws.amazon.com/ec2/instance-types/

- ↑ COMPLETE THIS REFERENCE: http://storage.googleapis.com/books/ngrams/books/datasetsv2.html

- ↑ C. X. Zhai et al. (2010, July 19-23). Web N-gram Workshop [online]. Available: http://research.microsoft.com/en-us/events/webngram/sigir2010web_ngram_workshop_proceedings.pdf

- ↑ COMPLETE THIS REFERENCE: http://commondatastorage.googleapis.com/books/syntactic-ngrams/index.html

- ↑ C. X. Zhai et al. (2010, July 19-23). Web N-gram Workshop [online]. Available: http://research.microsoft.com/en-us/events/webngram/sigir2010web_ngram_workshop_proceedings.pdf

- ↑ T.Brants and A.Franz. (2006). Web 1T 5-gram Version 1 [online]. Available: https://catalog.ldc.upenn.edu/LDC2006T13

- ↑ COMPLETE THIS REFERENCE: https://aws.amazon.com/ec2/pricing/

- ↑ No Author. RISK MANAGEMENT HANDBOOK [online]. Available: http://www.adelaide.edu.au/legalandrisk/docs/resources/Risk_Management_Handbook.pdf

Glossary and Symbols

- ASIO: Australian Security Intelligence Organisation

- ASIS: Australian Secret Intelligence Service

- ASD: Australian Signals Directorate

- P-value theorem: The p-value is the calculated probability that gives researchers a measure of the strength of evidence against the null hypothesis [1].

- Chi-Squared Test:

- Universal Declaration of Human Rights:

- Project Gutenberg:

- N-gram model: The N-gram model is a sequence of n items from a given sequence of phonemes, syllables, letters, words or base pairs [2].

- One-time pad: The one-time pad is an encryption technique which cannot be cracked provided that the key is truly random, at least as long as the message, kept secret and never reused [3].

- Initialism: A group of letters formed using the initial letters of a group of words or a phrase [4].

- Ciphertext: The encoded format of a message [5].

- Plaintext: The original message, which is to be recovered by deciphering the ciphertext [5].

- Key: What is needed to convert the ciphertext into the plaintext using the one-time pad.

- ↑ B. David et al., “P Value and the Theory of Hypothesis Testing: An Explanation for New Researchers,” Clinical Orthopaedics and Related Research®, vol. 468(3), pp. 885-892, 2010; G G. L et al., “What is the Value of a p Value?,” The Annals of Thoracic Surgery, vol. 87(5), pp. 1337-1343, 2009; No Author. p-value [online]. Available: http://en.wikipedia.org/wiki/P-value#cite_note-nature506-1

- ↑ A. Z. Broder et al., “Syntactic clustering of the web,” Computer Networks and ISDN Systems, vol. 29(8), pp. 1157–1166; No Author. Video Lectures [online]. Available: https://class.coursera.org/nlp/lecture/17

- ↑ S.M. Bellovin. (2011, July 12). Frank Miller: Inventor of the One-Time Pad [online]. Available: http://www.tandfonline.com.proxy.library.adelaide.edu.au/doi/full/10.1080/01611194.2011.583711#abstract

- ↑ No Author. Initialism [online]. Available: http://dictionary.reference.com/browse/initialism

- ↑ No Author (2011). Topic 1: Cryptography [online]. Available: http://www.maths.uq.edu.au/~pa/SCIE1000/gma.pdf