Progress Report 2010: Difference between revisions

| Line 194: | Line 194: | ||

===Pattern Algorithm Plans=== | ===Pattern Algorithm Plans=== | ||

The major remaining | The major remaining areas of the pattern matching algorithm yet to be completed is the implementation of ignoring HTML; and the algorithm being able to search for exact and ‘similar’ initialisms as described in the Stage 1 Design. Ignoring HTML will likely be done by ignoring the characters in the text file when a “<” is encountered, and then re-start comparing characters again after a “>” is found. The method to find ‘similar’ initialisms has currently not been identified as of yet, although some ideas have been formed. The approach for doing this will likely be similar to the FindInitialism method, utilising smart and logical coding rather than a simple isWhitespace class. | ||

Once that is completed, the code will be ready to be integrated into the web crawler. This area of the project is almost finished and the future lies with the combined web crawler-pattern matching algorithm. | Additionally, further implementation could include saving the found initialisms into another text file for inspection later. Beyond that, more testing and debugging should be done and any unforeseen errors that occur should be fixed as soon as possible. Once that is completed, the code will be ready to be integrated into the web crawler. This area of the project is almost finished and the future lies with the combined web crawler-pattern matching algorithm. | ||

===Obtaining and Using Results=== | ===Obtaining and Using Results=== | ||

Revision as of 17:37, 2 June 2010

Due Date

This report is due on the 3rd of June, 2010 (Thursday, Week 12).

Executive Summary

For decades the written code that is linked to the “Somerton Man Mystery” (or Taman Shud Case) has been undecipherable and delivered no solid information about his history or identity. This project aims to bring light to the unsolved mystery by tapping into the wealth of information available on the internet.

Recent collection of random letter samples has been used to reinforce previous claims that the code is unlikely to just be a randomly generated sequence of letters. Along with thorough revision and checking of previous code used to produce results, it has been agreed that the Somerton Man code is most likely initialism.

The code found with the dead man will be used in conjunction with a suitable “Web Crawler” and specific pattern matching algorithms. The aim is to target websites of interest and filter through the raw text available in search of character patterns that are also present in the code. Once some solid results are obtained, frequency analysis will hopefully enable a solid hypothesis on some possibilities in regards to the meaning behind the code.

unfinished***

Aims and Objectives

The aim of the project is to make progression with respect to the Somerton Man Case through use of engineering techniques such as information theory, problem solving, statistics, encryption, decryption and software coding. Through use of such techniques, the results from the previous year’s project will revised and used to solidify the pathway for the rest of the project. Once past results are properly revised the next aim of the project is to attempt to utilise a web crawling device in order to access information stored in raw text on websites of interest. Specifically designed algorithms will then be used on the sourced text in order to search for patterns and reoccurrences that may be of interest or relation to the contents of the Somerton Man Code.

Project Background

Mainly taken from proposal - michael will work on and edit this part

The Case

The project is based upon attempts to discover the identity of a middle aged man discovered dead on a metropolitan beach in South Australia in 1948. With little solid evidence of his death apart from the body itself, the man’s passing has remained a mystery covered in controversy for decades. He was found resting against a rock wall on Somerton Beach opposite a home for crippled children shortly before 6:45 am on the 1st of December in 1948. A post-mortem of the body revealed that there was no possibility that the death was natural due to severe congestion in his organs. The most likely cause of death was decided to be from poison, which in 1994 was uncovered by forensic science to be digitalis.

Among the man’s possessions were cigarettes, matches, a metal comb, chewing gum, a railway ticket to Henley Beach, a bus ticket and a tram ticket. A brown suitcase belonging to the man was later discovered at the Adelaide Railway Station. In it contained various articles of clothing, a screwdriver, a stencilling brush, a table knife, scissors and other such utensils. Authorities found the name “T. Keane” and the unknown man was temporarily identified as a local sailor by the name of Tom Keane. However, this was soon proved to be incorrect and the man’s identity has been a mystery ever since. The Somerton man has since been incorrectly identified as a missing stable hand, a worker on a steamship, and a Swedish man.

A spy theory also began circulating due to the circumstances of the Somerton man’s death. In 1947, The US Army’s Signal Intelligence Service, as part of Operation Venona, discovered that there had been top secret material leaked from Australia’s Department of External Affairs to the Soviet embassy in Canberra. Three months prior to the death of the Somerton man, on the 16th of August 1948, an overdose of digitalis was reported as the cause of death for US Assistant Treasury Secretary Harry Dexter White, who had been accused of Soviet espionage under Operation Venona. Due to the similar causes of their death, the rumours of the unknown man being a Soviet spy began to spread.

By far the most intriguing piece of evidence related to the case was a small piece of paper bearing the words “Tamam Shud”, which translated to “ended” or “finished”. This paper was soon identified as being from the last page of a book of poems called The Rubiayat by Omar Khayyam, a famous Persian poet. After a police announcement was made in search of the missing copy of The Rubiayat a local Glenelg resident came forth baring a copy that he had found in the back seat of his car. It was in the back of this copy of The Rubiayat that the Mystery Code was discovered.

The Code

The code found in the back of The Rubiayat revealed a sequence of 40 – 50 letters. It has been dismissed as unsolvable for some time due to the quality of hand writing and the available quantity of letters. The first character on the first and third line looks like an “M” or “W”, and the fifth line’s first character looks like an “I” or a “V”. The second line is crossed out (and is omitted entirely in previous cracking attempts), and there is an “X” above the “O” on the fourth line. Due to the ambiguity of some of these letters and lines, some possibly wrong assumptions could be made as to what is and isn’t a part of the code.

Professional attempts at unlocking this code were largely limited due to the lack of modern techniques and strategies, because they were carried out decades earlier. When the code was analysed by experts in the Australian Department of Defence in 1978, they made the following statements regarding the code: There are insufficient symbols to provide a pattern. The symbols could be a complex substitute code or the meaningless response to a disturbed mind. It is not possible to provide a satisfactory answer.

Last year’s code cracking project group ran a number of tests to selectively conclude which encryption method was mostly likely used. In their final report, they determined that the Somerton man’s code was not a transposition or substitution cipher. They tested the Playfair Cipher, the Vigenere Cipher and the one-time pad[2]. The group concluded that the code was most likely to be an initialism of a sentence of text and using frequency analysis, determined that the most likely intended sequence of letters in the code was:

Requirements and Specifications

The entire project can be broken into 3 main modules. These are:

- Verification of past results

- Design/utilisation of a web crawler

- Pattern matching algorithm

Each module has a set of requirements specific to its design and application with respect to the project.

Verification Requirements

Software Requirements

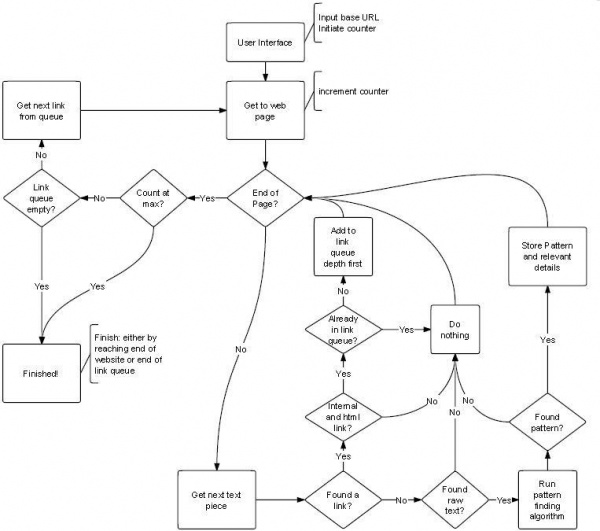

The following block diagram shows the software layout for traversing a website and filtering through raw text and storing all important findings. This method is using a breadth first traversal which is currently being revised and possibly changed to depth first. for more information see the "Web Crawler Plans" section.

Web Crawling Requirements

The web crawling device will be used to visit a website of interest and retrieve data that may contain information relevant to the project. The basic requirements of the web crawler are as follows:

- Take in a base URL from the user

- Traverse the base website

- Pass forward any useful data to the pattern matching algorithm

- Finish after traversing entire website or reaching a specified limit

The crawler will be required to detect and handle the following circumstances:

- Bad I/O

- Found a valid HTML URL

- Found a Non HTML URL

- Found a URL linking to an external site

- Found an Invalid URL

Pattern Matching Algorithm Requirements

The pattern matching code must be able to determine the difference between HTML code and raw text and data. The HTML code will be largely useless and probably repetitive in multiple sites which wouldn’t really be useful for the results we are after (in fact, if the frequency was quite high they could potentially skew our results). How the algorithm determines what is and isn’t HTML code will be very important because it will decrease the amount of processes as well as the amount of time needed to complete the methods in the algorithm.

The code must be able to switch between parsing every character or just initial letters of a word. This is mostly for added functionality, because it has been concluded that the Somerton man’s code is probably an initialism. This is done because we want our web crawler to have uses outside of this project.

Moreover, we want the code to be able to search for exact patterns as well as “similar” patterns. That is, data that has the same pattern as our given input, but with different characters (for example, if our input is searching for “ABAB” and comes across text with “XYXY”, it would be considered as a “similar” pattern and noted down). Also, the code should handle non-letter characters such as punctuation.

For every “found” pattern, the code should put the text (or sentence or phrase that the pattern was found in) and possibly URL in some sort of list or array. This is the most significant part of the algorithm because it will provide the basis of our results. From this, we can get frequency logs of certain words to better determine what the initialisms in the Somerton man’s code could possibly be.

Progress So Far

Verification of past results

In order to decide on a direction for the use of the web crawler, it was first important to look at the previous students work and make a decision on whether their conclusions are accepted.

The verification of their results was undertaken in two sections:

- Collection of random letter samples for comparison to the Summerton man code

- Analysis and Review of their code

A template for collecting random letter samples was created and used to collect 45 random letter samples from a variety of subjects. 45 was chosen as it is roughly the number of letters in the Summerton man code. As can be seen on the completed form(image to the right) details taken from each subject include the following:

- Intox. Yes/no - the Physical state of each subject was recorded to keep samples taken from intoxicated subjects separate from those that were, to the best of our knowledge, not under the influence of any substance.

- Age – each subject was required to supply their age for future use

- M/F – the sex of each subject was recorded for future use

The graphs below show the recently collected samples next to the samples collected by the previous students and the letter frequency plot of the Summerton man code.

- Change excel graphs to MATLAB graphs and add them in here (Michael)

Although the results are not identical to the previous student’s results, they are very different to the graph depicting the code from the Summerton man. This leads us to agree with the previous assumption that the code has not been produced randomly. So far there have not been enough samples from intoxicated subjects in order to produce a useful result. This is an ongoing collection and will be finalised when there are enough samples to compile a graph that can be considered useful. It was initially planned to use the age and sex data that has been collected to subdivide the results into different categories in order to conclude whether the letters in the Summerton man code are better correlated to a specific age group or sex. It was then realised that this will involve the collection of many more samples than is needed to verify the past students results and has therefore not been included in the results. Collecting more samples and splitting the data into different categories may provide useful results for future studies into the case.

Text Pattern Algorithms

The most recent revision of the pattern finding algorithm and testing text file can be found here.

The text pattern algorithm was written from scratch using java. The main purpose of the algorithm is to search through a text file and return an output saying if a word or initialism is found. This was done because we weren’t aware of what the web crawler’s were capable of using the initial functions (that is, using the web crawler without modifying any of the code).

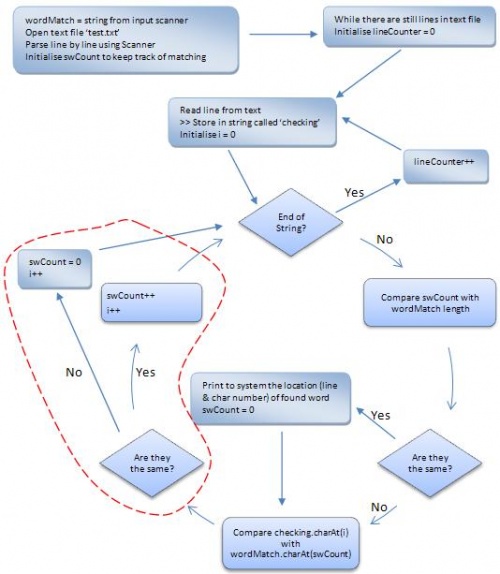

To start, a flow chart of the basic function of the algorithm was made to determine how to begin coding in the most efficient way possible. This is shown below:

To explain the function as simply as possible, the basic algorithm was designed to:

- Accept a user entered input from the command prompt and place it into a string

- Open a text file and place a line from that file into a string

- Compare the file string with the user’s string character by character, printing an output if the entire user entered string was found

- Repeat comparison process for every line until the end of the file is reached.

The algorithm utilises the scanner class from the util package as well as the File and FileReader classes from the io package. They are important because these classes allow the algorithm to open the needed text file and scan through it. They were easily found on the java API and are fairly simple to use. For this algorithm, the output would report the line and character number of the first letter of the matched word found in the text file.

To simplify the searching process, both the text file string and the user string were converted to lower case letters. It was felt that this helped improve the functionality of the algorithm because it involved fewer cases where characters would be the same letter but not the same. Since it doesn’t really matter if the characters are upper case or lower case for the results, there will really be no negative effect on the final outcome of the project.

To implement searching for initialisms, the area circled in red was modified to only change the swCount variable if the previous character in the file string was a white space. The results of the output would report the line and character number like the previous method, as well as the sentence that the initialism represents. This was done because the aim of the project is to find what the initialisms of the Somerton code could possibly represent. This seemed to work, although several errors in the output were observed when some special cases were done.

For example in the file string, if there were two white spaces in a row, the output would be incorrect (the result would print out the wrong character position and the wrong set of words that matched that initialism). Additionally, problems occurred when the input string had spaces in it as well (the method incorrectly assumed that the space represented an initialism as well). Several attempts were made to debug and fix these problems. The final solution was to create another method (to be used by the initialism finding algorithm) to remove the white spaces in the input string. Also, one more method was created to print the initialism sentence properly for every case that was thought of.

For testing purposes, a main method was created which utilises the scanner class again. For this, the method accepts a user entered input to determine which algorithm will be used for the current search (FindExact or FindInitialism) and then accepts another user inputted string that represents the word or initialism that will be searched for. It is currently unknown whether this will be used as the interface for the final web crawler, although it is likely that the final interface will have a similar function.

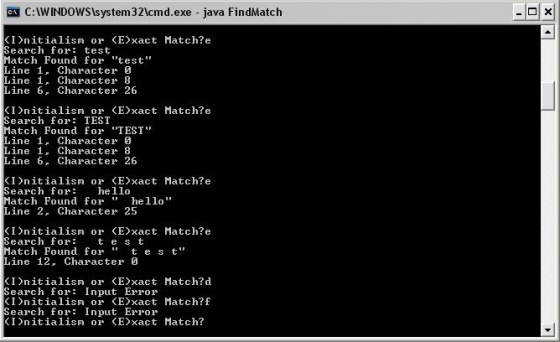

Some of the simulations and results from testing can be found below.

The image above demonstrates the FindExact method. From observing the word being searched for, and the testing text file used, it is possible to see that the algorithm works in the assumed manner. As stated earlier, the code considers upper and lower case characters the same – this is illustrated above with the searches for “TEST” and “test” returning the same thing. The method correctly determines the line and character number of the beginning of the word. Furthermore, the method determines the word “ hello” as “hello” (without the spaces at the start) and can correctly find its location in the text file.

Although the code ignores spaces in the search string, it can correctly determine the words “ t e s t” as “t e s t” with spaces in between, but not before the first non-whitespace character. Since the FindExact method can search for multiple words, it was felt that this specification was a requirement in the code. To do this, an algorithm was used to delete all of the white spaces in the user input string until a non-whitespace character was met.

Additionally, the main method returns an input error when a letter other than ‘i’ or ‘e’ is used.

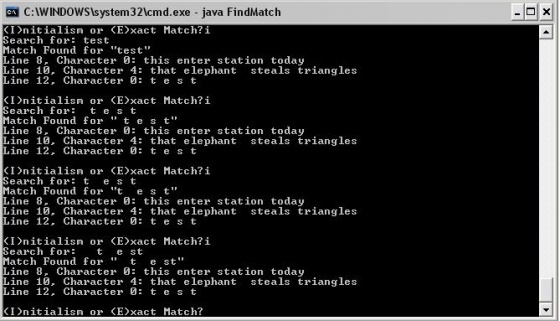

This image shows that the FindInitialism method returns the same result for every different search of the initialism test (with spaces in different places in the string). Incredible amounts of debugging and modification was done in order for this to work correctly. Extensive use of the isWhitespace() method helped to achieve a working algorithm. This method was very important because it was used to determine how many words of the initialism were currently printed as well as determining if a character was the beginning of a word or not.

The FindInitialism method is the method that will be developed for the final web crawler. It has a function specific to the final outcome for the project: to generate a list of potential sentences for an inputted initialism by reading through the source code of a web site.

Web Crawling

An open source Java based Web crawling device called Arachnid has currently been the main focus in terms of producing the web crawler. This crawler is so far proving to be quite useful for the project due to the following traits:

- Java based

- Basic example provided - allowing for reasonably steep learning curve instead of having to become familiar with a crawler from scratch

- Specific Case handling methods provided - methods for some URL cases are provided (with no handling code however it has provided a starting point to the solution)

- Highly Modifiable

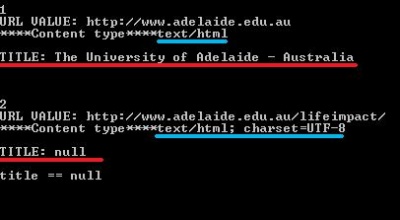

So far after modifying the supplied crawler and exploiting methods used in the supplied example the crawler is capable of the following:

- Compiling succesfully (This is a huge bonus! it allows for actual tests to take place)

- Intaking a base URL from the console

- Testing for correct protocol (We only want to look at http websites for now)

- Test for a null URL

- Test for non HTML URLs

- Test for URLs that link to external websites

- Run a handleLink()

- Iterate through the website and produce a list of URLs and their associated name found along with an incrementing counter

While using Arachnid is proving to be quite useful, there have also been some issues encountered

Approach for remaining part of the project

Web Crawler Plans

With the web crawler design there is currently two major issues to be resolved.

- Character encoding differences with HTML URLs

- Traversal method - breadth first vs depth first

plans***

Pattern Algorithm Plans

The major remaining areas of the pattern matching algorithm yet to be completed is the implementation of ignoring HTML; and the algorithm being able to search for exact and ‘similar’ initialisms as described in the Stage 1 Design. Ignoring HTML will likely be done by ignoring the characters in the text file when a “<” is encountered, and then re-start comparing characters again after a “>” is found. The method to find ‘similar’ initialisms has currently not been identified as of yet, although some ideas have been formed. The approach for doing this will likely be similar to the FindInitialism method, utilising smart and logical coding rather than a simple isWhitespace class.

Additionally, further implementation could include saving the found initialisms into another text file for inspection later. Beyond that, more testing and debugging should be done and any unforeseen errors that occur should be fixed as soon as possible. Once that is completed, the code will be ready to be integrated into the web crawler. This area of the project is almost finished and the future lies with the combined web crawler-pattern matching algorithm.

Obtaining and Using Results

sites of interest**

what we will look for**

what is the point of the results?**

Project Management

Project Alterations

As the project has progressed there have been a few things that have changed with respect to the original project plan.

It was originally planned to search the entire internet for patterns of interest. It was quickly realised that this is going to be unachievable within the time frame and resources of this project. The internet is simply too large and changing too quickly for our techniques and equiptment. For this reason the project goal has been down sized to searching through one website at a time. That is, there are now constricitons and limits to the size of the search that the software will be subjected to. The specifications now state that the software will be required to intake a starting URL and search through that website and that website only. This has given some extra challenges such as dealing with URLs that link to external websites however it will also make the project goal much more managable and realistic.

Budget & Resources

As stated when this project was started, the majority of the work related to this work is software based. The software being utilised is java based and can be readily used and accessed for free through the university's resources and on the internet. It was estimated that very little of the $500 budget would be used. Although barely any of the budget has been used, it is still available should we come to a situation where we need it (such as funding alcohol, etc to further highlight our results).

Costs so far:

- The Secrets of Codes: Understanding the World of Hidden Messages by Paul Lunde - $50

Risk Management

changes in risks? anything that has happened

Conclusion

References

See also

- Cipher Cracking 2010

- Final report 2009: Who killed the Somerton man?

- Timeline of the Taman Shud Case

- List of people connected to the Taman Shud Case

- List of facts on the Taman Shud Case that are often misreported

- Structuring the Taman Shud code cracking process

- List of facts we do know about the Somerton Man