Research Project Proposal & Progress Report 2015

Executive Summary

This project involves the design and implementation of software in order to decipher the code associated with the Somerton Man murder mystery. This document is a research proposal and progress outlining the key information required in undertaking the project in order to meet its aims, as well as a summary of the project progress thus far. The information in the research proposal includes the motivation, previous studies, aims, objectives and significance of the project. It also includes the technical background, knowledge gaps, technical challenges, specific project tasks involved, as well as project management resources. The information in the progress report includes the Outcomes, Assumptions, Testing Methods, Results, Evaluation, Preliminary Conclusions and Project Status.

Introduction

Motivation

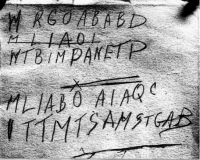

On the 1st of December, 1948, there was a dead body found at Somerton Beach, South Australia [1]. There was no evidence to show the man’s identification and the cause of death [2]. However, there were 5 lines of capital letters, with the second line struck out, that were found on a scrap of paper in the dead man’s trouser pocket [3]. A photo of the paper containing the letters can be seen in Figure 1. It was later discovered that the scrap of paper was torn from a book known as the Rubaiyat of Omar Khayyam [4]. These letters are considered vital to the case as it is speculated that they may be a code or cipher of some sort. As engineers, we have the ability to help investigators in solving the case. With that in mind, this project is being undertaken to attempt to decrypt the code in order to help solve the cold case. The South Australian Police stand to benefit from this project not only from the decoding technology developed for this case, but it also may be able to be applied to solve similar cases. Historians may be interested in gaining further historical information from this project since the case occurred during the heightened tension of the Cold War, and it is speculated that this case may be related in some way [5]. Pathologists may also be interested as the cause of death may have been an unknown or undetectable poison [6]. This project stands to benefit the wider community as well as extended family of the unknown man to provide closure to the mysterious case. Professor Derek Abbot also stands to benefit as he has been working closely with honours project students for the past seven years in an attempt to decipher the Somerton Man code.

Previous Studies/Related Work

Previous professional attempts to decipher the code were limited since they did not use modern techniques or have access to modern databases. Another limitation is that some of the characters in the code appear to be ambiguous and previous attempts made fixed assumptions on particular characters [7]. The Australian Navy’s response was that the letters were “neither a code nor a cipher” [8]. The Australian Department of Defence analysed the characters in 1978 using the computer technology available of that era and concluded: a) “There are insufficient symbols to provide a pattern” b) “The symbols could be a complex substitute code or the meaningless response to a disturbed mind” c) “It is not possible to provide a satisfactory answer” [9] Other previous studies into deciphering the code include Honours Projects at the University of Adelaide from 2009-2013. The previous work undertaken by these groups includes: multiple evolutions of letter frequency analysis of the code on a variety of base texts in a number of languages, initial letter and sentence letter probabilities, the probabilities of known cypher techniques, the likelihood of the code being an initialism of a poem, the use of various one-time pad techniques, the design and implementation of a web crawler, the analysis of text type and genre of the code’s likely plaintext, the implementation of pattern matching software into the web crawler, a 3D generated reconstruction of the bust of the Somerton Man (see Figure 2) and the analysis of mass spectrometer data taken from the Somerton Man’s hair [10] [11] [12] [13] [14]. The main conclusions that past groups have come to in their projects are: that the letters are unlikely to be random, the code is unlikely to be an initialism, it is likely that the Rubaiyat of Omar Khayyam was used as a one-time pad, the language of the code is likely to be English, the code is unlikely to be an initialism of a poem and that the Rubaiyat of Omar Khayyam was not used as a straight substitution one-time pad [15] [16] [17] [18] [19]. The analysis and extension upon specific elements of previous work that are directly related to the 2015 group’s project are discussed in the 'Method – Specific Tasks' section.

Aims and Objectives

The key aims and objectives in this project include the aim to statistically analyse the likely language of the plaintext of the code. Another aim is to design and implement software in order to try and decipher the code. The third aim is to analyse mass spectrometer isotope concentration data of the Somerton Man’s hair. Finally, the ultimate aim is to decrypt the code in order to solve the mystery, however this may be somewhat unrealistic as the code has remained uncracked for many years. Despite this, we will utilise computational techniques to attempt the decryption, and at the very least, further the past research into the case for future Honours students.

Significance

Considered “one of Australia’s most profound mysteries” at the time [20], this case still remains unsolved today. As the development of decoder technology and the related knowledge progresses, this project poses the opportunity to uncover further case evidence. The skills developed in undertaking this project are also of great significance in a broader sense, as these can be transferrable to possible future career paths. The techniques developed include: software and programming skills, information theory, probability, statistics, encryption and decryption, datamining and database trawling. The job areas and industries that these skills can be applied to are: computer security, communications, digital forensics, computational linguistics, defence, software, e-finance, e-security, telecommunications, search engines and information technology. Some possible job examples include working at: Google, ASIO, ASIS and ASD [21].

Technical Background

The technical background for the project involves the use of a number of tools including: the p-value theorem, the n-gram model and the one-time pad. Descriptions of these are as follows:

P-Value Theorem Explanation

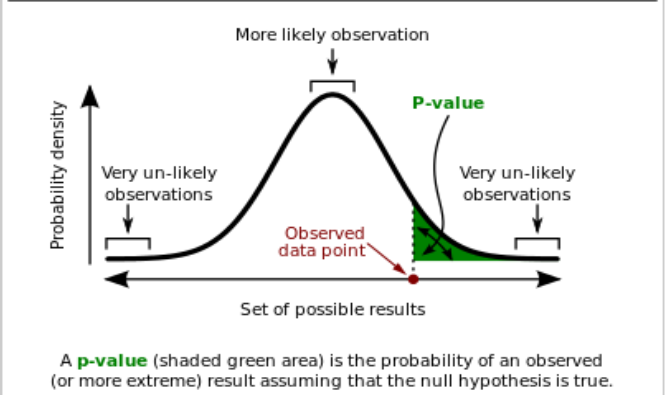

The p-value is the probability to obtain an effect equal to or more extreme than the one observed, presuming the null hypothesis of no effect is true. It gives researchers a measure of the strength of evidence against the null hypothesis.

In statistics, the p-value is used for testing a statistical hypothesis by observing sample results. P-value is an effective method to presume whether the null hypothesis of no effect is true. In this project, we will use p-value to do some simple tests to determine whether English was the language in the original message [22]

In this case, we make an assumption that English was not the language in the original message. Thus we should get a larger p-value which is higher than 0.05 in the simple test. This means the observed data point is in the range of “more likely observation”, mentioned in Figure 1 below. Oppositely, if we get a smaller p-value which is lower than 0.05 in the simple test, this means the observed data point is in the range of “very un-likely observations”. This indicates our assumption is wrong and that English was the language in the original message [23]

As the graph shows, the horizontal coordinate is the set of possible results, and the vertical coordinate is the probability density. The p-value is in the right side of observed data point, which is between the curve and horizontal coordinate [24]

N-Gram Model Explanation

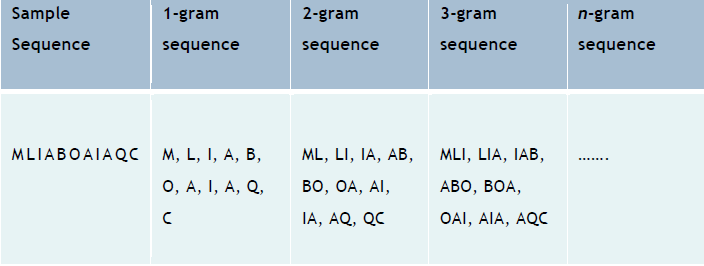

The n-gram model is a sequence of n items from a given sequence of phonemes, syllables, letters, words or base pairs. The n-grams typically are collected from articles or books. If the elements are words, n-grams may also be called shingles [25]

Size 1 of the n-gram model is called a "unigram". Size 2 of the n-gram model is called a "bigram". Size 3 of the n-gram model is called a "trigram". And so on.

An n-gram model is a type of probabilistic language model for predicting the next item. In a word, the item n is only related to item (n-1) regardless of any other items in the sequence [26].

We will use an n-gram database to find common groups of words for a variety of initialisms.

In Figure TB.2 shows below, we use one line from the Somerton Man code as a sample sequence to produce the n-gram model.

One-Time Pad Explanation

The one-time pad is a decoder technology which cannot be cracked if the correct key is used. For example, if we have a ciphertext, we need the key to decode it and get correct message [27].

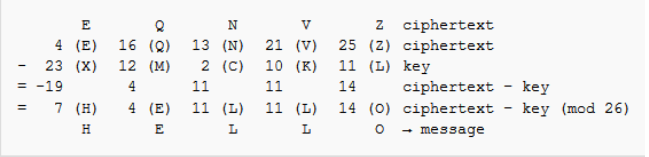

As in Figure TB.3 [28] shows above, we have the cipher text: EQNVZ. E is the fifth letter in the alphabet, so we label it as 4 (initially we label the first letter, A, as 0). Similarly, the letter Q is 16, N is 13, V is 21 and Z is 25. If we have a key which is: XMCKL, we can label X as 23, M as 12, C as 2, K as 10 and L as 11 using the same method as above. Then we use E minus X and process all cipher text elements one by one. Negative numbers are not used, so E minus X equals -19, then we add 26 and get the number 7, which represents letter H. Finally, we find the original message to be HELLO. The one-time pad technique will be used as another attempt to decipher the Somerton Man code.

Knowledge Gaps and Technical Challenges

In order to complete the specific tasks proposed within this project, the group members must develop new skills in text processing in a variety of programming languages. These languages include learning how to program in MATLAB, Java as well as native languages in Microsoft Visual Studio. The technical challenges to be faced within the project are directly associated with these knowledge gaps.

Method - Specific Tasks

Task 1: Statistical Frequency Analysis of Letters

A Critical review of the statistical frequency analysis of the letters from the 2013 group has been conducted and can be seen in the Statistical Frequency Analysis Review section of the Progress Report. It is proposed that the 2015 group is to repeat the statistical tests done by the 2013 group. Like the 2013 group, the Universal Declaration of Human Rights is to be used as the base text based on advice from Professor Abbott, but the validity of this text being used as the base text is to be statistically tested. The group is to find out how common each letter of the Somerton Man code is in each popular European language. The statistical results from this analysis will then be used on 44 letters out of pieces of text from the most likely languages. The 2013 group’s analysis will then be extended by the 2015 group by calculating p-values and implementing hypothesis testing. Like the 2013 group, the 2015 group is also to use Microsoft Excel to compute the statistical analysis and produce output graphs. The group is to run p-value tests on benchmark pieces of text from the most likely languages and see if the p-values suggest that the letters are indeed from those languages. The use of benchmark pieces of text are used to test the statistical accuracy of the method of analysis as well as the validity of the Universal Declaration of Human Rights as a base text. Once the most likely language is determined, the group is to process an additional number of benchmarks of that language and obtain a mean p-value and standard deviation of the p-values. Next, a hypothesis test can be performed based on the mean p-value and standard deviation of the p-values obtained from the benchmarks, when compared to, the p-value and standard deviation of the letters in the Somerton Man code against the base text. The null hypothesis is to be that ‘The group of letters are from another language’, and the alternative hypothesis is to be that ‘The group of letters are from the English language’. The alternative hypothesis can be altered if it is found that the most likely language from the statistical analysis is one other than English. Using this hypothesis testing method, the 2015 group is hoping to be able to more confidently determine which language the letters in the code are from.

Task 2: Web Crawler Re-design

Numerous studies from previous groups have shown that it is statistically likely for the Somerton Man code to be an initialism (see Previous Studies/Related Work section). This concept will be explored further using a technique that accesses a larger database in much shorter time than the web crawler developed by groups in previous years (see Previous Studies/Related Work section). Using an n-gram database, such as ‘Microsoft Web N-Gram Services’ or ‘Google N-Gram, rather than crawling the whole web for grams has the advantage that the crawling has already been done and all grams have been recorded. This will drastically increase the speed at which the gram combinations on the web can be found. The maximum number of grams provided by each database is five. Due to this, five letter gram groups from the code must be processed at a time, and then shifted across by one letter in the code to process the next gram group search. The use of Microsoft Web N-Gram Services is likely to be chosen due to its larger database [29] [30], better provided documentation and tutorials [31], and lower cost (see Budget Section). The improved web-crawler is to be implemented via a web application using developer tools such as Microsoft Visual Studio or Eclipse [14]. The application can be programmed in native languages including Visual Basic, Visual C#, Visual C++, Visual F# and Jscript [32]. An assumption that is to be made in order to complete this task are that the letters in the code, and thus the words in the grams, are order relevant. Another assumption is that all variants of ambiguous letters in the code are to be included. The application is to output a list of possible gams from the input letter combination. The input letters are then reduced by one, and the number ‘n’ in the gram search is also reduced by one. Once again a list of possible grams is output. This is repeated until it is deemed there are too many results for lower numbers of ‘n’ in the n-gram.

Task 3: Rubaiyat of Omar Khayyam as a One-Time Pad

This task involves the investigation that the letters have been substituted for others using a one-time pad technique. The Somerton Man code is to act as the ciphertext, the plaintext of the code is desired to be deciphered using a key and the Rubaiyat of Omar Khayyam is to act as the one-time pad. The ‘2013 Decoding Toolkit – One Time Pad’ software only used alphabetic shifts as keys [33]. The 2015 group is proposing to use an alternate technique using letter position. The key needed to decipher the code using the pad is the numerical value of the letter position within each word, with respect to the first letter of each word. Where the first letter has numerical value 0, the second letter has numerical value 1, and so on. This is to be implemented through the use of a computer program. It is likely that the java language will be used since this is the language that all previous groups have implemented for their text processing and decryption (see Previous Studies/Related Work section). Some of the base code such as reading in text files may be able to be analysed and reused from the 2013 group’s ‘Decoding Toolkit – One Time Pad’ in parts of the 2015 group’s program. The main assumption to be made to complete this task is that the letters in the code are order relevant, in order to produce a meaningful output. The direct substitution of the letters in the pad will be used. Firstly, the first letter of the code is to be used. Assuming it is an M, the program will search the Rubaiyat from beginning to end, until it finds the first word that begins with M. This will then be decoded to the second letter in the same word. This process is then repeated for the second letter in the code, being R. It finds the first word in the Rubaiyat that starts with R, and decodes it to the second letter in that word. This method is then repeated until there are too few words long enough to decode all of the letters. After this point, one final decode is to be attempted, where rather than using letter position, the last letter of each word will be used. The output of the software is to be possible words or phrases made up of letter substitutions in place of the letters in the Somerton Man code. A verification method is to be used to test the code to be working effectively. One group member will encode a message using this method, and the other will attempt to decode it using the software. If the decoding is successful, the group is able to verify that the software is indeed working correctly.

Task 4: Mass Spectrometer Data Analysis

The final task for the group is the analysis of the mass spectrometry data of the Somerton Man’s hair. This is to be undertaken if time permits, once all three of the other tasks have been completed. The hair was obtained from the plaster cast bust made of the Somerton Man, taken after his autopsy. The spectral analysis was carried out using laser ablation of the hair to measure the concentration of isotopes found in the hair. The results can be used to find out the environment the Somerton Man lived in in the lead up to his death. The 2013 group graphed the concentration of each isotope found in the hair versus the time up until his death using measurements made along the length of the hair. Additional data from a separate hair has since been taken using the mass spectrometer on a separate occasion since the 2013 group’s analysis. On this occasion, data on the concentration of isotopes in the plaster were also taken. This data could be used by the 2015 group to not only crosscheck the data between separate hairs, but also to crosscheck the isotopes in the plaster versus the hair, to see if isotopes from the plaster may have diffused into the hair [34].

Project Management - Planning and Feasibility

Work Breakdown/Deliverables

The workload for this project has been broken down into its main tasks. These can be seen in list form in the project Gant chart (see Timeline section). The key deliverables are represented as milestones on the project Gantt chart (see Timeline section). The dependencies of the tasks and deliverables can be seen in the Gantt chart as black arrows, these are as follows: The research proposal and progress report have dependence on the draft research proposal, which has dependence on the proposal seminar. Of the specific project tasks, task 1 is to be completed first, tasks 2 and 3 are to be completed in parallel, and task 4 has dependence on the first three tasks. The final seminar presentation, project exhibition poster, final performance, dump of final work and Youtube video are all dependent on the completion of the specific project tasks. The Final Report/Honours Thesis is to be completed in parallel with the rest of the work from the Research Proposal and Progress Report hand-up, onwards.

Timeline

The timeline for this project was created in the form of a Gantt Chart. This can be seen in Figure 3.

Task Allocation

The workload for the tasks within this project has been allocated based on the strengths and skillset of each member, as well as the estimated time taken and complexity of each task. A table of the project task allocation can be seen in Figure 4. The key allocations are that Nicholas Gencarelli will be undertaking the tasks of project management, web crawler re-design and the project exhibition poster. Jikai Yang will be undertaking the tasks of the use of the Rubaiyat of Omar Khayyam as a One-time Pad, and the project Youtube video. The allocations are subject to change throughout the project life cycle should the need arise.

Management Strategy

A number of management strategies have been adopted for use throughout the project. One of which is frequent face-to-face contact through regular meetings every 2-3 weeks. Another is regular communication between group members via text message and email. Collaboration is another strategy that is useful, if one member requires assistance on a particular task, the other is able to step in and help. This can be achieved through the use of flexible task allocation. The use of collaborative software including Google Drive for working together on project documents, and Git Hub repository for working together on software code. The project Wiki page is updated in real time including the weekly progress section to monitor and review work completed by each member every week, as well as plan tasks for the upcoming week. Finally, the use of a Gantt chart as a management strategy incorporates clearly identified task and goals and establishes a critical path through use of task dependencies.

Budget

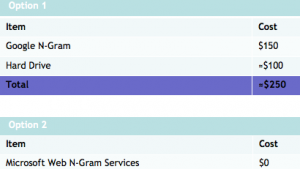

The project budget will depend on the n-gram database chosen to be used for the web crawler in task 2. A variety of options have been considered and the main two largest databases are Microsoft Web N-Gram Services, and Google N-Gram [35] [36]. Tables highlighting the key costs of each option can be found in Figure 5. The Microsoft alternative is free to use for academic purposes after applying for a user token, and is stored for free on Microsoft’s web server [37]. The Google alternative has a cost of 150 dollars for a student license [15], plus an additional cost of approximately 100 dollars for a hard drive upon which to store the database. One reason the Microsoft Web Gram Services is likely to be chosen due to its larger database [38] [39]. It also has the advantage of being stored on the web, so there is no need for a hard drive to store the database on. It has better provided documentation and tutorials [40], as well as lower cost.

Risk Analysis

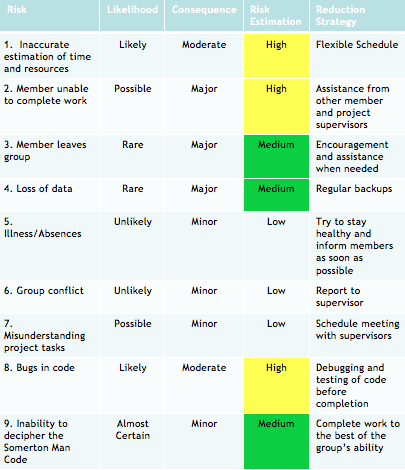

A risk assessment has been undertaken for this project to include risk identification, analysis, evaluation and treatment strategies using the Adelaide University risk matrix procedure [41]. This can be seen in Figure 6. One of the risks with a high risk rating is the inaccurate estimation of time and resources. This is to be reduced through the use of a flexible schedule. Another high rated risk is if a member is unable to complete work. This is to be reduced through seeking assistance from the other project member and the project supervisors. The third high risk is the discovery of bugs in code. This risk is difficult to avoid so debugging and testing of code before completion will be used to reduce this risk.

Nicholas Gencarelli's Progress Report

Outcomes

The general outcomes completed this semester include the successful completion of background research and literature review, Project Work Breakdown Structure, Project Gantt Chart, Task Allocation, Budget, Risk Assessment, Proposal Seminar, and Draft Research Proposal. The project specific outcomes include the successful completion of Task 1: Statistical Frequency Analysis of Letters.

Task 1 Progress Report

2013 Statistical Frequency Analysis Review

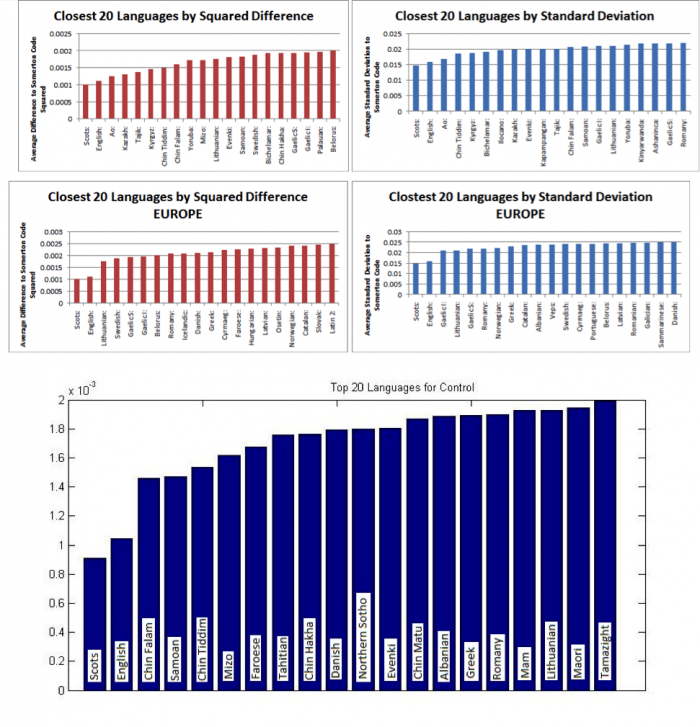

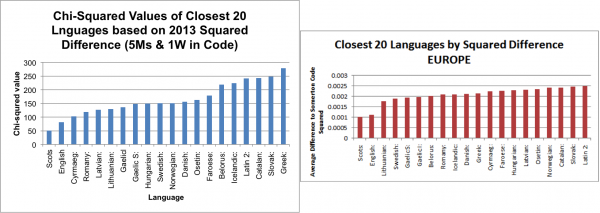

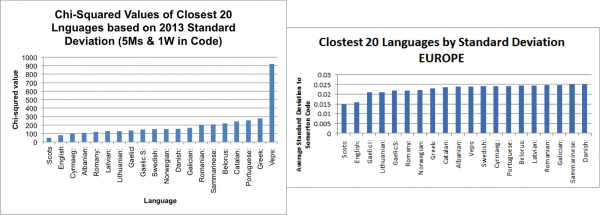

A critical review of the statistical frequency analysis of the letters from the 2013 group has been conducted to determine possible extensions to be undertaken by the 2015 group. The 2013 group based their statistical frequency analysis of letters on the translations and transliterations of the Universal Declaration of Human Rights. This document was chosen since it is translated in over 400 languages. The 2015 group is to analyse this choice of base document using statistical techniques. 266 languages were analysed, since many of the translations were not text files, but paper scans. This seems like a reasonable omission due to the time constraints of the project and the common European languages are all in text form. The analysis included accented letters normalised to their ‘parent’ characters, for example, considering 'ǎ' as 'a'. The languages were then analysed using a variety of combinations of the ambiguous letters within the code. The 2013 group’s statistical analysis was then refined to the top 20 closest European languages. These included more uncommon European languages such as Scots and Vepsian. The graphs of the results from the 2013 group’s analysis can be seen in Figure 7. The most likely language of the Somerton Man code and control text was found to be Scots. This is unlikely since it is not a common European language as it is a Scottish dialect [42]. Due to this, Scots, and other less common European languages will be omitted in the 2015 group’s statistical analysis. Instead, only the most common European languages will be included in order to further refine the statistical process. Despite this, a conclusion was made that the most likely language was English since Scots and English shared very similar initial letters. In order to test this conclusion, the 2013 group used the English translation of the Rubaiyat of Omar Khayyam as an English control text. The first 44 words from the text were used since this is the same number as the number of letters in the Somerton Man code. The results showed, once again, that Scots was the most likely language, followed by English (see Figure 7). Analysing this choice of control text, the choice was made by the 2013 group as a matter of convenience. This may not have been the best decision to make as the Rubaiyat of Omar Khayyam was originally written in Persian, and has since been translated into English. The use of a translated text as the English control may have skewed the statistical results of the analysis of the control text as the translation may use uncommon words or expressions. Another reason for the suspected use of uncommon words or expressions is the fact that the text is a book made up of four line poems, meaning that it may not accurately represent commonly used words or letters in such a small sample size of 44 words. The 2015 group is to attempt to counteract this possible skew by using 44 words out of a popular novel, originally written in the most likely language.

Assumptions

A number of initial assumptions were made for this task. One assumption was that the language used as the basis for the code is a European Language. This assumption was made based on the European appearance of the Somerton Man, results from previous groups concluding that the most likely language is English (see Previous Studies/Related Work section), and advice from Professor Abbott. Another assumption that was made is that the code is an initialism, meaning that it is made up of letters that represent the first letters of an ordered series of words. This assumption has also been made based on conclusions made by previous groups (see Previous Studies/Related Work section) and advice from Professor Abbott. Due to this assumption, only the first letters of words in each language in the base text will be considered for analysis, and not every letter. This assumption has been made since in Task 2: The Web Crawler Re-design, the crawler will search for English grams using each letter in the Somerton Man code as the first letter of each word in the gram phrase. In addition, in Task 3: The Rubaiyat of Omar Khayyam as a One-time Pad, the deciphering technique used relies on the initial letters of words in the pad text. Further assumptions were the inclusion of all accented letters in base texts and all combinations of ambiguous letters in the code. These assumptions were made to increase the robustness of the analysis. A final assumption that was made was the choice not to include the crossed out line of letters in the code. This was made since from observation, one can see that this line is very similar to the third line and is most likely to be a mistake.

Text Preparation

The 2013 group’s frequency analysis code was obtained. Initially, some background research in Java code was undertaken for use in compiling and editing the code. The text files used by the 2013 group from the Universal Declaration of Human Right for the statistical frequency analysis were also obtained. A selection of these were then processed in Java, using a modified version of the 2013 group’s code, and the results were tabulated in an excel spread sheet including language, numerical frequency of each letter in each language and the proportional frequency based on the total number of initial letters in each language. These results were then used to test our statistical method of analysis in Microsoft Excel, MATLAB and by hand.

A spread sheet containing the letter frequency data from the Universal Declaration of Human Rights was obtained from the 2013 group. These results were verified by processing the original text files through the initial letter frequency java code. This data spread sheet was used for statistical calculations for comparison of the letter frequency in the code versus each language in the Universal Declaration of Human Rights.

Normal Distribution P-Value Testing

Firstly, a group of test texts were prepared, using 3 groups of 44 letters from the English, French and German languages from the Universal Declaration of Human Rights, as well as the Somerton Man Code. The test texts were analysed using ANOVA in Excel. Once these results were obtained, the p-value calculation method used was tested using various pieces of software. A test case with known p-value result from the Engineering Maths IIA notes was run through ANOVA in Microsoft Excel, as well as using built-in MATLAB functions found in Engineering Maths IIA notes, and by hand. All of these methods produced the same p-value using the test case with known p-value and so the ANOVA method was verified to be functioning correctly.

Results

ANOVA One Way analysis was used in Microsoft Excel to compare letter frequency between texts, as well as ANOVA Two Way Analysis of Variance, where Factor 1 was the letter, Factor 2 was the text and the response was the letter frequency. Both of these methods did not produce valid p-values as they used the comparison of total means and variances. Paired data analysis using a paired sample t-test was researched and attempted based on the Engineering Maths IIA Notes. This was computed using MATLAB, but also produced unusable p-values since the method uses mean and standard deviation of the differences between samples to calculate the p-value, thus letter and text type data is lost in the process and so is not applicable. The p-values calculated using these methods fluctuated depending on the type of data used. If the raw number of letter frequencies for the sample text or code (44 letters) versus the Universal Declaration of Human Rights reference text (1000-2000 letters depending on language) was used, then the p-value became very small since the total means of each text were vastly different. Whereas, if the frequency of each letter as a percentage of the total number of letters in each text were used, this gave a p-value of 1 since the means became the same. Thus this method of mean comparison did not work for letter frequency analysis.

Evaluation

All of these methods did not compare individual data entries from one group to their corresponding entry in another group. Instead they used the sample size, sum, mean, and variance of each group to compare to the other group. Because of this, this method could not be used when comparing letter frequency between languages. For example the frequency of the letter A in one text, must be compared with the frequency of the letter A in another text, rather than the frequency of all letters in one text being compared to the frequency of all letters in another text.

Non-Parametric Testing

Due to the lack of usability of the normally distributed statistical methods, a number of non-parametric tests were researched including the Kolmogrov-Smirnov Test, Mann-Whitney U Test and Chi-Squared test. These 3 tests were researched and considered but ultimately the chi-squared test was chosen based on a cryptography reference found that uses the chi-squared test to compare a code to a particular language [43], as well as advice from supervisor Dr Matthew Berryman. An extension for future Honours groups could be to attempt the analysis with other non-parametric tests such as the Kolmogrov-Smirnov Test or Mann-Whitney U Test. The base text chosen for testing was the full English translation of the Universal Declaration of Human Rights. The sample texts chosen were the first 44 letters from a the novel Tess of the d’Urbervilles by Thomas Hardy as an English benchmark [44], as well as the first 44 letters from the German, French and Zapoteco translations of the Universal Declaration of Human Rights.

Initial Results, Evaluations

Initially, a result was unable to be computed for the Chi-Squared value or p-value using the Chi-Squared test method. It was soon discovered that in the calculation of the Chi-Squared value, if the frequency of a letter in the reference text (A particular language from the Declaration of Human Rights) was 0, this caused the denominator of the Chi-Squared value equation to be 0 and thus the equation became invalid. An assumption in the method of the Chi-Squared test was made in an attempt to rectify the issue. The count for letters in the reference text that had 0, were altered to 1x. This caused the result to very large Chi-Squared values (in the order of 1010) and very small p-values (approaching 0). This issue was solved by making a different assumption. The letters in the reference text with frequency 0 were amended to have frequency 1, a small number chosen to be the closest integer value to 0 in the hope that it would not have significant enough effect on the results to cause a skew in the data. This assumption was made since the sample size for the reference text was too small, and so not every letter appeared at least once. Ideally compiled statistics on a much larger sample size would have been used, but these were too difficult to obtain for all languages in the analysis. An option for a future group would be to use an alternate reference text of greater length in each language to provide a larger sample size and allow for every letter to appear at least once. The Chi-Squared Test resulted in large Chi-Squared values that were brought about by a small text size sample of 44 letters. This effectively caused the p-value results to be very small numbers and therefore were unable to be used as a means of comparison and caused the null hypothesis to be rejected in every case. However, this test could still be used as a measure of similarity since the Chi-Squared Values for each text comparison can be compared based on the fact that the smaller the Chi-Squared value, the more similar the two texts are. Thus, despite being unable to obtain valid p-values and perform hypothesis testing, the texts could still be ranked based on the Chi-Squared value.

Modified Reference Text Frequency Comparison (1)

The English initial letter frequency calculated from a subset of Project Gutenberg [19] was compared to the initial letter frequency results calculated from the English Universal Declaration of Human Rights. The Chi-Squared values for both the original and modified versions of the English Declaration were compared and the results can be seen in Figure 7. This test served two purposes. It tested the assumption to increase the count of 0 frequency letters to 1, as well as testing the validity of the Universal Declaration of Human Rights as a reference text.

Results

_

_

_

_

_

_

_

_

_

_

_

_

_

Evaluation and Justification

The results showed that the English Declaration is a reasonable reference text to use with a Chi-Squared value of 7733. This number could be reduced by using a reference text with a larger sample size. Despite this, the results show the difference between the Modified English Declaration Statistics and Original English Declaration Statistics when compared to the Internet statistics. There was found to be a 0.27% difference between the Chi-Squared values of the modified and original reference text. From this a conclusion was made that the assumption of the modified reference text could be used without a significant impact on the results of the rest of the analysis. The validity of other translations of the Universal Declaration of Human rights being used as a reference text could have been tested if time and resources had permitted, since statistical data for all other languages was difficult to find. This could be a possible task for a future group. Another possible test for future groups could be to try using an expected value of 1x10-3 rather than a count of 1 for the reference text.

Modified Reference Text Frequency Comparison (2)

A test of samples from three European languages: English, French and German, one non-European language: Zapoteco, as well as the Somerton Man Code were analysed using the chi-squared test to verify the method.

Results

_

_

_

_

_

_

_

_

_

_

_

_

_

_

Evaluation and Justification

The use of samples from texts of known languages was used to test that the method was able to correctly differentiate between languages that are closer to English before applying it to compare the code to various languages. This test was another way to test the assumption of altering the frequencies of letters with 0 count in the reference text to 1. The results show that the English benchmark (Thomas Hardy sample) and the Somerton Man Code are the closest texts to the English Declaration, followed by French, German and Zapoteco. The results also show that by using the modified English Declaration, the Chi-Squared values become much closer to the values generated by using the Project Gutenberg English statistics [45]. These results are both desirable as they show that the modified English declaration is a closer match to using statistics with a larger sample size, as well as that the Chi-Squared method appears to be differentiating between closest languages correctly.

Top 20 European Language Comparison

The top 20 closest European languages by squared difference and standard deviation from the 2013 Honours group were then run through the Chi-Squared test against the Somerton Man code as in the original police report (5 Ms and 1 W). This test was run as a method of comparison to test how similar our results were to the 2013 group’s results.

Results

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

Evaluation

The results show that the two closest languages to the Somerton Man code are Scots, followed by English in all cases. The main conclusion to draw from the results of this comparison was that the Chi-Squared method appeared to be functioning correctly, and so the 2015 group could now further extend the analysis.

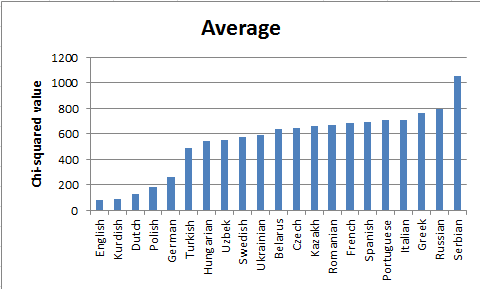

Top 20 European Languages based on Estimated Number of Native Speakers

In extension to the 2013 group’s work, the 2015 group conducted a Chi-Squared test of the Somerton Man code against the top 20 European languages based on the estimated number of native speakers [46]. This test included all versions of the Somerton Man code including the versions with 6 Ms, 6 Ws and the police report code (5 Ms and 1 W).

Results

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

Evaluation

The results show that for 2 of the 3 code versions, English was the closest language to the Somerton Man code. Kurdish, a language spoken in some parts of Turkey, was found to be the closest language to the code version with 6 Ms. English was also found to be the closest language to the code as in the original police report.

Top 20 European Languages based on Estimated Number of Native Speakers against Thomas Hardy Sample

A comparison of the English control text (a 44 letter sample of Thomas Hardy’s Tess of the d’Urbervilles) against the top 20 European Languages based on Estimated Number of Native Speakers was conducted in order to test the ability of the method being able to identify an English sample text among the top 20 European languages.

Results

_

_

_

_

_

_

_

_

_

_

_

_

_

_

_

Evaluation

The results of this test show that English was the closest text to the English sample text. This result is desirable as it successfully verified the ability of the Chi-Squared test to distinguish a 44 letter English sample out of the top 20 European languages, and can be used to back up the results obtained from comparing the same 20 languages against the Somerton Man code.

Evaluation of Task 1 Method

The original proposal suggested that the group repeat the statistical analysis from the 2013 group and use benchmark texts to statistically assess the validity of the method as well as the Universal Declaration of Human Rights as a base text. The group was then to extend the analysis by calculating the p-values for the Somerton Man code when compared to the most common European languages and perform hypothesis testing based on the results. The group was also to use benchmark texts to test the statistical accuracy of the method as well as the validity of the Universal Declaration of Human Rights as a base text. The 2015 group’s statistical analysis has achieved almost all of its proposed goals. A slight diverge from the initially proposed method was decided upon once it was found that p-values useful for comparison or hypothesis testing were unable to be obtained using any attempted statistical method. Instead, the texts were ranked using their calculated Chi-Value. All assumptions outlined in the proposal were followed, with the addition of the modification of the frequency data to account for the small sample size of the base text.

Preliminary Conclusions

The results of all of the Chi-Squared testing lead to the conclusion that we can now say more confidently than ever that English was the most likely language from which the Somerton Man code was written assuming it is an initialism.

Project Status

The 2015 Honours group’s project is currently on schedule as can be seen in the Gantt chart in Appendix G. All deliverables thus far have been completed on time and to a high standard. The project is also within budget (Appendix I), as no unexpected project expenses have arisen. Since the project is running on schedule, the project plan and Gantt chart do not need to be amended at this stage. The tasks that are still to come are outlined in the project Gantt chart. The upcoming general tasks include the preparation and completion of the Final Seminar, Exhibition Poster, Project Exhibition, Honours Thesis and Project Youtube Video. The upcoming project specific tasks include Task 2: Web Crawler Re-design, Task 3: Rubaiyat of Omar Khayyam as One-Time Pad and the optional Task 4: Mass Spectrometer Data Analysis if the project is to remain on schedule throughout Semester 2.

Jikai Yang's Progress Report

Outcomes

In 2015 semester 1, we finish our project task 1 which is statistical frequency analysis of letters. We have initial letter frequency test for English, French and German which is from the Universal Declaration of Human Rights. I use the initial letter frequency text files for each language which is provided by Nick and use a statistic tool in the Microsoft excel called Anova-single factor to compare with letter frequency of Somerton Code. I have done the calculations for English statistics (initial letter frequency for English from Wikipedia) against Somerton code, English declaration against code, English declaration against itself, English declaration against English statistics, French declaration against code, French declaration against English statistics, German declaration against code and German declaration against English statistics. After these calculations, I find the p-value for all tests are very large, even for French and German declaration against English statistics. Because there is large difference between French, German and English, the p-value for these tests should smaller than 0.05 which is shows big difference between 2 languages. We discussed the result with Professor Abbott, He suggested us to use another method to do the Anova, because excel may make a mistake. After the meeting, I used mathematic method to manually calculate the p-value between text files and also use matlab to calculate the p-value. The calculation results from matlab and manually calculated by mathematic method are same with excel did. So we think the calculations from excel is correct. According to the strange p-value, we sent all the calculations we did by using Anova to our second supervisor Dr Matthew for suggestions. Dr Matthew said our calculations are mostly correct, and the reason we get strange p-value is the letter frequencies do not obey the normal distribution. Anova is better used for the data that obey normal distribution. Because our letter frequencies are far from normal, we should use the non-parametric method to calculate the p-value for letter frequencies. Dr Matthew advised us to use Chi-squared test which is one of the method in non-parametric test to analyse the data. For the Chi-squared test, I use the top 20 European languages from the Universal Declaration of Human Rights which has low squared difference and low standard deviations to against Somerton code. I calculate chi-squared value between the European language and the Somerton code for each letter, and add all chi-squared value of each letter together. The total chi-squared value is means the difference between two languages. Also I use the top 21 European Languages based on number of native speakers from Wikipedia to against Somerton code. The languages text files are also from the Universal Declaration of Human Rights. My partner Nick also uses popular European languages to against Thomas Hardy to calculate the chi-squared value.

Justification

For the Anova-single factor test: The 2013 groups did the squared difference and standard deviations for 266 languages which is from the Universal Declaration of Human Rights against Somerton code. In statistics, squared difference and standard deviations are not strong evidences to prove the results are correct. So we have to use stronger method to shows the relationships between languages and Somerton code. Professor Abbott advised us to calculate p-value and implement hypothesis tests. We use Analysis of variance (Anova) to analyse the differences between languages and Somerton code. Anova is a statistic models and it aims to analyse differences between two groups, it will use the means and variance between two groups and calculate their statistical significance [47]. We choose single factor for test because there is only one factor which is letter frequency between it. After doing Anova-single factor in Microsoft excel, if we get statistical significant value which is the p-value between two groups, and the p-value is smaller than 0.05, it will indicate there is a large difference between two groups, otherwise, there is no difference between two groups.

For the Chi-squared test: AS poor result of p-value for the letter frequency test, we got a suggestion by Dr Matthew. He said our letter frequency distribution does not obey normal distribution and Anova is not a good statistic method to amylase data which is not following normal distribution. He also advised us to use non-parametric method for letter frequency test. Chi-squared test is a statistical hypothesis test that data do not have to obey normal distribution and it is able to crake codes [48]. We are able to calculate the chi-squared value for each letter in languages and Somerton code, and add them to get the total chi-squared value. If the total chi-squared value between one language and Somerton code is very small, it will indicate the Somerton code is very similar to this language.

Evaluation Results

Assumptions

Because the letter M and W in the Somerton code are very similar. Our group make 3 assumptions for this case. First assumption is All 6 M OR W letters in the code are M. Second assumption is All 6 M OR W letters in the code are W. Third assumption is All 6 M OR W letters in the code are 5M and 1W. The last assumption we make as it appears in the original police report from 1949.

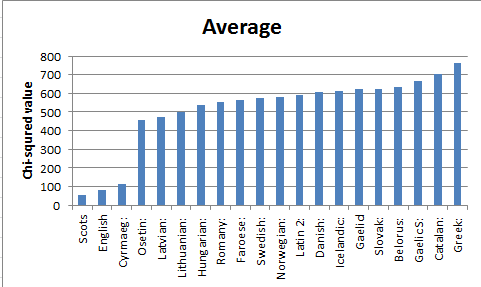

Chi-squared value of top 20 European languages from Declaration

For the chi-squared test, we use formula (observed-expected)^2/expected [49]. In the formula, “observed” in our test represent how many times that a letter appears in Somerton code, “expected” value in our test means the total number of letters in Somerton code times the frequency of each letter in languages from Declaration of Human Rights. We face a big problem in our calculation. Some letters frequency in languages from Declaration of Human Rights is 0, and it will result expected value becomes 0. As expected value in the formula is denominator, it cannot be 0. So we discuss it with Dr Matthew and try to use a very small number like 1E-6 to instead of 0. After that we face a problem again. For example, if letter Q appeared once in the Somerton code but 0 in languages, we will get a very large number of chi-squared values from using formula. Under this situation, we consider that the number of letter appeared in the language should be an integer. So we use 1 which is closest integer to 0 to instead of 0. It will not affect our result because the total number of letters for each language is over thousand. For the p-value of chi-squared tests, we can find it according to the chi-square distribution table [50]. In our case, p-value is small because the Somerton code is not a really language. But we can still use chi-squared value to compare difference between languages and Somerton code. As the bar charts below show the average chi-squared value for three assumptions we make before. The Scots and English have lower chi-squared value than other European languages. We compared our result with 2013 groups, both of us get Scots and English are most likely language which is Somerton code could be.

The bar chart below show the average chi-squared value for top 20 European langueges which has lower squared difference in Declaration of Human Rights.

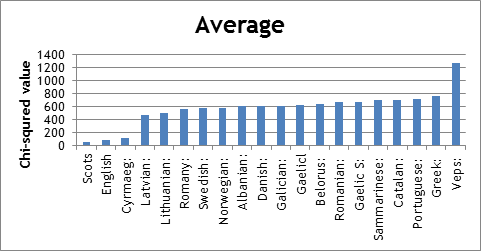

The bar chart below show the average chi-squared value for top 20 European langueges which has lower standard deviation in Declaration of Human Rights.

As the conclusion was the same as our expectation before, the most likely language is English if the letters in the Somerton code were the initial letters of words. Although the Scots shows lowest chi-squared value in all assumptions and in average. We consider that Scots and English are very similar and English is more popular language used in the world than Scots. We define the Somerton code is most likely be English.

Chi-squared value for top 21 European languages based on number of native speakers

I also calculate the chi-squared value for top 21 European languages based on number of native speakers [51]. We still use three assumptions for letter M and W as before. The bar chart below shows the average chi-squared for each language.

In the conclusion, English still has lowest chi-squared value. It means English is the most likely language in the top 21 European languages based on number of native speakers.

Project Status

Based on our Gantt chart, we finished task 1 on time. Nick will start task 2 and I will start task 3 immediately. According to Dr Matthews’s suggestion, we will do the chi-squared calculations by using 1E-3 to instead of 0 for the letter frequency to improve our task 1.

References

- ↑ The News. (1948, December 1). Dead Man Found Lying on Somerton Beach [online]. Available: http://trove.nla.gov.au/ndp/del/article/129897161

- ↑ The News. (1948, December 1). Dead Man Found Lying on Somerton Beach [online]. Available: http://trove.nla.gov.au/ndp/del/article/129897161

- ↑ The Advertiser. (2005, March 9). Death riddle of a man with no name [online]. Available: http://www.eleceng.adelaide.edu.au/personal/dabbott/tamanshud/advertiser_mar2005.pdf

- ↑ The Advertiser. (1949, June 9). Cryptic Note on Body [online]. Available: http://trove.nla.gov.au/ndp/del/article/36371152

- ↑ Hub Pages Author. (2014, August 30). The Body on the Beach: The Somerton Man - Taman Shud Case [online]. Available: http://brokenmeadows.hubpages.com/hub/The-Mystery-of-the-Somerton-Man-Taman-Shud-Case

- ↑ Cleland. (1949). Coroner's Inquest [online]. Available: http://trove.nla.gov.au/ndp/del/article/130195091

- ↑ A. Turnbull and D. Bihari. (2009). Final Report 2009: Who killed the Somerton man? [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_report_2009:_Who_killed_the_Somerton_man%3F

- ↑ Hub Pages Author. (2014, August 30). The Body on the Beach: The Somerton Man - Taman Shud Case [online]. Available: http://brokenmeadows.hubpages.com/hub/The-Mystery-of-the-Somerton-Man-Taman-Shud-Case

- ↑ YouTube ABC. Somerton Beach Mystery 1978 [online]. Available: https://www.youtube.com/watch?v=ieczsZRQnu8

- ↑ A. Turnbull and D. Bihari. (2009). Final Report 2009: Who killed the Somerton man? [online]. Available:

- ↑ K. Ramirez and L-V. Michael. (2010). Final Report 2010 [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_Report_2010

- ↑ S. Maxwell and P. Johnson. (2011). Final Report 2011 [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_Report_2011

- ↑ A. Duffy and T. Stratfold. (2012). Final Report 2012 [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_Report_2012

- ↑ L. Griffith and P. Varsos. (2013). Semester B Final Report 2013 – Cipher Cracking [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Semester_B_Final_Report_2013_-_Cipher_cracking

- ↑ A. Turnbull and D. Bihari. (2009). Final Report 2009: Who killed the Somerton man? [online]. Available:

- ↑ K. Ramirez and L-V. Michael. (2010). Final Report 2010 [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_Report_2010

- ↑ S. Maxwell and P. Johnson. (2011). Final Report 2011 [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_Report_2011

- ↑ A. Duffy and T. Stratfold. (2012). Final Report 2012 [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_Report_2012

- ↑ L. Griffith and P. Varsos. (2013). Semester B Final Report 2013 – Cipher Cracking [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Semester_B_Final_Report_2013_-_Cipher_cracking

- ↑ The Advertiser. (1949, June 10). Tamam Shud [online]. Available: http://trove.nla.gov.au/ndp/del/article/36371416

- ↑ N. Gencarelli and J. K. Yang. (2015, March 15). Cipher Cracking 2015 [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Cipher_Cracking_2015

- ↑ B. David et al., “P Value and the Theory of Hypothesis Testing: An Explanation for New Researchers,” Clinical Orthopaedics and Related Research®, Vol.468 (3), pp.885-892 2010.

- ↑ G G. L et al., “What is the Value of a p Value?,” The Annals of Thoracic Surgery, Vol.87(5), pp.1337-1343 2009.

- ↑ No Author.p-value [online]. Available: http://en.wikipedia.org/wiki/Pvalue# cite_note-nature506-1.

- ↑ A. Z Broder et al., “Syntactic clustering of the web”. Computer Networks and ISDN Systems 29 (8), pp.1157–1166.

- ↑ No Author. Video Lectures [online]. Available: https://class.coursera.org/nlp/lecture/17

- ↑ S.M. Bellovin. (2011, July 12). Frank Miller: Inventor of the One-Time Pad [online]. Available: http://www.tandfonline.com.proxy.library.adelaide.edu.au/doi/full/10.1080/0161 1194.2011.583711#abstract

- ↑ No Author. One-time pad [online]. Available: http://enc.slider.com/Enc/OneTimePads

- ↑ C. X. Zhai et al. (2010, July 19-23). Web N-gram Workshop [online]. Available: http://research.microsoft.com/en-us/events/webngram/sigir2010web_ngram_workshop_proceedings.pdf

- ↑ T.Brants and A.Franz. (2006). Web 1T 5-gram Version 1 [online]. Available: https://catalog.ldc.upenn.edu/LDC2006T13

- ↑ T.Brants and A.Franz. (2006). Web 1T 5-gram Version 1 [online]. Available: https://catalog.ldc.upenn.edu/LDC2006T13

- ↑ No Author. Visual Studio Languages [online]. Available: https://msdn.microsoft.com/en-us/library/vstudio/ee822860%28v=vs.100%29.aspx

- ↑ L. Griffith and P. Varsos. (2013). Semester B Final Report 2013 – Cipher Cracking [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Semester_B_Final_Report_2013_-_Cipher_cracking

- ↑ L. Griffith and P. Varsos. (2013). Semester B Final Report 2013 – Cipher Cracking [online]. Available: https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Semester_B_Final_Report_2013_-_Cipher_cracking

- ↑ C. X. Zhai et al. (2010, July 19-23). Web N-gram Workshop [online]. Available: http://research.microsoft.com/en-us/events/webngram/sigir2010web_ngram_workshop_proceedings.pdf

- ↑ T.Brants and A.Franz. (2006). Web 1T 5-gram Version 1 [online]. Available: https://catalog.ldc.upenn.edu/LDC2006T13

- ↑ J. Fettes. (2013). Professor’s 15-year search for answers seeks to crack the secret code to the death of the ‘Somerton man’ found on an Adelaide Beach [online]. Available: http://www.heraldsun.com.au/news/law-order/portrait-may-hold-key-to-somerton-man-beach-mystery/story-fni0ffnk-1226674957043

- ↑ C. X. Zhai et al. (2010, July 19-23). Web N-gram Workshop [online]. Available: http://research.microsoft.com/en-us/events/webngram/sigir2010web_ngram_workshop_proceedings.pdf

- ↑ T.Brants and A.Franz. (2006). Web 1T 5-gram Version 1 [online]. Available: https://catalog.ldc.upenn.edu/LDC2006T13

- ↑ No Author. Microsoft Web N-Gram Service Quick Start [online]. Available: http://weblm.research.microsoft.com/info/QuickStart.htm

- ↑ No Author. RISK MANAGEMENT HANDBOOK [online]. Available: http://www.adelaide.edu.au/legalandrisk/docs/resources/Risk_Management_Handbook.pdf

- ↑ S. L. Center. (2015). What is Scots? [online]. Available: http://www.scotslanguage.com/What_is_Scots%3F_uid2/What_is_Scots_%3F

- ↑ No Author. 2014. Using Chi Squared to Crack Codes [online]. Available: http://ibmathsresources.com/2014/06/15/using-chi-squared-to-crack-codes/.

- ↑ T Hardy. 2005. Tess of the d’Urbervilles (11th Edition) [online]. Available: https://ia801409.us.archive.org/24/items/tessofthedurberv00110gut/110-8.txt.

- ↑ Author unknown. 2015. Letter frequency [online]. Available: http://en.wikipedia.org/wiki/Letter_frequency#cite_note-17

- ↑ No Author. 2015. List of languages by number of native speakers [online]. Available: http://en.wikipedia.org/wiki/List_of_languages_by_number_of_native_speakers#cite_note-Nationalencyklopedin-1.

- ↑ No Author. Analysis of variance [online]. Available: http://en.wikipedia.org/wiki/Analysis_of_variance

- ↑ No Author ( 2014). Using Chi Squared to Crack Codes [online]. Available: http://ibmathsresources.com/2014/06/15/using-chi-squared-to-crack-codes/

- ↑ No Author. Chi-squared test [online]. Available: http://en.wikipedia.org/wiki/Chi-squared_test

- ↑ No Author. Chi-Square Distribution Table [online]. Available: http://sites.stat.psu.edu/~mga/401/tables/Chi-square-table.pdf

- ↑ No Author. 2015. List of languages by number of native speakers [online]. Available:http://en.wikipedia.org/wiki/List_of_languages_by_number_of_native_speakers#cite_note-Nationalencyklopedin-1

Glossary and Symbols

- ASIO: Australian Security Intelligence Organisation

- ASIS: Australian Secret Intelligence Service

- ASD: Australian Signals Directorate

- P-value theorem: The p-value is the calculated probability that gives researchers a measure of the strength of evidence against the null hypothesis [1].

- N-gram model: The N-gram model is a sequence of n items from a given sequence of phonemes, syllables, letters, words or base pairs [2].

- One-time pad: The one-time pad is a decoder technology which cannot be cracked if the correct key is used [3].

- Initialism: A group of letters formed using the initial letters of a group of words or a phrase [4].

- Ciphertext: The encoded format of a message [5].

- Plaintext: The information of an original message, which is desired to be deciphered from the ciphertext </ref>.

- Ciphertext: The encoded format of a message [6].

- Key: What is needed to convert the ciphertext into the plaintext using the one-time pad </ref>.

- Ciphertext: The encoded format of a message [7].

- ↑ B. David et al., “P Value and the Theory of Hypothesis Testing: An Explanation for New Researchers,” Clinical Orthopaedics and Related Research®, Vol.468 (3), pp.885-892 2010. [25] G G. L et al., “What is the Value of a p Value?,” The Annals of Thoracic Surgery, Vol.87(5), pp.1337-1343 2009. [26] No Author.p-value [online]. Available: http://en.wikipedia.org/wiki/P-value#cite_note-nature506-1

- ↑ A. Z Broder et al., “Syntactic clustering of the web”. Computer Networks and ISDN Systems 29 (8), pp.1157–1166. [28] No Author. Video Lectures [online]. Available: https://class.coursera.org/nlp/lecture/17

- ↑ S.M. Bellovin. (2011, July 12). Frank Miller: Inventor of the One-Time Pad [online]. Available: http://www.tandfonline.com.proxy.library.adelaide.edu.au/doi/full/10.1080/01611194.2011.583711#abstract

- ↑ No Author. Initialism [online]. Available: http://dictionary.reference.com/browse/initialism

- ↑ No Author (2011). Topic 1: Cryptography [online]. Available: http://www.maths.uq.edu.au/~pa/SCIE1000/gma.pdf

- ↑ No Author (2011). Topic 1: Cryptography [online]. Available: http://www.maths.uq.edu.au/~pa/SCIE1000/gma.pdf

- ↑ No Author (2011). Topic 1: Cryptography [online]. Available: http://www.maths.uq.edu.au/~pa/SCIE1000/gma.pdf