Final Report 2012


===Executive Summary===

In 1948 a man was found dead on Somerton Beach. His identity remains unknown, and a strange code found in connection with the body remains unsolved. This project aims to use modern techniques and programs to provide insight into the meaning of the Code and the identity of the victim.

The main results of the project fall into three areas: a review of previous work, expansion of the Cipher GUI and Web Crawler, and 3D reconstruction. The review of previous years' projects concluded that the Code was consistent with being the initial letters of English words. The Cipher GUI and Web Crawler from previous years were improved to remove bugs and to make them more user-friendly.

Creating a 3D reconstruction of the victim's face expanded the project into a new area and required new techniques. Using the David Laserscanner software, a laser line and a webcam, the bust taken of the victim was scanned to produce a model. Connecting the scans together ultimately led to a 3D model that is a good representation of the victim. However, due to time constraints the model could not be modified to correct some of the inaccuracies in the bust.

===History===

On December 1st 1948, at around 6.30 in the morning, a couple out walking found a dead man lying propped against the wall at Somerton Beach, south of Glenelg. He had very few possessions on him. Though wearing smart clothes, he was without a hat (an uncommon sight at the time), and there were no labels in his clothing. The body carried no form of identification, and his fingerprints and dental records did not match any international registries. The only items on the victim were some cigarettes, chewing gum, a comb, an unused train ticket and a used bus ticket. <ref name=TamamShudWiki>''Tamam Shud Case'', Wikimedia Foundation Inc, http://en.wikipedia.org/wiki/Taman_Shud_Case</ref>

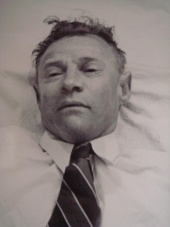

[[Image:DeadBody.jpeg|thumb|170px|right|The Somerton Man shortly after autopsy]]

The coroner's verdict stated that he was 40-45 years old, 180 centimetres tall, and in top physical condition. His time of death was estimated at around 2 a.m. that morning. The autopsy indicated that, although his heart was normal, several other organs were congested, notably his stomach, kidneys, liver and brain, and part of his duodenum. It also noted that his spleen was about three times its normal size. However, no cause of death was given; the coroner only expressed his suspicion that the death was not natural and was possibly caused by a barbiturate.<ref name=inquest>Inquest Into the Death of a Body Located at Somerton on 1 December 1948. [[State Records of South Australia]] GX/0A/0000/1016/0B, 17–21 June 1949. pp. 12–13.</ref> Later, in 1994, it was suggested during a coronial inquest that the death was consistent with digitalis poisoning. <ref name=Phillips>Phillips, J.H. "So When That Angel of the Darker Drink", ''Criminal Law Journal'', vol. 18, no. 2, April 1994, p. 110.</ref>

Of the few possessions found on the body, one was most intriguing. In a fob pocket of his trousers, the Somerton Man had a piece of paper torn from the pages of a book, bearing the words "Tamam Shud". These words were found to mean "ended" or "finished" in Persian, and were linked to the Rubaiyat, a book of poems by the Persian scholar Omar Khayyam. A nationwide search for the book ensued, and a copy that had been found on the back seat of a car in Jetty Road, Glenelg, on the night of November 30th 1948 was handed to police. It was compared with the torn sheet of paper and found to be the copy from which the sheet had been ripped.

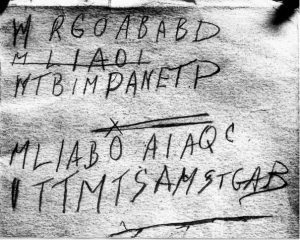

In the back of the book was written a series of five lines, the second of which was struck out. Its similarity to the fourth line suggests it was a mistake, which in turn suggests that the lines are a code rather than a series of random letters.


ITTMTSAMSTGAB

==Introduction==


For 60 years the identity of the Somerton Man and the meaning behind the code have remained a mystery. This project aims to provide an engineer's perspective on the case, using analytical techniques to decipher the code and producing a computer-generated reconstruction of the victim to assist in identification. Ultimately, the aim is to crack the code and solve the case.

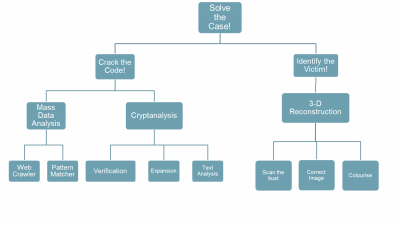

The project was broken down into two main areas of focus, Cipher Analysis and Identification, each of which was further divided into subtasks:

The first aspect of the project focuses on analysing the code using cryptanalysis and mathematical techniques, as well as programs designed for mass data analysis. The mathematical work sought to confirm and expand on the statistical analysis and cipher cross-off list from previous years. The mass data analysis work extended the existing Web Crawler, pattern matcher and Cipher GUI to improve their layout and functionality.

The second aspect focuses on creating a 3D reconstruction of the victim's face, using the bust as the template. It is hoped that the 3D image can be used to help identify the Somerton Man. This aspect of the project is new and has not been worked on previously.

The techniques and programs we have used were designed to be general, so that they can easily be applied to other cases and used in situations beyond the aim of this project.

===Motivation===

For over 60 years, this case has captured the public's imagination: an unidentified victim, an unknown cause of death, a mysterious code, and conspiracy theories surrounding the story, each wilder than the last. As engineers, our role is to solve problems, and this project gives us an ideal opportunity to apply our problem-solving skills to a real-world problem, something different from the usual "use resistors, capacitors and inductors" approach in electrical & electronic engineering. The techniques and technologies developed in the course of this project are broad enough to be applied to other areas: pattern matching, data mining, 3D modelling and decryption are all useful in a range of fields, and could even be of use in other criminal investigations such as the (perhaps related) [http://en.wikipedia.org/wiki/Taman_Shud#Marshall_case Joseph Saul Marshall case].

===Project Objectives===


===Previous Studies===

====Previous Public Investigations====


[[Image:The_Code.png|thumb|300px|right|The code found in the back of the Rubaiyat linked to the Somerton Man.]]

During the course of the police investigation, the case became ever more mysterious. Nobody came forward to identify the dead man, and the only possible clues to his identity surfaced several months later, when a suitcase that had been checked in at Adelaide Railway Station on 30 November was handed in. It was linked to the Somerton Man through a type of thread that was not available in Australia but matched the thread used to repair one of the pockets on the clothes he was wearing when found. Most of the clothes in the suitcase were similarly lacking labels, leaving only the name "T. Keane" on a tie, "Keane" on a laundry bag, and "Kean" (no 'e') on a singlet. However, these proved unhelpful, as a worldwide search found that no "T. Keane" was missing in any English-speaking country. It was later noted that these three labels were the only ones that could not be removed without damaging the clothing. <ref name=TamamShudWiki>''Tamam Shud Case'', Wikimedia Foundation Inc, http://en.wikipedia.org/wiki/Taman_Shud_Case</ref>

At the time, code experts were called in to try to unravel the text, but their attempts were unsuccessful. In 1978, the Australian Defence Force analysed the code and concluded that:

* There are insufficient symbols to provide a pattern

* The symbols could be a complex substitute code, or the meaningless response to a disturbed mind

* It is not possible to provide a satisfactory answer <ref name=InsideStory>''Inside Story'', presented by Stuart Littlemore, ABC TV, 1978.</ref>

The ambiguity of many of the letters further hinders attempts to crack the code.

As can be seen, the first letters of both the first and second (or third, counting the struck-out line) lines could be read as either an 'M' or a 'W', and the first letter of the last line as either an 'I' or a 'V'; there is also the floating 'C' at the end of the penultimate line. There is further confusion over whether the 'X' above the 'O' in the penultimate line is part of the code, and over the relevance of the second, crossed-out line. Was it a mistake on the part of the writer, or an attempt at underlining (as the later letters seem to suggest)? Many amateur enthusiasts have since attempted to decipher the code, but because a cipher can be "cherry-picked" to suit the individual, it is possible to read any number of meanings into the text.


MLIABOAIAQC

ITTMTSAMSTCAB <ref name="Final Report 2009"> "Final Report 2009"; Turnbull, A and Bihari, D; https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_report_2009:_Who_killed_the_Somerton_man%3F</ref>

=====2010=====

In the following year, Kevin Ramirez and Michael Lewis-Vassallo verified that the letters were indeed unlikely to be random, surveying a larger number of people (including both sober and drunk participants) to improve the accuracy of the letter frequency analysis. They further investigated the idea that the letters represented an initialism, testing against a wider range of texts and comparing sequences of letters taken from the code. They found that the Rubaiyat had an intriguingly low match, which suggested that it may have been made that way intentionally. From this they proposed that the code was possibly an encoded initialism based on the Rubaiyat.

The main aim of the 2010 group was to write a web crawler and text-parsing algorithm general enough to be useful beyond the scope of the course. The text parser could take in a text or HTML file, find specific words and patterns within it, and quickly work through a large directory. The web crawler passed text directly from the internet to the text parser to be checked for patterns. Given the vast amount of data available on the internet, this provided a fast way of accessing a large quantity of raw text for statistical analysis. The work built upon an existing crawler, allowing the user to input a single URL or a range of URLs from a text file, mirror the website text locally, and then automatically run it through the text-parsing software. <ref name="Final Report 2010"> "Final Report 2010"; Lewis-Vassallo, M and Ramirez, K; https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_report_2010</ref>

=====2011=====

In 2011, Steven Maxwell and Patrick Johnson proposed that an answer to the code may already exist and simply has not yet been linked to the case. To this end, they extended the web crawler to pattern match directly against an exact phrase, an initialism or a regular expression, and to pass the matches on to the user. When testing their "WebCrawler", they found a match to a portion of the code, "MLIAB". This turned out to be a poem with no connection to the case, but it demonstrates the effectiveness and potential of the WebCrawler.
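The 2011 WebCrawler's matching code is not reproduced in this report. As a rough illustration of what initialism matching involves, the sketch below turns a letter sequence such as MLIAB into a case-insensitive regular expression that matches consecutive words beginning with those letters. The class `InitialismMatcher` and its methods are hypothetical names, not the WebCrawler's API, and the sketch assumes that any run of spaces or punctuation separates words.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: finds runs of consecutive words whose initial letters spell a sequence. */
final class InitialismMatcher {
    private InitialismMatcher() {}

    /** For "MLI" this builds \bM[\w']*\W+L[\w']*\W+I[\w']*, matched case-insensitively. */
    static Pattern toPattern(String initials) {
        if (initials.isEmpty()) {
            throw new IllegalArgumentException("no initials given");
        }
        StringBuilder regex = new StringBuilder("\\b"); // first word must start at a word boundary
        for (int i = 0; i < initials.length(); i++) {
            char c = initials.charAt(i);
            if (!Character.isLetter(c)) {
                throw new IllegalArgumentException("initials must be letters: " + initials);
            }
            if (i > 0) {
                regex.append("\\W+"); // gap between words: spaces, punctuation, line breaks
            }
            regex.append(c).append("[\\w']*"); // the rest of the word, apostrophes allowed
        }
        return Pattern.compile(regex.toString(), Pattern.CASE_INSENSITIVE);
    }

    /** Returns every non-overlapping match in the text, in order of appearance. */
    static List<String> findMatches(String text, String initials) {
        List<String> hits = new ArrayList<>();
        Matcher m = toPattern(initials).matcher(text);
        while (m.find()) {
            hits.add(m.group());
        }
        return hits;
    }
}
```

For example, `findMatches(pageText, "MLIAB")` would return each phrase in `pageText` whose words start with M, L, I, A and B. Expressing the initialism as a regular expression means a single `java.util.regex` pass covers it; one limitation of this sketch is that overlapping candidate phrases are not reported.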

They also sought to expand the range of ciphers checked, writing a program that performed the encoding and decoding of a range of ciphers automatically, which allowed them to test a wide variety of ciphers in a short period of time. This also led them to use a "Cipher Cross-Off List" to keep track of which possibilities had been discounted, which were untested, and which had withstood testing to remain candidates. Using their "CipherGUI" and this cross-off list, they tested over 30 different ciphers and narrowed down the possibilities significantly: only a handful of ciphers were inconclusive, and given the period in which the Somerton Man was alive, more modern ciphers could be discounted automatically. <ref name="Final Report 2011"> "Final Report 2011"; Johnson, P and Maxwell, S; https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_report_2011</ref>

===Structure of this Report===

With so many disparate objectives, each a self-contained project in itself, the rest of the report is divided into sections dedicated to each aspect. A section on how the project was managed follows, covering the project breakdown, timeline and budget. Finally, the conclusion brings the sections back together and indicates potential future directions for the project.

==Methodology==

===Statistical Analysis of Letters===

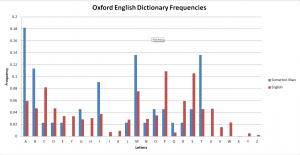

[[Image:OED.png|thumb|300px|right|Initial Letters Frequency in Oxford Dictionary.]]

The initial step in this project involved reviewing the work of previous projects and setting up tests to confirm that their results were consistent. Verification focused mainly on the analysis performed on the code and the statistical analysis that led to those results.

The results from previous years suggested that the code was most likely in English and represented the initial letters of words. To test this theory and create some baseline results, the online Oxford English Dictionary was searched and the number of words beginning with each letter of the alphabet was extracted. From this data, the frequency of each letter as the initial letter of an English word was calculated.

The results showed many inconsistencies with the Somerton Code, but can serve as a baseline for comparison with other results. The main issue is that most of the words included are not commonly used, so the dictionary is a poor representation of English as it is actually written. The likelihood of a letter being used is therefore not directly related to the number of dictionary words that begin with it.

In order to produce more useful results for comparison, a source text was found that had been translated into over 100 different languages. The text was the Tower of Babel passage from the Bible; at approximately 100 words and 1000 characters, it was a suitable size for testing. <ref name="Tower of Babel"> Ager, S. "Translations of the Tower of Babel"; http://www.omniglot.com/babel/index.htm</ref> To create the frequency representation, a Java program was written that takes in the text for each language and outputs the number of occurrences of each letter. This process was repeated for 85 of the most common languages available, and from this data the standard deviation and sum of differences relative to the Somerton Man Code were determined.
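The Java program itself is not included in this report. The sketch below shows one plausible way to compute the letter frequencies and the two comparison measures. The class and method names are hypothetical, letters outside A to Z (including accented letters used in several of the tested languages) are assumed to be ignored, and "standard deviation" is interpreted here as the root-mean-square of the per-letter differences, which may not match the exact formula used.

```java
import java.util.Locale;

/** Hypothetical sketch: relative letter frequencies and two ways of comparing them. */
final class LetterFrequency {
    private static final int LETTERS = 26;

    private LetterFrequency() {}

    /** Relative frequency (0..1) of each letter A-Z; any other character is ignored. */
    static double[] frequencies(String text) {
        int[] counts = new int[LETTERS];
        int total = 0;
        for (char c : text.toUpperCase(Locale.ROOT).toCharArray()) {
            if (c >= 'A' && c <= 'Z') {
                counts[c - 'A']++;
                total++;
            }
        }
        double[] freq = new double[LETTERS]; // stays all-zero if the text has no letters
        for (int i = 0; i < LETTERS && total > 0; i++) {
            freq[i] = (double) counts[i] / total;
        }
        return freq;
    }

    /** Sum of the absolute per-letter differences between two frequency tables. */
    static double sumOfDifference(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < LETTERS; i++) {
            sum += Math.abs(a[i] - b[i]);
        }
        return sum;
    }

    /** Root-mean-square of the per-letter differences (assumed reading of "standard deviation"). */
    static double rmsDifference(double[] a, double[] b) {
        double sumSquares = 0.0;
        for (int i = 0; i < LETTERS; i++) {
            double d = a[i] - b[i];
            sumSquares += d * d;
        }
        return Math.sqrt(sumSquares / LETTERS);
    }
}
```

Comparing one language against the Code would then amount to calling `sumOfDifference(frequencies(babelText), frequencies(codeLetters))`, where `codeLetters` is the transcribed code with the line breaks removed; a smaller value means a closer match.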

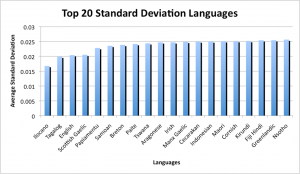

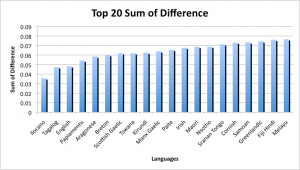

These results were quite inconsistent with previous results, as well as with each other. The top four results for both the standard deviation and the sum of differences were Sami North, Ilocano, White Hmong and Wolof, though in different orders. These languages are geographically unrelated, being spoken in Northern Europe, South-East Asia and West Africa. This suggests that none of them is likely to be the language used for the Code.

Previous studies suggested that the Code represents the initial letters of words, so this theory was tested as well. The process was repeated using a modified Java program that records the first letter of each word in the text, and from these results the frequency of each letter was calculated.
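The modified program is not reproduced here either. A minimal sketch of the change might look like the following; the class and method names are hypothetical, words are assumed to be separated by whitespace, non-letter characters at the start of a word (quotes, brackets, digits) are skipped, and words whose first letter falls outside A to Z are ignored.

```java
/** Hypothetical sketch: extracts word initials and their relative frequencies. */
final class InitialLetters {
    private InitialLetters() {}

    /** Upper-cased first letter of each whitespace-separated word, concatenated. */
    static String initials(String text) {
        StringBuilder sb = new StringBuilder();
        for (String word : text.trim().split("\\s+")) {
            int i = 0;
            while (i < word.length() && !Character.isLetter(word.charAt(i))) {
                i++; // skip opening quotes, brackets and similar
            }
            if (i < word.length()) {
                char c = Character.toUpperCase(word.charAt(i));
                if (c >= 'A' && c <= 'Z') {
                    sb.append(c);
                }
            }
        }
        return sb.toString();
    }

    /** Relative frequency (0..1) of each letter A-Z among the word initials. */
    static double[] initialFrequencies(String text) {
        String init = initials(text);
        double[] freq = new double[26];
        for (char c : init.toCharArray()) {
            freq[c - 'A'] += 1.0;
        }
        for (int i = 0; i < 26 && !init.isEmpty(); i++) {
            freq[i] /= init.length();
        }
        return freq;
    }
}
```

The resulting 26-element table has the same shape as an all-letter frequency table, so it can be compared against the Code's letter frequencies with the same distance measures.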

[[Image:Top20SD.png|thumb|300px|left|Top 20 Standard Deviation for Initial Letters.]]

[[Image:Top20SoD.png|thumb|300px|right|Top 20 Sum of Difference for Initial Letters.]]


===Cipher GUI===

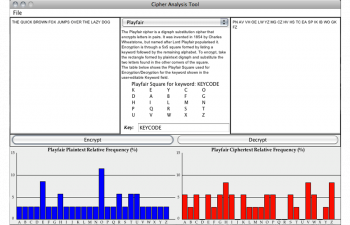

[[Image:CipherGUI.png|thumb|350px|right|Cipher GUI Interface.]]

In the course of evaluating a range of different ciphers, last year's group developed a Java program that could both encode and decode most of the ciphers they investigated. Part of our task this year was to double-check their work. To do this, we encoded and decoded both words and phrases with each cipher, looking for errors. Whilst most ciphers were faultless, we discovered an error in the decryption of the Affine cipher that caused junk to be returned. We investigated possible causes and traced it to a wrapping problem: going past the beginning of the alphabet (C, B, A, Z, Y...) was not handled correctly. With this resolved, the cipher now functions correctly in both directions.
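The CipherGUI source is not reproduced in this report, so the sketch below is not the actual fix; it is a minimal, hypothetical `AffineCipher` class showing a common source of this kind of wrapping bug. Encryption is E(x) = (a*x + b) mod 26 and decryption is D(y) = a^-1 * (y - b) mod 26, where a must be coprime with 26. In Java, the `%` operator keeps the sign of its left operand, so `(y - b) % 26` is negative whenever y < b; `Math.floorMod` always returns a value from 0 to 25.

```java
/** Hypothetical sketch of an affine cipher with correct wrap-around in both directions. */
final class AffineCipher {
    private static final int M = 26;
    private final int a;
    private final int b;
    private final int aInverse;

    AffineCipher(int a, int b) {
        this.a = Math.floorMod(a, M);
        this.b = Math.floorMod(b, M);
        this.aInverse = modInverse(this.a);
    }

    /** Brute-force modular inverse; it only exists when gcd(a, 26) == 1. */
    private static int modInverse(int a) {
        for (int x = 1; x < M; x++) {
            if ((a * x) % M == 1) {
                return x;
            }
        }
        throw new IllegalArgumentException("a must be coprime with 26, got " + a);
    }

    String encrypt(String text) {
        return transform(text, true);
    }

    String decrypt(String text) {
        return transform(text, false);
    }

    private String transform(String text, boolean encrypt) {
        StringBuilder sb = new StringBuilder(text.length());
        for (char c : text.toCharArray()) {
            char base;
            if (c >= 'A' && c <= 'Z') {
                base = 'A';
            } else if (c >= 'a' && c <= 'z') {
                base = 'a';
            } else {
                sb.append(c); // spaces and punctuation pass through unchanged
                continue;
            }
            int x = c - base;
            // (x - b) is negative for letters before b; plain % would then give a negative
            // index, whereas floorMod wraps it back into 0..25 (A, Z, Y, ...).
            int y = encrypt ? Math.floorMod(a * x + b, M) : Math.floorMod(aInverse * (x - b), M);
            sb.append((char) (base + y));
        }
        return sb.toString();
    }
}
```

With a = 5 and b = 8, for instance, `encrypt("AFFINE CIPHER")` gives `IHHWVC SWFRCP`, and decrypting the H in that ciphertext requires exactly the wrap from 7 - 8 = -1 back to 25.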

We also sought to increase the range of ciphers covered. One example we found was the Baconian cipher, in which each letter is represented by a unique series of (usually five) As and Bs (e.g. A = AAAAA, B = AAAAB, and so on). Although this cipher was known in the Somerton Man's time, the presence of letters other than A and B in the code clearly indicates that it was not used. Even so, the Baconian cipher would have made a useful addition to the CipherGUI. However, the way the program was coded, and our lack of familiarity with it, made adding further ciphers difficult, so we decided to focus our efforts on other aspects of the project.
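A minimal sketch of the 26-letter variant of the Baconian cipher, in which each letter's position in the alphabet (A = 0 to Z = 25) is written as a five-digit binary number using A for 0 and B for 1. Bacon's original alphabet used 24 letters, with I/J and U/V sharing codes, so the choice of variant here is an assumption, and the class name is hypothetical.

```java
import java.util.Locale;

/** Hypothetical sketch: 26-letter Baconian cipher (A = AAAAA, B = AAAAB, ..., Z = BBAAB). */
final class BaconianCipher {
    private BaconianCipher() {}

    static String encode(String text) {
        StringBuilder sb = new StringBuilder();
        for (char c : text.toUpperCase(Locale.ROOT).toCharArray()) {
            if (c < 'A' || c > 'Z') {
                continue; // only letters are encoded
            }
            int index = c - 'A';
            for (int bit = 4; bit >= 0; bit--) { // most significant bit first
                sb.append(((index >> bit) & 1) == 0 ? 'A' : 'B');
            }
        }
        return sb.toString();
    }

    static String decode(String code) {
        String symbols = code.toUpperCase(Locale.ROOT).replaceAll("[^AB]", "");
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i + 5 <= symbols.length(); i += 5) { // an incomplete final group is dropped
            int index = 0;
            for (int j = 0; j < 5; j++) {
                index = (index << 1) | (symbols.charAt(i + j) == 'B' ? 1 : 0);
            }
            if (index < 26) { // groups above BBAAB (25) have no letter and are skipped
                sb.append((char) ('A' + index));
            }
        }
        return sb.toString();
    }
}
```

Every symbol produced by `encode` is an A or a B, which is why the presence of other letters in the Somerton code rules this scheme out.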

Last year's group did a lot of good work developing a "Cipher Cross-off list", which went through a range of encryption methods and determined whether or not the Somerton Man passage was likely to have been encoded using each of them. We analysed their conclusions and, for the most part, could find no fault with their reasoning. However, they dismissed One-Time Pads (OTPs) wholesale, arguing that the frequency analysis of the code did not fit that of an OTP. This assumed that the frequency distribution of an OTP would be flat, since the key would be a random sequence of letters. That holds for most purposes, but if the Somerton Man had used an actual text, for example the Rubaiyat, as the key, the frequency distribution would not be flat. It is, for all intents and purposes, impossible to completely discount OTPs as the source of the cipher, since the number of texts that could have been used, even ignoring random sequences of letters, is practically unlimited. You cannot disprove something for which no evidence exists; you can only prove its existence by finding it.
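To illustrate the point, the sketch below implements an additive pad whose key letters are taken from an ordinary text instead of a random sequence; strictly, this is a running-key cipher. The class name and the choice of shifting each message letter by its key letter modulo 26 are assumptions for illustration; nothing is known about how, or whether, the Somerton Man used such a scheme. Because the key inherits the uneven letter frequencies of its source text, the ciphertext's frequency distribution is generally not flat.

```java
import java.util.Locale;

/** Hypothetical sketch: one-time-pad-style additive cipher keyed by an ordinary text. */
final class RunningKeyPad {
    private RunningKeyPad() {}

    /** Upper-cases the input and keeps only the letters A-Z. */
    static String lettersOnly(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toUpperCase(Locale.ROOT).toCharArray()) {
            if (c >= 'A' && c <= 'Z') {
                sb.append(c);
            }
        }
        return sb.toString();
    }

    static String encrypt(String plain, String keyText) {
        return apply(plain, keyText, 1);
    }

    static String decrypt(String cipher, String keyText) {
        return apply(cipher, keyText, -1);
    }

    /** Shifts letter i of the message forwards (+1) or backwards (-1) by key letter i. */
    private static String apply(String text, String keyText, int sign) {
        String p = lettersOnly(text);
        String k = lettersOnly(keyText);
        if (k.length() < p.length()) {
            throw new IllegalArgumentException("key text is shorter than the message");
        }
        StringBuilder sb = new StringBuilder(p.length());
        for (int i = 0; i < p.length(); i++) {
            int shifted = (p.charAt(i) - 'A') + sign * (k.charAt(i) - 'A');
            sb.append((char) ('A' + Math.floorMod(shifted, 26)));
        }
        return sb.toString();
    }
}
```

Decryption needs the same passage of key text, so without knowing which text (and which starting point) was used, there is nothing to test the code against; this is the practical reason such a scheme cannot be crossed off.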

===Web Crawler GUI and Pre-indexed Web Data===

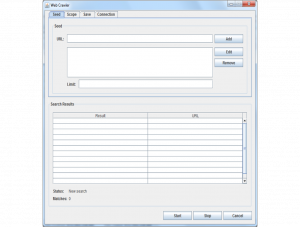

[[Image:OldGUI.png|thumb|300px|center|Original Web Crawler GUI Interface.]]

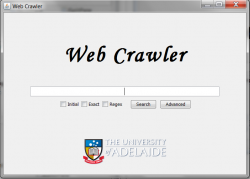

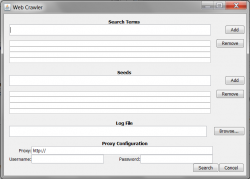

The WebCrawler developed by last year's group is a highly competent, fully functional program that does what it was designed to do very well. However, at first sight it is intimidating, requiring a range of options to be specified before a search can even begin. A detailed and helpful README file is provided with the WebCrawler, but most users consult a manual only as a last resort. We therefore sought to redesign only the front end of the WebCrawler, to encourage more experimentation and greater use by people who want their search results as quickly and easily as possible.

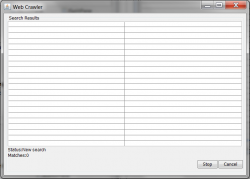

Our brief was to design something closer to Google's search page, which is immediately accessible and highly intuitive. To achieve this without losing functionality, we abstracted away the more complex options and provided default values for key parameters, so that the user only needs to enter a search string to perform a basic web crawl. Users who want to fine-tune the search (changing the web address the crawl starts from, saving the results to a different location, or defining a proxy server connection) can do so through an "Advanced" menu. Once either type of search has started, the user is shown a results page that displays more matches at a time, meaning less scrolling and making the results more immediately accessible.
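The "defaults first, options on request" design can be sketched as below. This is not the 2011 WebCrawler's code: the class, field names, default values and the example.com start page are all hypothetical placeholders, and Python is used only for illustration.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class CrawlSettings:
    """Hypothetical settings object; field names and defaults are illustrative."""
    query: str
    start_url: str = "https://example.com/"  # placeholder start page
    output_path: str = "results.txt"         # placeholder output file
    proxy: Optional[str] = None              # no proxy unless requested
    max_results_per_page: int = 50           # placeholder results-page size


def basic_search(query: str) -> CrawlSettings:
    """The 'Google-like' path: only the search string is required."""
    if not query.strip():
        raise ValueError("search string must not be empty")
    return CrawlSettings(query=query.strip())


def advanced_search(query: str, **overrides) -> CrawlSettings:
    """The 'Advanced' path: start from the defaults, then apply overrides.

    dataclasses.replace raises TypeError for an unknown field name.
    """
    return replace(basic_search(query), **overrides)
```

Keeping every default in one place means the basic and advanced paths cannot drift apart: the advanced menu only ever overrides values the basic search already supplies.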

[[Image:NewGUISearchPanel.png|thumb|250px|center|New Web Crawler GUI Interface.]][[Image:NewGUIOptionsPanel.png|thumb|250px|left|Advanced Options.]][[Image:NewGUIResultsPanel.png|thumb|250px|right|Displaying Results.]]

====Pre-Indexed Web Data====

The internet is vast, and the time it would take to crawl every single web page, even with the WebCrawler developed by last year's group, would be prohibitively long. It was therefore proposed that we look for a faster means of traversing the web. Since search engines such as Google regularly crawl the internet and cache the data, it was suggested that we find a way to access this pre-indexed data so that search times could be reduced significantly (consider how quickly Google returns its results). However, the data collected by search engines is not publicly available, so an alternative approach was required.

We looked into using a service such as Amazon's Elastic Compute Cloud (EC2) to speed up the process. Further investigation indicated that this would require substantial rewriting of the WebCrawler, which is designed to take its input through a graphical user interface, and would only side-step the issue. Amazon EC2 and similar cloud computing services would only provide a means of running many instances of the WebCrawler simultaneously. Given enough instances, this would place a heavy load on popular websites with many incoming links, since they would receive requests from many instances in a short space of time. This could (accidentally!) amount to a Distributed Denial of Service attack, with the crawlers using up the servers' bandwidth.
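One standard way to keep a crawler from overloading any single server is to enforce a minimum delay between successive requests to the same host. The Python sketch below shows the idea for a single crawler process. The one-second default is an arbitrary assumption, the class is hypothetical, and coordinating many cloud instances would additionally need a limiter shared between them, which is not shown.

```python
import time
from typing import Callable, Dict
from urllib.parse import urlsplit


class PoliteScheduler:
    """Enforce a minimum delay between requests to the same host.

    Sketch only: the default delay is an assumption, and a real crawler
    would also honour robots.txt (including any Crawl-delay directive).
    """

    def __init__(self, min_delay_s: float = 1.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.min_delay_s = min_delay_s
        self._clock = clock
        self._sleep = sleep
        # Host name -> time at which the last request to it was allowed
        self._last_request: Dict[str, float] = {}

    def wait_for(self, url: str) -> float:
        """Block until the URL's host may be contacted; return the time waited."""
        host = urlsplit(url).hostname or ""
        now = self._clock()
        last = self._last_request.get(host)
        waited = 0.0
        if last is not None:
            waited = max(0.0, last + self.min_delay_s - now)
            if waited > 0:
                self._sleep(waited)
        self._last_request[host] = now + waited
        return waited
```

The clock and sleep functions are injectable so the behaviour can be checked without real waiting; requests to different hosts are never delayed by one another.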

Given the time and effort required to fully evaluate ways of speeding up web crawling, and to rework the WebCrawler code accordingly, this would make an interesting and useful project in its own right for a future group.

===3D Reconstruction===

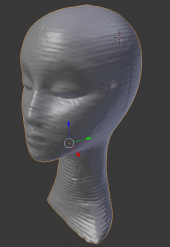

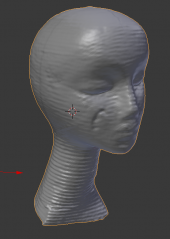

[[Image:OurBust3.jpeg|thumb|400px|right|The bust of the Somerton Man.]]

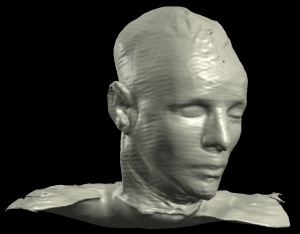

The main addition we were tasked with this year was to provide a means by which the Somerton Man could be positively identified. To do this, we needed to create a likeness of the Somerton Man as he would have appeared while alive. However, the only existing photographs and other records were made after his death, so some modification would be required to make him appear life-like. The best starting point for a likeness was a bust of his head and shoulders, made some time after his death and after the autopsy. As a result, besides being plaster-coloured and over 60 years old, the bust is not a faithful likeness: his neck is flattened and his shoulders hunched from the body having lain on a slab for several months, and there is a distinct ridge around his forehead where the skull was opened to examine his brain.

Our task was therefore three-fold: firstly, to find a method of creating a three-dimensional model of the bust that could be displayed on a computer; secondly, to manipulate the model to correct the inaccuracies introduced post-mortem, such as the forehead ridge; and thirdly, to add colour to the model based on the coroner's description of his skin, hair and eyes. Completing all three tasks would give a reasonable likeness of how the Somerton Man may have appeared before he died, which could then be circulated to help obtain a positive identification.

[[Image:SoftwareComparison.png|thumb|400px|right|Comparison of Software Considered.]]

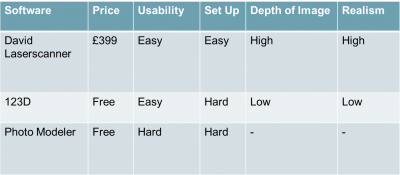

The first step in creating the model was to determine a method of reconstructing the bust in three dimensions on a computer. We considered several options, including 123D Catch from Autodesk, PhotoModeler Scanner from PhotoModeler, and the DAVID LaserScanner from DAVID. The first two are very simple systems: a series of photographs of the subject is taken from multiple angles all around it, and these are analysed by software (remotely on Autodesk's servers for 123D Catch, and on your own PC for PhotoModeler Scanner), which attempts to build a three-dimensional model by finding common points in the photos and interpolating how the whole subject appears in three dimensions. However, when we tested these products we found that, although they were initially simple to use, they were very particular about which photographs they could match, and this only became apparent after attempting to combine the images. Since we would be scanning on-site at the Police History Museum, we wanted a system that told us immediately when a picture was unhelpful, so that no time was wasted. PhotoModeler Scanner and 123D Catch also tended to flatten the subject, producing a rough three-dimensional approximation in which smaller protrusions were reduced to two-dimensional surface detail. Given the requirement to make as accurate a reconstruction as possible, this was not of a high enough standard, so we rejected both 123D Catch and PhotoModeler Scanner.

This left the DAVID LaserScanner as our main option. It works differently from the other two, using a laser line and a webcam to measure the depth of the subject relative to calibration panels that provide a reference. By taking multiple scans from different angles and then combining them into one model, a far more accurate reconstruction of the subject is possible. Although it is slightly more fiddly to set up (the camera must be calibrated and the calibration panels used), once running it is straightforward to operate: in a darkened room, the laser line is swept vertically over the subject repeatedly, and the scan is updated in real time so that the user can see whether any spots have been missed or whether there are other problems. This process is repeated as many times as necessary, rotating the subject slightly after each scan so that every side is captured. A few scans give a rough reconstruction, and more scans give a more accurate representation of the original subject; the more scans the better, but this must be balanced against any time constraints.
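As we understand the general principle behind laser-line scanners such as DAVID, the laser plane is first located from where the line crosses the two calibration panels, and each lit pixel on the subject is then triangulated by intersecting its camera ray with that plane. The Python sketch below shows only that final ray-plane intersection; we did not inspect DAVID's implementation. It assumes an ideal pinhole camera at the origin looking along +z, with no lens distortion, and intrinsics `fx`, `fy`, `cx`, `cy` assumed to come from camera calibration.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def pixel_ray(u: float, v: float, fx: float, fy: float,
              cx: float, cy: float) -> Vec3:
    """Direction of the camera ray through pixel (u, v).

    Assumes an ideal pinhole camera at the origin looking along +z,
    with no lens distortion.
    """
    return ((u - cx) / fx, (v - cy) / fy, 1.0)


def intersect_laser_plane(ray: Vec3, normal: Vec3, d: float) -> Vec3:
    """Intersect the ray t * ray (t > 0) with the laser plane normal . X = d."""
    denom = sum(r * n for r, n in zip(ray, normal))
    if abs(denom) < 1e-12:
        raise ValueError("ray is parallel to the laser plane")
    t = d / denom
    if t <= 0:
        raise ValueError("intersection lies behind the camera")
    return (t * ray[0], t * ray[1], t * ray[2])
```

Repeating this for every lit pixel in every frame of a sweep yields the point cloud for one scan; accuracy depends on how well the camera intrinsics and the laser plane are known, which is why the calibration panels matter.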

Once we had settled on the DAVID LaserScanner, we immediately placed an order for the kit. This included all the equipment required for a successful scan: a webcam and stand, calibration panels and baseboard, a 5 mW laser line, and the all-important DAVID LaserScanner software. However, since the laser's power output was greater than 1 mW, we were required to perform a Risk Assessment and provide a Standard Operating Procedure to ensure correct usage and avoid the risks associated with lasers. We consulted Henry Ho, a technician in the School, who provided examples of Risk Assessments and Standard Operating Procedures and advised us on the best approach, the particular risks we might need to consider, and the steps necessary for the correct use of lasers. We drafted these documents and, with feedback from Henry, produced versions that were submitted to the School Manager, Stephen Guest, for approval. After minor adjustments they were approved, and we obtained the LaserScanner equipment during the mid-year break.

Once we had the kit, we first familiarised ourselves with its operation by consulting the manual and doing some dry runs with just the software. When we were reasonably confident, we began test scans on small objects: an apple and a hat model. These scans helped us estimate how long each scan would take, and therefore how many scans we could do in a fixed period. They also revealed the limitations of the system, such as the difficulty of scanning the top and bottom of the subject, and helped us find ways to overcome them. Through this process we produced our first fully successful model, combining all the scans to create a reasonable facsimile of the real-world object, the hat model.

[[Image:Bust2Left.png|thumb|170px|left|First Fully Successful Scan.]]

[[Image:Bust2Right.png|thumb|170px|right|First Fully Successful Scan.]]

One significant difference between the hat model and the actual bust was the width of the shoulders. The hat model is narrow because it ends at the base of the neck, but the bust is significantly wider because it includes the Somerton Man's shoulders. The calibration panels provided were A3 in size, which turned out not to be large enough for our purposes: either the bust would have to be too far from the panels for accurate reproduction, or it would be wider than the panels and so could not be scanned successfully. We therefore had enlarged panels made, which we expected to overcome this problem, though we could not test this with certainty without access to the bust. The enlarged calibration panels were mounted on a thicker backboard for stability, but this also prevented them from fitting into the baseboard supplied with the originals. The software requires a 90 degree angle between the boards, and with the new panels we could no longer guarantee this.
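To see why a mis-seated backboard matters, the angle between the two panels can be computed from three points measured on each: the cross product gives each plane's normal, and the dot product gives the angle between the normals. The Python sketch below assumes the points are already expressed in one coordinate frame (how they are measured is left open) and reports the angle between the planes, which should be 90° for a correctly assembled corner. It is an illustrative check, not part of the DAVID software.

```python
import math
from typing import Tuple

Point = Tuple[float, float, float]


def plane_normal(p0: Point, p1: Point, p2: Point) -> Point:
    """Normal of the plane through three non-collinear points (cross product)."""
    a = [p1[i] - p0[i] for i in range(3)]
    b = [p2[i] - p0[i] for i in range(3)]
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def angle_between_planes_deg(n1: Point, n2: Point) -> float:
    """Angle in degrees between two planes, via the angle between their normals."""
    dot = sum(x * y for x, y in zip(n1, n2))
    norm = math.sqrt(sum(x * x for x in n1)) * math.sqrt(sum(x * x for x in n2))
    if norm == 0:
        raise ValueError("degenerate normal (points may be collinear)")
    cos_theta = max(-1.0, min(1.0, dot / norm))  # guard against rounding
    return math.degrees(math.acos(cos_theta))
```

For example, a back panel in the plane z = 0 and a floor panel in the plane y = 0 give 90°, while tilting one board shows up directly as a deviation from that value.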

Once we were as prepared as we could be, we contacted the SA Police Museum and were given permission to visit and scan the bust on-site. The scanning was conducted over 2 hours, with 65 separate scans covering all angles of the bust to ensure we had enough data for as accurate a reconstruction as possible. Fortunately, the glass case containing the bust had a groove around each side (in which the glass sits) that was a perfect fit for the boards and gave a good right angle for the software to work with. Our time with the bust was a success, though more time would have been beneficial: because of the time constraints, more attention ended up being focused on one side of the bust than the other, and this is evident in the resulting 3-D model.

With the scanning finished, we turned our attention to combining the scans into one continuous model. This was a very time-consuming and processor-intensive task: two scans were aligned to achieve the best match, then a third was added and aligned, then a fourth, and so on. We made several separate attempts, using different numbers of scans, adding them in different orders and trying different techniques (making all scans available to be added at once, or combining two and then adding a third, and so forth), until we achieved a model we were happy with given the time constraints and the limitations of the software. This model has since been sent to be printed on a 3-D printer within the School, and we look forward to seeing the result.
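We do not know exactly how DAVID's fusion step works internally. A common approach for aligning overlapping scans is the Iterative Closest Point (ICP) method, which alternates between matching nearest points and solving for the best rigid rotation and translation. The Python sketch below shows only that second step, the Kabsch least-squares fit, assuming the point correspondences are already known; it requires NumPy.

```python
import numpy as np


def kabsch_align(source: np.ndarray, target: np.ndarray):
    """Least-squares rigid transform (R, t) mapping source points onto target.

    source and target are (N, 3) arrays of corresponding points, N >= 3,
    not all collinear. Returns a 3x3 rotation R and a translation t of
    shape (3,) such that target ~= source @ R.T + t.
    """
    src_centroid = source.mean(axis=0)
    tgt_centroid = target.mean(axis=0)
    # Cross-covariance matrix of the centred point sets
    H = (source - src_centroid).T @ (target - tgt_centroid)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1),
    # not a reflection
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    D = np.diag([1.0, 1.0, d])
    R = Vt.T @ D @ U.T
    t = tgt_centroid - R @ src_centroid
    return R, t
```

In a full ICP loop, the correspondences would be re-estimated after each fit until the alignment stops improving; adding scans one at a time, as we did, repeats this for each new scan against the growing model.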

[[Image:FinalScan.png|thumb|300px|center|Screenshot of the Final Scan.]]

====Aside: Ear Analysis====

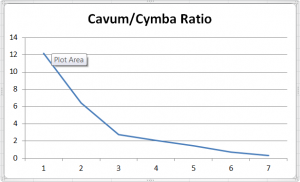

Although the Somerton Man has yet to be identified, numerous attempts have been made using his relatively rare physiological features. One such feature is that the cymba of his ears is larger than the cavum. This is the reverse of the norm, where the cavum tends to be the larger of the two. However, the relative sizes can be distorted in photographs taken at angles other than directly in line with the ear (i.e. with the camera not level with the ear). To investigate this effect, we first attempted to use the DAVID LaserScanner to make a 3-D scan of a human ear (my own). However, the software is only effective with completely stationary subjects, so our scans were not of sufficient quality for our purposes. Instead, Tom took multiple photographs of my ear from different angles, and we used these to compute the areas of the cymba and cavum visible in each image. These areas were converted into a ratio (cavum area / cymba area), giving a single value that could be compared across pictures and plotted.

The results showed that as the camera position gets lower relative to the ear, the size of the cymba relative to the cavum increases. Pictures taken from below ear level therefore overestimate the size of the cymba, and could be used to falsely suggest an identity for the Somerton Man. Picture 1 was taken from the highest position above the ear and Picture 7 from the lowest. Angles are measured from the horizontal at ear height, so that level with the ear is 0°, directly above the ear is +90° and directly below is -90°.

[[Image:CymbaCavumGraph.png|thumb|300px|right|Graph showing relationship between cavum/cymba ratio and photo angle.]]

{| class="wikitable" style="text-align: center; width: 200px; height: 200px;"
|-
! Picture !! Cymba Area (pixels) !! Cavum Area (pixels) !! Cavum/Cymba Ratio
|-
| 1 || 223 || 2712 || 12.16143498
|-
| 2 || 473 || 3032 || 6.410147992
|-
| 3 || 1257 || 3451 || 2.745425617
|-
| 4 || 1782 || 3718 || 2.086419753
|-
| 5 || 1905 || 2732 || 1.434120735
|-
| 6 || 2373 || 1640 || 0.691108302
|-
| 7 || 1294 || 398 || 0.307573416
|}
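The ratio column can be reproduced from the two pixel-area columns, which also makes the downward trend easy to check programmatically. The short Python sketch below simply copies the table's pixel counts; the helper names are our own. With only seven photographs of a single ear, the result illustrates the effect rather than providing a general correction.

```python
# Cymba and cavum areas in pixels, copied from the table (Pictures 1-7,
# ordered from the highest camera position to the lowest).
CYMBA_PX = [223, 473, 1257, 1782, 1905, 2373, 1294]
CAVUM_PX = [2712, 3032, 3451, 3718, 2732, 1640, 398]


def cavum_cymba_ratios(cymba, cavum):
    """Cavum area divided by cymba area for each photograph."""
    if len(cymba) != len(cavum):
        raise ValueError("need one cymba and one cavum area per picture")
    return [cav / cym for cym, cav in zip(cymba, cavum)]


def is_strictly_decreasing(values):
    """True if every value is smaller than the one before it."""
    return all(later < earlier for earlier, later in zip(values, values[1:]))


ratios = cavum_cymba_ratios(CYMBA_PX, CAVUM_PX)
for picture, ratio in enumerate(ratios, start=1):
    print(f"Picture {picture}: cavum/cymba = {ratio:.3f}")
```

`is_strictly_decreasing(ratios)` confirms that the ratio falls with every step down in camera position across these seven pictures.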

==Project Management==

===Timeline===

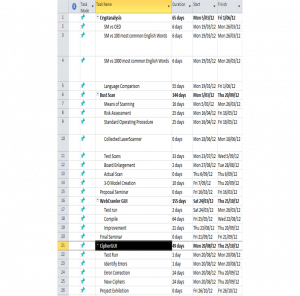

Throughout the project there were a number of key milestones, representing deliverables and achievement goals. A summary of all the milestones is shown in the Work Breakdown Structure and Gantt chart. The deliverables included a proposal seminar and progress report in the first semester, and the final report, seminar and exhibition in the second semester. All of these deliverables were completed and submitted on time.

The other aspect of the project timeline was the achievement goals, set at the start of the year to define the project's timeframe. The review of previous work was begun and completed during the first semester, alongside modifications to the Web Crawler.

The 3D reconstruction was originally planned to begin around term 2 of semester 1. However, because of issues with the ordering and the requirement for a risk assessment to be performed, it was delayed until the start of semester 2. As a result, the scan itself was not performed until early September, which meant that the objectives for the 3D reconstruction had to be modified.

The initial timeline was quite unrealistic and did not take into account the time each task would require. Narrowing the focus of the project would likely have produced more results in the areas that remained.

[[Image:NewListofTasks.png|thumb|300px|left|Task Breakdown.]]

[[Image:NewGanttChart.png|thumb|500px|right|Project Outline.]]

===Budget===

{| class="wikitable" style="text-align: center; width: 250px; height: 200px;"
|-
! Item !! Expenditure
|-
| DAVID LaserScanner || $650
|-
| Enlarged Calibration Panels || $50
|-
| Total Allocation || $500
|-
| Total Expenditure || $700
|-
| '''Difference''' || '''-$200'''
|}

With two students in the group, we had a combined budget of 2 × $250 = $500 from the School.

At the outset of the project, we looked into several different options for making 3-D models of real-world objects. We found a program called the DAVID LaserScanner, which appeared to give good reconstructions but cost €449.00 (approximately $570 at the exchange rate at the time) plus shipping from Europe. The extra cost of the LaserScanner was borne by Prof. Derek Abbott, who saw its usefulness beyond the scope of this project and agreed that its higher-quality output was necessary to achieve our objectives.

After performing test scans on an object of similar size to the bust, we found that larger calibration panels were necessary, and these were printed at Officeworks for approximately $50.

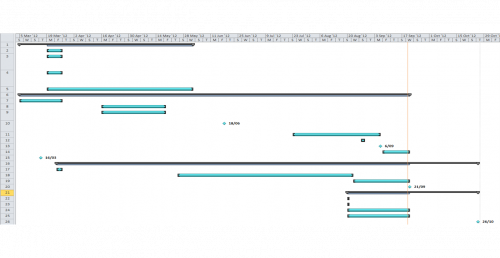

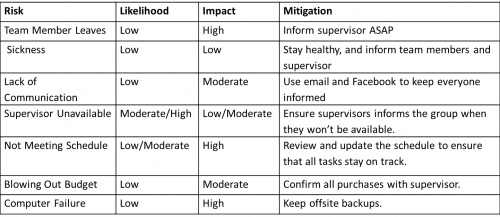

===Risk Management===

[[Image:RiskManagementTable.png|thumb|500px|right|Potential Risks to the Project.]]

At the beginning of the project a risk management plan was created, outlining the potential risks and hazards facing the project.

Most of these risks were avoided, and the mitigation strategies prevented any of them from causing major problems. Illness and poor communication did not cause difficulties during the project. There were a number of occasions when the supervisors were unavailable, but the effect of this was minimised through the use of email. Using SVN as an online backup gave everyone in the group access to the latest versions of data and documentation.

The two main issues that arose during the project were the schedule and the budget, although their effects were minimised through mitigation. Because of issues surrounding the DAVID LaserScanner, the schedule had to be modified and updated to meet the new deadlines. The budget overrun did not cause a problem, as Prof. Derek Abbott approved all purchases and covered the additional cost.

==Project Outcomes==

===Conclusion===

This project can be considered a success, since it achieved the majority of its objectives and produced some interesting results. It drew on a wide range of technical skills, such as software programming, statistical analysis, user interface design, documentation and 3D scanning techniques, allowing us to apply an electrical engineer's skill set to produce valid results.

The statistical analysis performed helped to verify the results of previous groups, reaching the same conclusion that the Code is consistent with the initial letters of English words. Improvements to the Web Crawler's interface and the Cipher GUI's functionality allow for future expansion, as well as better performance of current operations. The new 3D reconstruction work was successful, producing a computer-generated model that can be modified and used to help identify the Somerton Man.

Overall, the project leaves a clear path for future projects to expand and improve upon.

===Future Development===

Although the likelihood of actually solving the case during an honours project is quite low, this exercise offers myriad ways in which electrical and electronic engineering students can build on the work done by previous years' groups.

A group in future years could continue our work on the 3-D model, finding a way to manipulate it to produce a passable likeness of the Somerton Man as he might have appeared before he died, including hair, eye and skin colour. They might also develop a device and software that automatically scan and rotate an object and fuse the scans together to create the 3-D model. There is also the option to integrate the redesigned GUI into the existing code, or to come up with an even more user-friendly design of their own. No doubt they could find more ciphers to test and incorporate into the CipherGUI; one we considered was the Baconian cipher, which is trivially ruled out for the code but would still be a useful addition to the CipherGUI. If so inclined, they could apply the techniques and programs developed to similar mysterious deaths, such as the previously mentioned Joseph Saul Marshall case in Sydney.

Alternatively, they could try some entirely new avenues of investigation. The Somerton Man is known to be buried in West Terrace Cemetery, so if his body were exhumed it would provide DNA that could be analysed to indicate where he was likely to have come from, and perhaps compared with that of potential candidates to eliminate them (or not). As a project in itself, future groups could look into more efficient ways of searching the internet, either through parallel processing (overcoming the pitfalls inherent in that approach) or by accessing pre-indexed data in some way. Other ways of identifying the Somerton Man could also be investigated, such as using image comparison software to examine photos of potential "Somerton Men" and discount them based on facial recognition or similar techniques.

The possibilities thrown up by this case, which covers such a wide variety of aspects, are limited only by the imagination of the students and the supervisor. Even 60 years on, so little is known for certain that any insight could prove decisive. What more could a budding engineer want than to solve one of the most fascinating cold cases in Australian history?

==References==


==See Also==

* [[Glossary 2011|Glossary]]
* [[Semester A Progress Report 2012]]
* [[Cipher Cross-off List]]
* [[Media:Cipher GUI.rar|CipherGUI 2011 (Download)]]
* [[Web Crawler 2011|Web Crawler 2011 (Download)]]
* [http://youtu.be/Zd8gQDM43iI 2012 Final Seminar (YouTube)]
* [http://www.youtube.com/watch?v=bmorbe01mTA The scanning process (YouTube)]
* [http://youtu.be/SEAo4pt-JHU The fusion process (YouTube)]
* [http://youtu.be/vzijH9zGmXE The final model (YouTube)]
* [http://www.youtube.com/watch?v=jWE7xl9LiO The full project overview (YouTube)]

==References and useful resources==

Latest revision as of 21:11, 27 November 2012

Executive Summary

In 1948 a man was found dead on Somerton Beach. His identity remains unknown, and a strange code found in connection with the body remains unsolved. The project aims to use modern techniques and programs to provide insight into the meaning of the Code and the identity of the victim.

The main results of the project fall into three areas: a review of previous work, expansion of the Cipher GUI and Web Crawler, and a 3D reconstruction. The review of previous years' projects concluded that the Code is consistent with being the initial letters of English words. The previous years' Cipher GUI and Web Crawler were improved to remove bugs and to create a more user-friendly system.

Creating a 3D reconstruction of the victim's face expanded the project into a new area requiring new techniques. Using the DAVID LaserScanner software, a laser line and a webcam, the post-mortem bust of the victim was scanned and a model was produced. Combining the scans ultimately led to a 3D model that is a good representation of the victim. However, because of time constraints, the model could not be modified to correct some of the inaccuracies in the bust.

History

On December 1st 1948, at around 6.30 in the morning, a couple out walking found a dead man propped against the wall at Somerton Beach, south of Glenelg. He had very few possessions on him and no form of identification. Though smartly dressed, he was without a hat (an uncommon sight at the time), and there were no labels in his clothing. His fingerprints and dental records did not match any international registries. The only items on the victim were some cigarettes, chewing gum, a comb, an unused train ticket and a used bus ticket. [1]

The coroner's findings stated that he was 40-45 years old, 180 centimetres tall, and in top physical condition. His time of death was estimated at around 2 a.m. that morning, and the autopsy indicated that, although his heart was normal, several other organs were congested, notably his stomach, kidneys, liver and brain, and part of his duodenum. It also noted that his spleen was about three times its normal size, but no cause of death was given; the coroner only expressed his suspicion that the death was not natural and was possibly caused by a barbiturate.[2] In 1994, 46 years later, it was suggested during a coronial inquest that the death was consistent with digitalis poisoning. [3]

Of the few possessions found on the body, one was particularly intriguing. In a fob pocket of his trousers, the Somerton Man had a piece of paper torn from the pages of a book, bearing the words "Tamam Shud". These words were found to mean "ended" or "finished" in Persian, and were linked to the Rubaiyat, a collection of poems by the Persian scholar Omar Khayyam. A nationwide search for the book followed, and police were handed a copy that had been found on the back seat of a car in Jetty Road, Glenelg, on the night of November 30th 1948. This was compared with the torn piece of paper and found to be the copy from which it had been ripped.

In the back of the book a series of five lines was written, the second of which was struck out. Its similarity to the fourth line suggests that it was a mistake, which in turn suggests that the lines are a code rather than a series of random letters.

WRGOABABD

MLIAOI

WTBIMPANETP

MLIABOAIAQC

ITTMTSAMSTGAB

Introduction

For 60 years the identity of the Somerton Man and the meaning of the code have remained a mystery. This project aims to provide an engineer's perspective on the case, using analytical techniques to decipher the code and producing a computer-generated reconstruction of the victim to assist in identification. The ultimate aim is to crack the code and solve the case.

The project was divided into two main areas of focus, Cipher Analysis and Identification, each of which was further broken down into subtasks.

The first area focuses on analysing the code, using cryptanalysis and mathematical techniques as well as programs designed for mass data analysis. The mathematical techniques were used to confirm and expand on the statistical analysis and cipher cross-off of previous years. The mass data analysis built on the existing Web Crawler, pattern matcher and Cipher GUI to improve the layout and functionality of these applications.

The second area focuses on creating a 3D reconstruction of the victim's face, using the bust as the template. It is hoped that the 3D model can be used to help identify the Somerton Man. This aspect of the project is new and had not been worked on by previous groups. The techniques and programs we used were designed to be general, so that they could easily be applied to other cases or used in situations beyond the aim of this project.

Motivation

For over 60 years, this case has captured the public's imagination: an unidentified victim, an unknown cause of death, a mysterious code, and conspiracy theories surrounding the story, each wilder than the last. As engineers, our role is to solve problems, and this project gives us an ideal opportunity to apply our problem-solving skills to a real-world problem, something different from the usual "use resistors, capacitors and inductors" approach in electrical & electronic engineering. The techniques and technologies developed in this project are broad enough to be applied to other areas: pattern matching, data mining, 3-D modelling and decryption are all useful in a range of fields, and could even assist other criminal investigations, such as the (perhaps related) Joseph Saul Marshall case.

Project Objectives

The ultimate goal of this project is to help the police solve the mystery. While this is a lofty and probably unachievable goal, by working towards it we may be able to provide useful insights into the case and develop techniques and technologies applicable beyond this single case. We have taken a multi-pronged approach to give a broader perspective, drawing in different aspects that may be meaningless in isolation but become significant when taken together. The two main focuses of this project are cracking the code found in the Rubaiyat and identifying the victim. Cracking the code is approached in two ways: using the web crawler and pattern matcher to trawl the internet for meaning in the letters of the code, and directly interrogating the code with various ciphers to try to find out what is written. To identify the victim so long after his death, we need to recreate how he might have looked when alive, first by making a three-dimensional reconstruction from the bust made after his autopsy, and then by manipulating the resulting model to create a more life-like representation, complete with hair, skin and eye colours (which can be found in the coroner's report).
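The pattern-matching idea, finding places in a text where consecutive words begin with the letters of the code, can be illustrated with a short Python sketch. It is not the 2011 pattern matcher: splitting words with a regular expression and ignoring case are our assumptions, the function name is hypothetical, and in practice the text would come from pages fetched by the web crawler.

```python
import re
from typing import Iterator, Tuple


def initial_letter_matches(text: str, code: str) -> Iterator[Tuple[int, str]]:
    """Yield (word_index, phrase) for every run of consecutive words in the
    text whose initial letters spell out the code (case-insensitive)."""
    # A "word" here is a letter followed by letters or apostrophes (assumption)
    words = re.findall(r"[A-Za-z][A-Za-z']*", text)
    initials = "".join(w[0].upper() for w in words)
    target = code.upper()
    n = len(target)
    if n == 0:
        return
    for start in range(len(initials) - n + 1):
        if initials[start:start + n] == target:
            yield start, " ".join(words[start:start + n])
```

For example, searching "The quick brown fox jumps over the lazy dog" for "TLD" yields `(6, "the lazy dog")`. Real lines of the code are much longer, so exact matches in ordinary text are rare, which is why large volumes of text need to be searched.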

Previous Studies

Previous Public Investigations

As the police investigation proceeded, the case became ever more mysterious. Nobody came forward to identify the dead man, and the only possible clues to his identity emerged several months later, when a suitcase that had been checked in at Adelaide Railway Station was handed in. It had been deposited on 30th November, and was linked to the Somerton Man by a type of thread, not available in Australia, that matched the thread used to repair one of the pockets of the clothes he was wearing when found. Most of the clothes in the suitcase similarly had their labels removed, leaving only the name "T. Keane" on a tie, "Keane" on a laundry bag, and "Kean" (no 'e') on a singlet. However, these proved unhelpful, as a worldwide search found no "T. Keane" missing in any English-speaking country. It was later noted that these three labels were the only ones that could not have been removed without damaging the clothing. [1]

At the time, code experts were called in to try to unravel the text, but their attempts were unsuccessful. In 1978, the Australian Defence Force analysed the code, and came to the conclusion that:

- There are insufficient symbols to provide a pattern

- The symbols could be a complex substitute code, or the meaningless response to a disturbed mind

- It is not possible to provide a satisfactory answer [4]

Cracking the code is made harder still by the ambiguity of many of the letters.

As can be seen, the first letters of both the first and second (or possibly third) lines could be read as either an 'M' or a 'W'. The first letter of the last line could be either an 'I' or a 'V', and there is also the floating 'C' at the end of the penultimate line. There is further uncertainty over whether the 'X' above the 'O' in the penultimate line is part of the code. The relevance of the second, crossed-out line is also unclear: was it a mistake on the part of the writer, or an attempt at underlining (as the later letters seem to suggest)? Many amateur enthusiasts have since attempted to decipher the code. However, because a cipher can be "cherry-picked" to suit the individual, almost any meaning can be read into the text.

Previous Honours Projects

This is the fourth year of this particular project, and each of the previous groups has contributed in its own way, providing a useful foundation for the studies undertaken this year and in the future.

2009

The original group from 2009, Andrew Turnbull and Denley Bihari, focused on the nature of the "code". In particular, they looked at whether it even warranted investigation: could it simply have been the random ramblings of an intoxicated mind? They surveyed people, both drunk and sober, and found that the letter frequencies of the code were significantly different from those of the random letters produced by this population sample. The letters were therefore unlikely to be random. Given that the letters appeared to have some significance, they set about establishing what that significance was. They considered the possibility that the letters were the initial letters of the words in an unordered list, and found that this idea had some merit. They also tested the theory that the letters were part of a transposition cipher, in which the order of the letters is simply rearranged, as in an anagram. A transposition leaves the letter frequencies of the original message unchanged, but the frequencies in the code (a single 'E' in the whole sequence, for example) made this unlikely. After discounting transposition ciphers, they looked at the other main group: substitution ciphers, in which the letters of the original message are replaced with different ones. They were able to discount Vigenère, Playfair, Alphabet Reversal and Caesar ciphers. They also examined the possibility that the code used the Rubaiyat or the Bible as a one-time pad, though both were eliminated as candidates. Finally, they attempted to establish the language the code was written in. Scoring mainly Western European languages using Hidden Markov Modelling, they found that English was the best match. Based on this, they concluded that, given the ambiguity in some of the letters, the most likely intended sequence of letters in the code is:

WRGOABABD

WTBIMPANETP

MLIABOAIAQC

ITTMTSAMSTCAB
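To illustrate what discounting a Caesar cipher involves, the sketch below tries all 25 non-trivial shifts on the first line of the sequence above. It prints each candidate plaintext for a person to inspect. It is our own minimal example, not the 2009 group's code, and the class and method names are hypothetical.

```java
import java.util.Locale;

class CaesarBruteForce {

    // Shift every letter A-Z back by `shift` places, wrapping round the alphabet.
    static String decode(String ciphertext, int shift) {
        StringBuilder out = new StringBuilder();
        for (char c : ciphertext.toUpperCase(Locale.ROOT).toCharArray()) {
            if (c >= 'A' && c <= 'Z') {
                // floorMod keeps the index in 0..25 even when c - 'A' - shift is negative
                out.append((char) ('A' + Math.floorMod(c - 'A' - shift, 26)));
            } else {
                out.append(c); // leave anything that is not a letter untouched
            }
        }
        return out.toString();
    }

    public static void main(String[] args) {
        String line = "WRGOABABD"; // first line of the sequence proposed by the 2009 group
        for (int shift = 1; shift < 26; shift++) {
            System.out.println(shift + ": " + decode(line, shift));
        }
    }
}
```

The other lines are checked the same way. A Caesar cipher is ruled out when none of the 25 shifts produces readable text.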

2010

In the following year, Kevin Ramirez and Michael Lewis-Vassallo verified that the letters were indeed unlikely to be random. They surveyed a larger number of people, both sober and drunk, to improve the accuracy of the letter frequency analysis. They further investigated the idea that the letters represented an initialism, testing sequences of letters taken from the code against a wider range of texts. They found that the Rubaiyat had an intriguingly low match rate, which suggested that this might have been intentional. From this they proposed that the code was possibly an encoded initialism based on the Rubaiyat.
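A minimal sketch of this kind of comparison is given below. It assumes that a text's initialism is simply the first letter of each word, and that a "match" is a run of consecutive code letters that appears somewhere in that initialism. The 2010 group's actual scoring method is not reproduced here, and InitialismMatcher is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class InitialismMatcher {

    // A "word" is a run of letters, optionally with internal apostrophes ("don't"; "'tis" -> "tis").
    private static final Pattern WORD = Pattern.compile("[A-Za-z]+(?:'[A-Za-z]+)*");

    // Initialism of a text: the upper-cased first letter of every word, in order.
    static String initialism(String text) {
        StringBuilder sb = new StringBuilder();
        Matcher m = WORD.matcher(text);
        while (m.find()) {
            sb.append(Character.toUpperCase(m.group().charAt(0)));
        }
        return sb.toString();
    }

    // Every run of `length` consecutive code letters that also occurs in the text's initialism.
    static List<String> matchingWindows(String code, String text, int length) {
        String initials = initialism(text);
        List<String> hits = new ArrayList<>();
        for (int i = 0; i + length <= code.length(); i++) {
            String window = code.substring(i, i + length);
            if (initials.contains(window)) {
                hits.add(window);
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        // Hypothetical sample text, used only to exercise the methods.
        String text = "My love is a bright rose";
        System.out.println(initialism(text));                        // MLIABR
        System.out.println(matchingWindows("MLIABOAIAQC", text, 3)); // [MLI, LIA, IAB]
    }
}
```

The proportion of windows that match, taken over many texts and window lengths, gives a rough match rate of the kind described above.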

The main aim for the group in 2010 was to write a web crawler and a text-parsing algorithm that were general enough to be useful beyond the scope of the course. The text parser could take in a text or HTML file, find specific words and patterns within it, and be run quickly over a large directory of files. The web crawler passed text directly from the internet to the text parser to be checked for patterns. Given the vast amount of data available on the internet, this provided a fast way to obtain a large quantity of raw text for statistical analysis. The crawler was built upon an existing crawler. It allowed the user to input a single URL or a range of URLs from a text file, mirror the website text locally, and then automatically run it through the text-parsing software.
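The text-parsing half of that pipeline might look like the sketch below. It assumes UTF-8 files and treats any .txt, .htm or .html file as input. Tags are stripped with a crude regular expression rather than a real HTML parser. This is an illustration only: the class TextParser and its methods are hypothetical and are not the 2010 group's code.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class TextParser {

    // Crude tag stripper: replaces anything between '<' and '>' with a space.
    static String stripHtml(String html) {
        return html.replaceAll("<[^>]*>", " ");
    }

    // Number of non-overlapping matches of `pattern` in `text`.
    static int countMatches(String text, Pattern pattern) {
        Matcher m = pattern.matcher(text);
        int count = 0;
        while (m.find()) {
            count++;
        }
        return count;
    }

    // Walk a directory tree and report "path: count" for each .txt/.htm/.html file containing the pattern.
    static List<String> scanDirectory(Path root, Pattern pattern) throws IOException {
        List<Path> files;
        try (Stream<Path> paths = Files.walk(root)) {
            files = paths.filter(Files::isRegularFile).sorted().collect(Collectors.toList());
        }
        List<String> report = new ArrayList<>();
        for (Path file : files) {
            String name = file.getFileName().toString().toLowerCase();
            boolean html = name.endsWith(".html") || name.endsWith(".htm");
            if (!html && !name.endsWith(".txt")) {
                continue; // skip anything that is not plain text or HTML
            }
            String text = Files.readString(file); // assumes UTF-8 encoded files
            int hits = countMatches(html ? stripHtml(text) : text, pattern);
            if (hits > 0) {
                report.add(file + ": " + hits);
            }
        }
        return report;
    }

    public static void main(String[] args) throws IOException {
        if (args.length < 2) {
            System.out.println("usage: TextParser <directory> <regex>");
            return;
        }
        Pattern pattern = Pattern.compile(args[1], Pattern.CASE_INSENSITIVE);
        for (String line : scanDirectory(Path.of(args[0]), pattern)) {
            System.out.println(line);
        }
    }
}
```

Given a folder of locally mirrored pages and a regular expression, it lists every page that contains a match, together with the match count.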

2011

In 2011, Steven Maxwell and Patrick Johnson proposed that an answer to the code may already exist but has not yet been linked to the case. To this end, they further developed the web crawler so that it could pattern-match directly against an exact phrase, an initialism or a regular expression, and pass the matches on to the user. When they tested their "WebCrawler", they found a match to a portion of the code, "MLIAB". This turned out to be a poem with no connection to the case, but it demonstrates the effectiveness and potential of the WebCrawler.
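Initialism matching can be expressed as a regular expression, so a single regex engine can serve all three search modes. The sketch below is ours rather than the 2011 WebCrawler's. It turns an initialism such as "MLIAB" into a case-insensitive pattern that matches consecutive words starting with those letters. Treating a word as a run of letters and apostrophes is a simplifying assumption.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class InitialismRegex {

    // Build a case-insensitive regex matching consecutive words whose first letters spell `initials`.
    // For "MLI" this produces: \bM[A-Za-z']*[^A-Za-z']+L[A-Za-z']*[^A-Za-z']+I[A-Za-z']*\b
    static Pattern toPattern(String initials) {
        StringBuilder re = new StringBuilder("\\b");
        for (int i = 0; i < initials.length(); i++) {
            char c = initials.charAt(i);
            if (!Character.isLetter(c)) {
                throw new IllegalArgumentException("initials must be letters: " + initials);
            }
            if (i > 0) {
                re.append("[^A-Za-z']+"); // word separator: spaces, punctuation, line breaks
            }
            re.append(c).append("[A-Za-z']*"); // the initial, then the rest of the word
        }
        re.append("\\b");
        return Pattern.compile(re.toString(), Pattern.CASE_INSENSITIVE);
    }

    // Every non-overlapping run of words in `text` whose initials spell `initials`.
    static List<String> findMatches(String text, String initials) {
        Matcher m = toPattern(initials).matcher(text);
        List<String> hits = new ArrayList<>();
        while (m.find()) {
            hits.add(m.group());
        }
        return hits;
    }

    public static void main(String[] args) {
        // Hypothetical sample text, used only to exercise the method.
        System.out.println(findMatches("My love is a bright rose, my love is not", "MLIA"));
    }
}
```

Exact-phrase and general regular-expression searches then need no special handling: they are simply passed to Pattern.compile directly.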

They also looked to expand the range of ciphers that were checked. They wrote a program that performed the encoding and decoding of a range of ciphers automatically, allowing them to test a wide variety of ciphers in a short period of time. This also led them to use a "Cipher Cross-Off List" to keep track of which possibilities had been discounted, which were untested, and which had withstood testing to remain candidates. Using their "CipherGUI" and this cross-off list, they were able to test over 30 different ciphers and narrow down the possibilities significantly. Only a handful of ciphers were inconclusive, and ciphers invented after the Somerton Man's lifetime could be discounted automatically.
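The cross-off list is essentially a three-state record per cipher. A minimal in-memory version could look like the sketch below. Its names are hypothetical and it has no connection to the actual CipherGUI.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class CipherCrossOffList {

    enum Status { UNTESTED, DISCOUNTED, CANDIDATE }

    // Insertion-ordered, so the list reads in the order ciphers were added.
    private final Map<String, Status> status = new LinkedHashMap<>();
    private final Map<String, String> reason = new LinkedHashMap<>();

    // Add a cipher as untested (no effect if it is already on the list).
    void add(String cipher) {
        status.putIfAbsent(cipher, Status.UNTESTED);
    }

    // Record the outcome of testing a cipher, with a short justification.
    void record(String cipher, Status outcome, String why) {
        status.put(cipher, outcome);
        reason.put(cipher, why);
    }

    Status statusOf(String cipher) {
        return status.get(cipher);
    }

    String reasonFor(String cipher) {
        return reason.getOrDefault(cipher, "");
    }

    // All ciphers currently in the given state, in insertion order.
    List<String> withStatus(Status wanted) {
        List<String> out = new ArrayList<>();
        for (Map.Entry<String, Status> e : status.entrySet()) {
            if (e.getValue() == wanted) {
                out.add(e.getKey());
            }
        }
        return out;
    }
}
```

Keeping the justification next to each verdict means a later group can audit why a cipher was crossed off, rather than just that it was.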

Structure of this Report

With so many disparate objectives, each a self-contained project within itself, the rest of the report has been divided into sections dedicated to each aspect. There is then a section on how the project was managed, including the project breakdown, timeline and budget, and the sections are finally brought back together in the conclusion, which also indicates potential future directions that this project could take.

Methodology

Statistical Analysis of Letters

The initial step of this project involved reviewing the work accomplished in previous years and setting up tests to confirm that its results could be reproduced. The main focus of this verification was the analysis of the code, and the statistical analysis that led to those results. The results from previous years suggested that the code was most likely in English and represented the initial letters of words. To test this theory and to create some baseline results, we searched the online Oxford English Dictionary and extracted the number of words beginning with each letter of the alphabet. From this data, we calculated the frequency of each letter as the initial letter of English dictionary words.

The results show that there were many inconsistencies with the Somerton Code, but they can be used as a baseline for comparison with other results. The main issue with these results is that most of the words included are not commonly used, so they are a poor representation of the English language. How often a letter actually begins a word in use is not related to the number of dictionary words beginning with that letter. To produce more useful results for comparison, we found a source text that had been translated into over 100 different languages [8]. The text was the Tower of Babel passage from the Bible. It consists of approximately 100 words and 1000 characters, which made it a suitable size for testing. [5] To create the frequency representation for these results, we wrote a Java program that took in the text for each language and output the number of occurrences of each letter. This process was repeated for 85 of the most common languages available. From this data, we determined the standard deviation and the sum of differences relative to the Somerton Man Code.
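A sketch of the counting and comparison steps is given below. It rests on three assumptions of ours. First, only the letters A to Z are counted, so accented letters in other languages are ignored. Second, "sum of difference" means the sum of the absolute per-letter differences. Third, "standard deviation" means the population standard deviation of those per-letter differences. The report's actual program is not reproduced here, and the names are hypothetical. The main method uses the opening verse of the Tower of Babel passage (King James Version) purely as an example input.

```java
import java.util.Locale;

class LetterFrequency {

    // Relative frequency of each letter A-Z in `text` (case-insensitive; other characters ignored).
    static double[] frequencies(String text) {
        double[] freq = new double[26];
        int total = 0;
        for (char c : text.toUpperCase(Locale.ROOT).toCharArray()) {
            if (c >= 'A' && c <= 'Z') {
                freq[c - 'A']++;
                total++;
            }
        }
        if (total > 0) {
            for (int i = 0; i < 26; i++) {
                freq[i] /= total;
            }
        }
        return freq;
    }

    // Sum of absolute per-letter differences between two distributions.
    static double sumOfDifference(double[] a, double[] b) {
        double sum = 0;
        for (int i = 0; i < 26; i++) {
            sum += Math.abs(a[i] - b[i]);
        }
        return sum;
    }

    // Population standard deviation of the per-letter differences (a_i - b_i).
    static double stdDevOfDifference(double[] a, double[] b) {
        double mean = 0;
        for (int i = 0; i < 26; i++) {
            mean += (a[i] - b[i]) / 26;
        }
        double variance = 0;
        for (int i = 0; i < 26; i++) {
            double d = (a[i] - b[i]) - mean;
            variance += d * d / 26;
        }
        return Math.sqrt(variance);
    }

    public static void main(String[] args) {
        // The code as read by the 2009 group, compared against an example sentence.
        double[] code = frequencies("WRGOABABD WTBIMPANETP MLIABOAIAQC ITTMTSAMSTCAB");
        double[] sample = frequencies("And the whole earth was of one language, and of one speech.");
        System.out.printf("sum of difference = %.4f%n", sumOfDifference(code, sample));
        System.out.printf("std dev of difference = %.4f%n", stdDevOfDifference(code, sample));
    }
}
```

Both distributions sum to 1, so the mean difference is zero. Under these definitions the standard deviation therefore reduces to the root-mean-square difference. As a result, the two measures often, but not always, rank languages in the same order.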

These results were quite inconsistent with the previous results, as well as with each other. The top four results for both the standard deviation and the sum of differences were Sami North, Ilocano, White Hmong and Wolof, although in different orders. These languages are geographically scattered, being spoken in northern Scandinavia, Southeast Asia and West Africa. This suggests that none of them is likely to be the language used for the Code. Previous studies suggested that the Code represents the initial letters of words, so this theory was tested as well. The process was repeated with a modified Java program that recorded the first letter of each word in the text. From these results, we calculated the frequency of each letter.
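The modification amounts to counting only the first letter of each word. In the hypothetical sketch below, words are assumed to be runs of Unicode letters, optionally joined by apostrophes. Words beginning with a letter outside A to Z are left out of the totals, which is our own design choice.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class InitialLetterFrequency {

    // A word is a run of Unicode letters, optionally joined by apostrophes ("don't" is one word).
    private static final Pattern WORD = Pattern.compile("\\p{L}+(?:'\\p{L}+)*");

    // Relative frequency of each letter A-Z as the first letter of a word.
    // Words starting with a letter outside A-Z (e.g. an accented letter) are left out of the totals.
    static double[] initialFrequencies(String text) {
        double[] freq = new double[26];
        int total = 0;
        Matcher m = WORD.matcher(text);
        while (m.find()) {
            char first = Character.toUpperCase(m.group().charAt(0));
            if (first >= 'A' && first <= 'Z') {
                freq[first - 'A']++;
                total++;
            }
        }
        if (total > 0) {
            for (int i = 0; i < 26; i++) {
                freq[i] /= total;
            }
        }
        return freq;
    }
}
```

The resulting array is then compared with the letter frequencies of the code, using the same sum-of-differences and standard-deviation measures as for the whole-text analysis.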

The results described in the figures above are more consistent than before. The top three languages for both the sum of differences and the standard deviation were, in order, Ilocano, Tagalog and English. The first two are languages of the Philippines. All the other information about the Somerton Man suggests he was of "Britisher" appearance, so it is unlikely that he spoke either of them. This leaves English as the next most likely language, and the one most consistent with what is known about both the code and the Somerton Man. This also reinforces the earlier finding that the code most likely represents the first letters of an unknown sentence, consistent with the results of previous years.

Cipher GUI

While evaluating a range of different ciphers, last year's group developed a Java program that could both encode and decode most of the ciphers they investigated. Part of our task this year was to double-check their work. To do this, we encoded and decoded both words and phrases with each cipher and looked for errors. Whilst most ciphers were faultless, we discovered an error in the decryption of the Affine cipher that caused junk to be returned. We investigated possible causes of the error and resolved a wrapping problem: stepping back past the beginning of the alphabet (C, B, A, Z, Y...) was not handled correctly. The cipher now functions correctly in both directions.
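We have not reproduced last year's code here. Instead, the sketch below uses the standard affine definition E(x) = (ax + b) mod 26 to show one common way this kind of wrapping bug arises in Java. The % operator returns a negative remainder for a negative operand, whereas Math.floorMod always returns a value in the range 0 to 25. The class and method names are hypothetical.

```java
import java.util.Locale;

class AffineCipher {

    private static final int M = 26; // alphabet size

    // Multiplicative inverse of a modulo 26, found by trial; it exists only when gcd(a, 26) = 1.
    static int inverse(int a) {
        int r = Math.floorMod(a, M);
        for (int x = 1; x < M; x++) {
            if ((r * x) % M == 1) {
                return x;
            }
        }
        throw new IllegalArgumentException("a must be coprime with 26, got " + a);
    }

    // E(x) = (a*x + b) mod 26
    static String encrypt(String plain, int a, int b) {
        inverse(a); // reject keys that could never be decrypted
        StringBuilder out = new StringBuilder();
        for (char c : plain.toUpperCase(Locale.ROOT).toCharArray()) {
            if (c < 'A' || c > 'Z') {
                out.append(c);
            } else {
                out.append((char) ('A' + Math.floorMod(a * (c - 'A') + b, M)));
            }
        }
        return out.toString();
    }

    // D(y) = a^-1 * (y - b) mod 26.
    // (y - b) is negative whenever decryption steps back past 'A'. Java's % would then give a
    // negative remainder and a character below 'A'; floorMod wraps round to Z, Y, ... instead.
    static String decrypt(String cipher, int a, int b) {
        int aInv = inverse(a);
        StringBuilder out = new StringBuilder();
        for (char c : cipher.toUpperCase(Locale.ROOT).toCharArray()) {
            if (c < 'A' || c > 'Z') {
                out.append(c);
            } else {
                out.append((char) ('A' + Math.floorMod(aInv * ((c - 'A') - b), M)));
            }
        }
        return out.toString();
    }

    public static void main(String[] args) {
        String secret = encrypt("AFFINE CIPHER", 5, 8);
        System.out.println(secret);                // IHHWVC SWFRCP
        System.out.println(decrypt(secret, 5, 8)); // AFFINE CIPHER
    }
}
```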

We also sought to increase the range of ciphers covered. One example we found was the Baconian Cipher, in which each letter is represented by a unique series of (usually five) As and Bs (e.g. A = AAAAA, B = AAAAB, and so on). Although this cipher was known in the Somerton Man's time, the presence of letters other than A and B in the code clearly indicates that it was not used. Even so, the Baconian Cipher would have been a useful addition to the CipherGUI. However, the way the program was structured, together with our lack of familiarity with it, made adding further ciphers difficult. We therefore decided to focus our efforts on other aspects of the project.
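For reference, the sketch below implements the 26-letter variant described above (A = AAAAA, B = AAAAB, ..., Z = BBAAB), written independently of the CipherGUI. It does not implement Bacon's original 24-letter alphabet, in which I/J and U/V share codes, and the names are hypothetical. The couldBeBaconian check captures the argument above: any letter other than A or B rules the cipher out.

```java
import java.util.Locale;

class BaconianCipher {

    // 26-letter variant: a letter's index (A = 0 ... Z = 25) as five binary digits, 0 -> 'A', 1 -> 'B'.
    static String encode(String plain) {
        StringBuilder out = new StringBuilder();
        for (char c : plain.toUpperCase(Locale.ROOT).toCharArray()) {
            if (c < 'A' || c > 'Z') {
                continue; // spaces and punctuation are dropped
            }
            int index = c - 'A';
            for (int bit = 4; bit >= 0; bit--) {
                out.append(((index >> bit) & 1) == 0 ? 'A' : 'B');
            }
        }
        return out.toString();
    }

    // Read the A/B stream five symbols at a time; anything other than A or B is ignored.
    static String decode(String cipher) {
        String symbols = cipher.toUpperCase(Locale.ROOT).replaceAll("[^AB]", "");
        StringBuilder out = new StringBuilder();
        for (int i = 0; i + 5 <= symbols.length(); i += 5) {
            int index = 0;
            for (int j = 0; j < 5; j++) {
                index = (index << 1) | (symbols.charAt(i + j) == 'B' ? 1 : 0);
            }
            if (index < 26) {
                out.append((char) ('A' + index)); // groups 26-31 (BBABA..BBBBB) are unused
            }
        }
        return out.toString();
    }

    // Baconian ciphertext uses only A and B, so any other letter rules it out.
    static boolean couldBeBaconian(String text) {
        return text.toUpperCase(Locale.ROOT).replaceAll("\\s", "").matches("[AB]*");
    }
}
```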

Last year's group did a lot of good work developing a "Cipher Cross-off list". It went through a range of encryption methods and determined whether or not the Somerton Man passage was likely to have been encoded using each of them. We analysed their conclusions and, for the most part, could find no fault with their reasoning. However, they dismissed "One-Time Pads" wholesale, arguing that the frequency analysis of the code did not fit that of an OTP. In doing so, they assumed that the frequency distribution of an OTP would be flat, since the pad would be a random sequence of letters. This holds for most purposes. However, if the Somerton Man had used an actual text as the pad (for example, the Rubaiyat), then the frequency distribution would not be flat. It is, for all intents and purposes, impossible to completely discount OTPs as the source of the cipher. The number of texts that could have been used, even ignoring random sequences of letters, is practically unlimited. An OTP cannot be disproved in the absence of evidence; it can only be confirmed by finding the pad.
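The point about text-based pads can be made concrete with a running-key cipher. This is Vigenère-style addition modulo 26, with the key taken from a book rather than from random letters. The sketch below is ours and assumes that letters outside A to Z are discarded; the names are hypothetical. An English key text is itself far from uniformly distributed, so in general the ciphertext letter frequencies will not be flat either.

```java
import java.util.Locale;

class RunningKeyCipher {

    // Upper-case the input and drop everything except A-Z.
    static String lettersOnly(String s) {
        return s.toUpperCase(Locale.ROOT).replaceAll("[^A-Z]", "");
    }

    // c_i = (p_i + k_i) mod 26, where k_i are successive letters of the key text.
    static String encrypt(String plain, String keyText) {
        return combine(lettersOnly(plain), lettersOnly(keyText), +1);
    }

    // p_i = (c_i - k_i) mod 26
    static String decrypt(String cipher, String keyText) {
        return combine(lettersOnly(cipher), lettersOnly(keyText), -1);
    }

    private static String combine(String text, String key, int sign) {
        if (key.length() < text.length()) {
            throw new IllegalArgumentException("key text is shorter than the message");
        }
        StringBuilder out = new StringBuilder();
        for (int i = 0; i < text.length(); i++) {
            int v = (text.charAt(i) - 'A') + sign * (key.charAt(i) - 'A');
            out.append((char) ('A' + Math.floorMod(v, 26)));
        }
        return out.toString();
    }
}
```

With a truly random key this is a genuine one-time pad. The only change for a book-based pad is where the key letters come from, which is why the flat-frequency argument alone cannot exclude it.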

Web Crawler GUI and Pre-Indexed Web Data

The WebCrawler developed by last year's group is a highly competent, fully functional program that does what it was designed to do very well. However, at first sight it is intimidating, since a range of options must be specified before a search can even be undertaken. A detailed and helpful README file is provided with the WebCrawler, but many users will not consult a manual until they have no other choice. We therefore sought to redesign only the front end of the WebCrawler. The aim was to encourage more experimentation and greater use by people who want their search results as quickly and easily as possible.

Our brief was to design something closer to Google, which is immediately accessible and highly intuitive. To achieve this without a loss of functionality, we abstracted away the more complex options and provided default values for key parameters. As a result, the user only needs to enter a search string to perform a basic web crawl. Users who want to fine-tune the search can do so through an "Advanced" menu. There they can change the web address the search starts from, save the results to a different location, or define a proxy server connection. Once either kind of search has started, the user is shown a results page that displays more matches at a time. This means less scrolling and makes the results more immediately accessible.
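One way to get "simple by default, advanced on request" is a builder whose only required input is the search string. In the hypothetical sketch below, every field name and default value is a placeholder rather than the WebCrawler's real configuration. This includes the example.com start page and the results folder.

```java
import java.nio.file.Path;

// Hypothetical sketch: field names and defaults are illustrative, not taken from the WebCrawler.
final class CrawlOptions {

    final String query;
    final String startUrl;
    final Path outputDir;
    final String proxyHost; // null = connect directly
    final int proxyPort;

    private CrawlOptions(Builder b) {
        this.query = b.query;
        this.startUrl = b.startUrl;
        this.outputDir = b.outputDir;
        this.proxyHost = b.proxyHost;
        this.proxyPort = b.proxyPort;
    }

    // The simple search box only ever needs this: a query, with everything else defaulted.
    static Builder forQuery(String query) {
        if (query == null || query.isBlank()) {
            throw new IllegalArgumentException("search string must not be empty");
        }
        return new Builder(query);
    }

    // The "Advanced" menu maps onto the optional setters below.
    static final class Builder {
        private final String query;
        private String startUrl = "https://example.com/"; // placeholder default start page
        private Path outputDir = Path.of("results");      // placeholder default output folder
        private String proxyHost = null;
        private int proxyPort = -1;

        private Builder(String query) {
            this.query = query;
        }

        Builder startUrl(String url) {
            this.startUrl = url;
            return this;
        }

        Builder outputDir(Path dir) {
            this.outputDir = dir;
            return this;
        }

        Builder proxy(String host, int port) {
            this.proxyHost = host;
            this.proxyPort = port;
            return this;
        }

        CrawlOptions build() {
            return new CrawlOptions(this);
        }
    }
}
```

A basic search is then CrawlOptions.forQuery("MLIAB").build(). The advanced path chains only the setters the user actually changed, so the defaults stay in one place.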

Pre-Indexed Web Data