Final Report 2011

Under Construction

Construction Notespace:

- Due to be finished 23/10/11 11:59pm

- The Introduction to the Introduction section may need to be removed if it repeats the Executive Summary material.

Executive Summary

A man discovered deceased on Somerton Beach in 1948 left many mysteries. None were more prominent than an apparently enciphered code. No one was able to decipher the code, nor identify who the man was or how he died. This remains the situation over six decades later. This project harnesses modern technological capabilities, not available to previous studies, to assist in the investigation of the case, approaching the as yet un-deciphered code from a new angle.

One side of the project has approached the problem as if an answer to the code already exists; it just has not been linked to the case. For this purpose a completely integrated Java-based web crawler with fully customisable pattern recognition capabilities was produced to search the web for answers. It has been designed to accept a broad spectrum of patterns and thus has uses extending well beyond the scope and context of the 2011 project.

A separate facet to the 2011 project was the cryptologic investigation of cipher methodologies that could have been used to generate the code. Over thirty different cipher techniques have been comprehensively examined. Some ciphers have withstood all tests and remain possibilities. The use of software that was developed for the investigation of cipher methodologies was extended to create a unique cryptologic analysis tool. The tool provides the full functionality of all available ciphers, including customisation of keywords and has real-time letter frequency graphical display.

As the project closeout phase progresses, the achievements of the past year can be reflected upon. A comprehensive cipher analysis has contributed to the ongoing cipher investigation surrounding the case, in the process producing a highly usable cryptanalytic software tool. A web crawler with flexible pattern acceptance packaged through an intuitive Graphical User Interface provides a tool to aid future investigations into the case.

The project team is satisfied the project has progressed to its conclusion well and has been a success; on time and on budget, meeting and extending objectives. The project’s software products are complete, thoroughly tested and validated.

Introduction

This project, ‘Code Cracking: Who murdered the Somerton Man?’ concerns an un-deciphered code linked to a man discovered dead on Somerton Beach in 1948. The inspiration for the project is the chance to shed light on this code’s meaning, taking advantage of technology not available when the case was first investigated. The project has two focuses. A cipher analysis; analysing the link between the code and historic ciphers, and web crawling; mining the internet to acquire data consistent with patterns in the code.

Along with providing an analysis of cipher links to the code, the project develops two software products: a cipher analysis tool and a web searching application. The flexible designs of these products facilitate wide-ranging applications beyond the scope of this project.

This is the final report for the project. It describes the processes and outcomes of the project and defines the degree of success to which objectives have been met. The report discusses methodology used, the results achieved and provides an evaluation of the outcomes. For completeness, background information, inspiration and designs from previous reports have been included. The report also includes an analysis of the project management strategies employed in managing progress, mitigating risks and auditing results.

History

The Case

On December 1st 1948 a deceased man was found on Somerton Beach. The conclusion from the autopsy was that his death had not been natural and a poison was the likely cause. At the time an identification of the poison could not be made but a review in 1994 determined it was the uncommon Digitalis[1].

The man possessed no form of identification. His fingerprints and dental records were not found in any international registries. All the labels had been removed from his clothing and thus could not be traced. Further complicating the investigation was eight different “positive” identifications of the man by members of the public before 1950[1].

The man and his origins remain unidentified to this day. He rests in an un-named grave in West Terrace Cemetery, Adelaide.

The Code

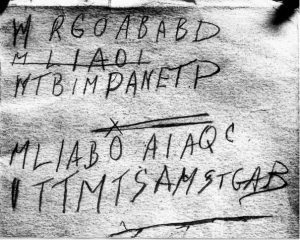

Connected to the unidentified man and the focus of this project is the five-line code in Figure 1. No one has yet extracted meaning from the code despite over 60 years of attempts.

The code was found as a faint pencil marking in the back of a book containing a collection of Persian poems titled The Rubaiyat by Omar Khayyam. A man in Glenelg found the book after it was tossed into the back seat of his car sometime during the night of November 30, 1948. On the last page of the book the words “Tamám Shud”, meaning ended of finished in Persian, had been removed. A small piece of paper with these words printed on it was found in a concealed pocket in the deceased man’s clothing. Later testing confirmed the piece of paper originated from the book[1].

No one has yet determined the meaning of this code and deciphering it provides the basis and inspiration for this project.

Motivation

The fact that no one has been able to solve the case in over 60 years raises the question: Why do we think we can do better? The answer lies in technological advances made in this period. By far the biggest and most critical developments for the 2011 project have been the evolution of computers and the introduction of the Internet.

There exists a possibility that within the vast amounts of data contained in the Internet there is a meaning to the code; it just has not been connected to the case. In order to sort through the data and analyse potential ciphers, the phenomenal processing power of computers will also be vital.

The approach of this project aimed to utilise these advances to approach the code from a new angle.

Project Objectives

At the beginning of the project several broad objectives were established. These were:

- Conduct a comprehensive cipher analysis

- Create an ability to custom search the web

The aim of the cipher analysis was to methodically investigate as many ciphers as possible to determine a valid encryption methodology for the code. This process was to contribute to the ongoing cipher examination of the code.

The second objective aimed to create the ability to custom search and analyse the data available on the Internet with greater control and flexibility than the average internet search engine. The motivation for this was the theory that the true meaning of the code already exists within the data on the web and by exhaustively searching for distinct patterns evident in the code it may be possible to directly identify parts of the underlying message. This objective also included the design aim of making the software generic so that many different search patterns would be accepted; providing applications beyond the scope of the Somerton Man investigation.

It should be noted that the project did not set the objective of cracking the code or solving the case. The code has not been solved in over 60 years, so while the project endeavoured to shed light on the meaning behind the code; success of the project was not dependent on the code being cracked or the case being solved.

Extended Objectives

Efficient progress during the 2011 project provided an opportunity to extend the original objectives. Both project team members in consultation with the project supervisor established the following additional objectives:

In the cipher analysis component a lot of software was being written for investigating cipher links to the code. Rather than archive this software after each cipher was examined, an objective was set to use it to create a centralised cipher analysis tool that intuitively implemented numerous ciphers.

With the aim of designing web search software with numerous applications a need for a user-friendly interface was identified. This spawned the objective of creating an interactive user-friendly GUI from which to the search mechanism could be run and results presented.

Finally, the goal was set to release these useful software applications to the public by making them available on the project's wiki page.

Structure of Remainder of Report

The structure of this report reflects the approach used to conduct the project. Firstly background of the project is given with an overview of the previous studies relevant to the project, software tools, cipher analysis methodology and a conceptual description of a web crawler.

An overview of the strucutral and statistical analysis conducted on the Rubaiyat of Omar Khayyam is included as section 3 followed immediately by a summary of the 2011 cipher investigation as section 4. The Cipher GUI project extension component is discussed in section 5.

The next three sections, sections 6, 7 and 8, summarise the pattern matching software, web crawler development and the integration of the two systems. This is followed by the findings of an extensive investigation conducted with the combined pattern matching and web crawling system in section 9.

The report is concluded with a summary of proposed future directions for the 2011 project, project management tools and responsibilities and a discussion of the project outcomes including the project's significance, innovations, strengths and limitations.

A complete list of references is included at the end of this document with links to other resources associated with the 2011 project.

Background

Previous Studies

Over the years there have been many attempts to shed light the code’s meaning. Of much interest is the analysis performed by Government cryptanalysts in Canberra and two previous projects done by students at the University of Adelaide.

In 1978, the code was examined by Government cryptanalysts in the Department of Defence in 1978. They made three conclusions:

- There are insufficient symbols to provide a pattern

- The symbols could be a complex substitute code or the meaningless response of a disturbed mind

- It is not possible to provide a satisfactory answer[2].

These results appear discouraging, however, this study was done in 1978, prior to the technological advances this project aims to utilise. In light of this the University of Adelaide student projects done in the past two years are more relevant in providing a starting point for our own investigations.

In 2009, the students concentrated on a cipher investigation and structural investigation. Results included concluding that the Somerton code isn’t random; it contains a message. They also made some conclusions regarding ciphers, such as a transposition cipher was not used (the letters are not simply shifted in position) and that the code is consistent with an English initialism – a sequence of English initial letters[3].

The students examining the code in 2010 concentrated on a statistical pattern investigation analysing the everyday occurrence of patterns evident in the code and creating web analysis software able to archive web pages and analyse the contents[4].

Software Tools

This section provides a list of the software tools that were used in the development of the project.

All code development was completed using the Java programming language version 1.6.0_26 and the Java Standard Edition Runtime Environment version 1.6.0_26Cite error: Invalid parameter in <ref> tag. Testing was conducted across three operating systems, Mac OSX Lion, Windows 7 and Ubuntu Linux version 9.10.

Netbeans integrated development environment version 7.0.1 was used for graphical user interface development in combination with Eclipse integrated development environment, versions Galileo, Helios and Indigo.

Multiple Wikipedia webpages were used for documentation and result co-ordination.

Cipher Methodology and Analysis

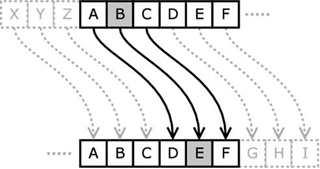

A cipher is any general system for hiding the meaning of a message by replacing each letter in the original message with another letter[5]. The two general types of ciphers are substitution ciphers and transposition ciphers. Substitution ciphers are those in which each letter of the plaintext is replaced by another letter to form the ciphertext, while transposition ciphers are those in which letters within the message retain their values but change position[5].

A simple example of a substitution cipher is the Caesar Cipher. The cipher is formed by shifting each plaintext letter three places along the alphabet to form the ciphertext letter as shown in the figure below.

A simple example of a Caesar Cipher encryption would to be to encrypt the word “face”. By moving each letter along three places (refer to the figure above), the plaintext first letter ‘f’ goes to ciphertext ‘I’, ‘a’ goes to ‘D’, ‘c’ to ‘F’ and ‘e’ to ‘H’ . Thus the plaintext “face” gets transformed to the ciphertext “IDFH”. Decryption can be performed by trivially reversing the process.

Ciphers typically involve a general method that specifies what sort of algorithm is used in encrypting the plaintext, and a key that specifies the exact values used in the algorithm[5]. For example, in the above Caesar Cipher, the algorithm can be considered a shift of the alphabet with the key being 3, resulting in the specific instruction of a shift in the alphabet of 3.

Cipher analysis on substitution ciphers is traditionally performed through a process called Frequency Analysis. This process uses linguistics and statistics, recognising that each letter in a language has its own unique characteristics that can be used to identify it. For example, in the English language, the letter ‘e’ has the characteristic of occurring most commonly; on average 13% of the time[6]. Thus it would make sense to replace the most commonly occurring ciphertext letter with the plaintext letter ‘e’.

Web Crawler

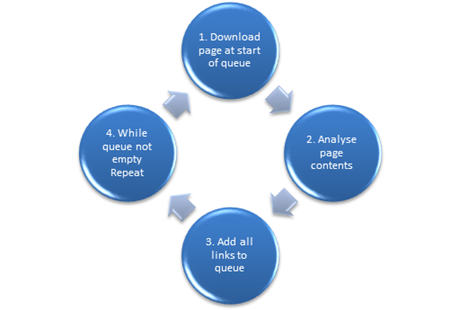

A web crawler is an automated and exhaustive internet explorer. Starting with a “seed” page, web crawlers analyse the content of this seed page before searching downloading all of the web pages that are linked from this page. It then repeats the search process with these pages in a recursive manner[7]. The process can be visualized through the figure below. The biggest dot represents the seed webpage; the four connecting dots represent four links the web crawler finds on the seed page. As it recursively searches each page the web crawler spreads out over the internet.

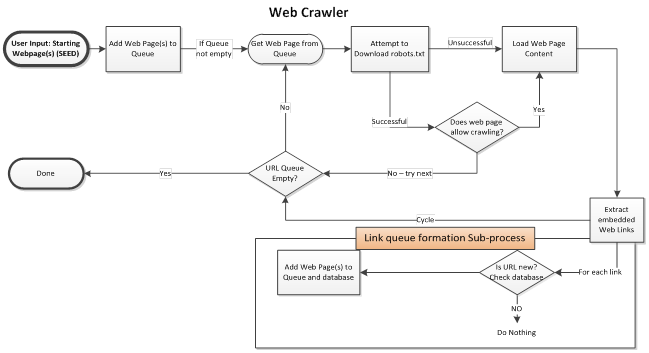

The ability of web crawlers to autonomously explore the web is very powerful. A basic step process for web crawlers is shown in the flowchart below.

Step 2 of the above process chart shows the capability of analysing the data contained on the web page. This is where this project plans to integrate pattern matching software. The complete system forms a personalised internet search engine.

Structural and Statistical Investigation

Concept

An analysis of the text in the Rubaiyat of Omar Khayyam was conducted to investigate if the code had been derived directly from the collection of poems within this book. Three different investigative directions were followed in a statistical analysis of each poem’s structure. The results from this preliminary analysis were used to decide if a subsequent cipher investigation was required in 2011.

One of three conclusions reached in the 2009 honours project identified that the letters in the code are consistent with an English initialism. In acknowledgement of the connection between the code and the Rubaiyat of Omar Khayyam, three hypotheses were derived to statistically investigate initialisms within this book. The cipher investigation component of the 2011 project was initiated with this analysis, as in accordance with the project objectives stipulated above, the results determined if a cryptologic cipher investigation was actually necessary.

The three hypotheses that were considered:

Hypotheses

- The code is an initialism of a poem in the Rubaiyat

- Based on previous studies indicating an English initialism and the fact the code has four (un-crossed out) lines, with each poem being a quatrain (four line poem).

- The code is related to the initial letters of each word, line or poem

- Based on previous studies indicating an English initialism.

- The code is generally related to text in the Rubaiyat

- Based on the links between the Rubaiyat and the code.

Technical Challenges

The two main challenges in this analysis were with the source material.

- Code Ambiguities

- Sample Size

Code ambiguities refer to difficulties in determining which letters some of the handwritten symbols in the code represent; a challenge created by the untidy handwriting. Sample Size refers to the issues encountered due to the limited sample of 44 letters we have to analyse from the code.

Design of Tests

The approach to testing these hypotheses varied, although each used Java text parsing and statistic-gathering code. The first hypothesis was tested through statistically analysing the structure of the Rubaiyat poems and comparing to the Somerton Man code structure. The second and third hypotheses were tested through analysing letter frequencies in the poems using software and comparing these results to Somerton Man code letter frequencies. In the case of Hypothesis 2 frequency data was gathered on the first letter of each poem, the first letter of each line and the initial letter of each word. The third hypothesis similarly analysed letter frequencies of all letters in the Rubaiyat.

Results of Tests

Hypothesis 1: The code is an initialism of a poem. Statistics were gathered on the number of words in each line (first, second, third, fourth) of each poem. The statistics gathered include the mean number of words in each line, the standard deviation, the maximum number of words in a line and the minimum. The results categorized by line number in a Rubaiyat poem are shown in the table below, followed by the statistics from the Somerton Man’s code.

| Line | Mean | Std Dev | Max | Min |

| First | 8.00 | 1.15 | 10 | 5 |

| Second | 7.69 | 1.20 | 10 | 5 |

| Third | 7.88 | 1.06 | 10 | 5 |

| Fourth | 7.87 | 1.31 | 10 | 5 |

| Line | Number of Letters |

| First | 9 |

| Second | 11 |

| Third | 11 |

| Fourth | 13 |

The important result is the maximum number of words in the poem lines. Each line category has a maximum number of words of 10 across all of the 75 poems contained in the Rubaiyat. However, the code has 11, 11 and 13 letters in its second, third and fourth lines respectively, each over the maximum. These results allow Hypothesis 1 to be ruled out, giving the conclusion that the code is not an initialism of a Rubaiyat poem.

Hypothesis 2: The code is related to the initial letters of each word, line or poem. Letter frequency data was gathered on the first letter of each poem, of each line and of each word. This data is plotted against average English initial frequencies and the code letter distribution.

A link between poem initials or line initials and the code can be trivially ruled out. There is a ‘G’ in the code but no line or poem starts with a ‘G’ in the entire Rubaiyat. A link between all initial letters in the Rubaiyat and the code is more difficult to rule out. There is a generally good correlation between English initials and initials in the Rubaiyat (graphed in light blue) as might be expected, but there are significant discrepancies when compared to the code, such as the code clearly having a greater proportion of A’s, B’s and M’s. While a link cannot be ruled out due to the small sample size of the code (44 letters), for the purposes of this project a link has been ruled unlikely.

Hypothesis 3: The code is generally related to the text in the Rubaiyat. This hypothesis was tested by adapting the Java text parser code to generate letter frequency plots for the all letters in the Rubaiyat poems. The results are displayed in the graph below.

While there is very good correlation between the Rubaiyat poems and English text, the letter frequency of the code is substantially different, with significantly larger proportions of M’s, A’s and B’s. Again the sample size of 44 letters for the code restricts our ability to make a conclusion, but for our purposes there is enough evidence to discount a link.

Conclusions

The rejection of these three hypotheses indicates there is no direct unencrypted link between the code and the Rubaiyat, disregarding the weaknesses surrounding the assumptions required with ambiguous letters and the small sample size. This result, combined with the decision that the code was not random[3][4] from the 2009 and 2010 Honours Projects led to the conclusion that a comprehensive cipher analysis was required in the 2011 project. It should be noted that these results do not rule out all connections between the code and the Rubaiyat; just unencrypted ones.

Cipher Investigation

Concept

The Cipher Investigation component of the project provides a comprehensive cipher analysis of the Somerton Man Code through the methodical investigation of as many ciphers as was possible, to determine whether they had been used in generating the Somerton Man code. In the process, contributing to the ongoing cipher investigation associated with the case.

Previous Work

Two previous attempts at testing ciphers against the code were considered in 2011. These were the examination within the 2009 University of Adelaide Honours Project and the Department of Defence investigation conducted in 1978. The cryptanalysts from the Department of Defence did not derive any definitive results, they concluded[2]:

- There are insufficient symbols to provide a pattern.

- The symbols could be a complex substitute code or the meaningless response to a disturbed mind.

- It is not possible to provide a satisfactory answer.

In 2009, the project group investigated transposition ciphers, the One Time Pad, the Vigenère Cipher and the Playfair Cipher. Their conclusion was that transposition ciphers warranted no further investigation, the Vigenère and Playfair Ciphers were unlikely to be have been used but the One Time Pad was plausible[3]

This year the One Time Pad, the Vigenère Cipher and the Playfair Cipher have been re-examined in greater depth and in acknowledgement of the 2009 results, no additional ciphers were tested that used a transposition methodology.

Technical Challenges

The key technical challenges faced in the Cipher Analysis portion of the project are identified below.

- Cipher Possibilities

- Sample Size

- Code Ambiguities

- Circumstantial Unknowns

The first challenge, cipher possibilities, refers to the almost infinite number of potential ciphers that could have been used; there are many different algorithms to transform plaintext to ciphertext. For example, considering just simple mono-alphabetic substitution ciphers, there are 26! (factorial) possible ciphers; that is over 400,000,000,000,000,000,000,000,000 or 400 trillion trillion ciphers[5].

The sample size of the source material, 44 letters, also adds challenges. Frequency Analysis was introduced in the Background section as being the traditional method of solving substitution ciphers, however it relies on an accurate representation of the letter frequency distributions. This typically requires a sample of at least 100 letters.

Code ambiguity refers to some of the letters in the Somerton code being hard to identify. For example, the first letter in the first and second line in the figure below could be M’s or W’s. Code ambiguities added further challenges to the cipher analysis.

Finally, the circumstantial unknowns of the case introduce more complications. It is not known what nationality the Somerton Man was so we cannot be sure what language any hidden message would be in.

Methodology

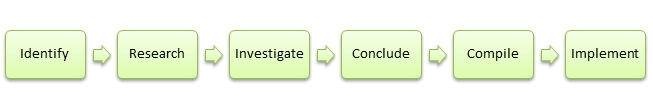

Cipher analysis was done through a methodology established at the beginning of the project.

Initially potential ciphers were identified by broad research aimed at isolating relevant ciphers. Relevance was determined as the cipher meeting several pre-conditions:

- The cipher needed to have been invented and in use prior to the time the unknown man was found in 1948. Thus no cipher invented after December 1 1948 was included in the 2011 investigation as they can be trivially discounted.

- It must be possible to perform the cipher methodology by hand. This acknowledges the original code written in the back of the Rubaiyat of Omar Khayyam whilst also reflecting that computers were not in common use in 1948. Mechanically derived codes such as those generated by the Enigma machine were not included.

- In accordance with the results from 2009, the ciphers were not to use a transposition-based methodology.

Once identified, detailed research was conducted into each cipher determining methods for its use. Following the research, each cipher was investigated in its potential relationship to the Somerton Man code. The investigative methods that were used depended on the function and scope of the individual cipher. These ranged from exhaustive software testing to statistical analysis, structural analysis and frequency analysis.

The product of the investigation stage was a conclusion: whether the cipher could be ruled out as being used in encrypting the code or not. In the Compilation stage, the results of the previous three sections, namely Research, Investigation and Conclusion were compiled onto a central database; this database being a sub-page on the project’s publicly accessible wiki page known as the Cipher Cross-off List.

The final stage was to implement the cipher in the cipher analysis software tool. This software tool is referred to as the CipherGUI in this report.

Results

The result of the cipher investigation is a comprehensive list of approximately 30 different ciphers tested complete with detailed reasoning. The following table summarises these results.

For complete descriptions of each cipher and the full results and data, a visit to the centralised official database; the Cipher Cross-off List is strongly recommended.

| Cipher | Test techniques | Status | Student |

| ADFGVX | Structural analysis | Disproven | Steven |

| Affine | Direct decryption | Disproven | Patrick |

| Alphabet reversal | Direct decryption | Disproven | Patick |

| Auto-key | Direct decryption | Disproven | Steven |

| Baconian | Structural analysis | Disproven | Patick |

| Beaufort | Frequency analysis | Unlikely | Steven |

| Bifid | Frequency analysis | Disproven | Steven |

| Book | Structural analysis | Disproven | Patrick |

| Dvorak encoding | Direct decryption | Disproven | Patrick |

| Flat-frequency | Statistical analysis | Disproven | Patrick |

| Four square | Structural analysis | Unlikely | Patrick |

| Gronsfeld | Structural analysis | Disproven | Steven |

| Hill | Frequency analysis | Disproven | Steven |

| Homophonic substitution | Structural analysis, Statistical analysis | Disproven | Patrick |

| Keyword | Frequency analysis | Possible | Patrick |

| Nihilist | Structural analysis | Disproven | Steven |

| Null | Structural analysis | Unlikely | Patrick |

| Number based | Structural analysis | Disproven | Patrick |

| One time pad | Statistical analysis | Unlikely | Patrick |

| Pigpen | Direct decryption | Disproven | Patrick |

| Playfair | Structural analysis | Disproven | Patrick |

| Playfair (Double) | Frequency analysis | Unlikely | Steven |

| Porta | Frequency analysis | Unlikely | Steven |

| Rail fence | Direct decryption | Disproven | Patrick |

| Shift | Direct decryption | Disproven | Steven |

| Templar | Direct decryption | Disproven | Steven |

| Trifid | Frequency analysis | Unlikely | Steven |

| Trithemius | Direct decryption | Disproven | Steven |

| Two square | Structural analysis | Unlikely | Patrick |

| VIC | Structural analysis | Disproven | Steven |

| Vigenere | Frequency analysis | Unlikely | Steven |

The table shows that the majority of ciphers analysed have been ruled out, with most of those that remain declared unlikely. Only one cipher was determined to be plausible; the Keyword Cipher which yielded a profile consistent with the Somerton Man code. Once again, for more extensive details, please refer to the Cipher Cross-off List.

CipherGUI

A centralised cipher analysis tool implementing multiple ciphers with an interactive and easy-to-use interface.

Concept

The CipherGUI was a concept developed by the team that was initially not part of the objectives but became a major section of the project with encouragement from the project supervisor. The significant amount of research and time spent creating software in order to investigate each cipher’s relation to the Somerton Code in the Cipher Analysis section provided a unique opportunity to create a lasting product that could take advantage of this work, in the form of a Graphical User Interface (GUI) merging multiple ciphers into one centralised intuitive application.

Technical Challenges

The following technical challenges were identified for the CipherGUI.

- Merge multiple ciphers intuitively

- Providing interactive functionality

- Implementing hand-designed ciphers

Merging multiple ciphers into one centralised tool with an easily understood interface created challenges. Ciphers are inherently different to each other, presenting alternative interfaces to the outside world. They use different algorithms and accept different key types, returning results in different structures.

Another challenge was providing interactive functionality, referring to allowing the user to choose key values or keywords (where appropriate) and providing feedback in the form of real time analysis tools. Converting older ciphers designed for use by hand into software form also provided difficulties.

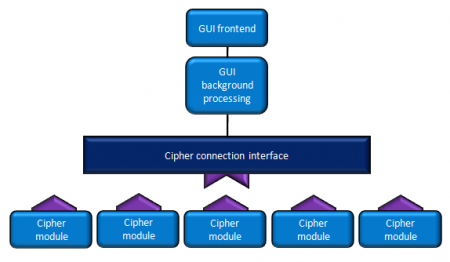

Design

The key design feature to meet these technical challenges was the use of a modular design approach. This allowed multiple ciphers to be easily integrated into the central tool through just five lines of code. The issue of providing interactive functionality to ciphers with inherently different interfaces was solved through the allocation of a unique central panel set by each individual cipher, enabling descriptions, instructions, different key entry types and graphical aids. Real-time letter frequency graphs were also included to provide an analysis tool as well as dynamic tables and cipher alphabets. Zoom features on larger graphical aids were also designed.

Other design decisions included restricting language used to Java as this was the language project members had experience in and the use of the freeware Eclipse IDE minimising budget impact.

Another advantage of the modular structure of the CipherGUI software is that additional cipher methodology can be easily integrated in the future providing there is conformity to the established connection interface used by all exisitng individual cipher implementations. A conceptual software map is shown in Figure 6.

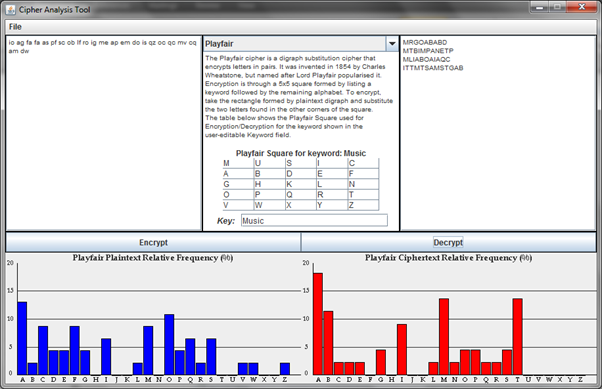

Implementation

A modular implementation approach was taken in which, once the base functionality of the CipherGUI was coded, each cipher could be easily integrated by setting the encryption and decryption algorithms and building the unique central panel as described above. Figure 7, below, shows a screenshot of the working application. A large selection of ciphers is available via the dropdown list above the unique central panel, with areas designated for plaintext, ciphertext and frequency plots.

The screenshot shows the Playfair Cipher in use. The user of the program has specified the input key “Music” and the cipher-square in the central panel has updated to reflect this choice of keyword. Letter frequencies of the ciphertext and plaintext are displayed in the graphs.

Testing

With the modular design, testing on cipher implementations was also done in a modular manner. Research from the cipher investigation stage provided comparative examples and boundary cases were also considered. The simple modular design meant there were few issues encountered during testing. The Testing phase also included successful cross-platform compatibility testing between Unix, Windows and Apple operating systems.

Pattern Matcher

Concept

The pattern matching software was the first module of the internet search application defined in the 2011 project objectives. The purpose of the pattern matching component was to analyse the contents of web pages for given input patterns. It was important that the pattern matching software interfaced with the web crawler module, accepting web page content and returning results to the centralised user interface, for later system integration.

Previous Work

The project group in 2010 put extensive work into creating pattern matching software able to analyse web pages. The three main pattern matching functions of the software are:

- FindExact – matches exact text patterns

- FindPattern – match patterns, such as @#@# matching ERER

- FindInitialism – find exact matches considering only initial letters[4].

While the 2010 implementation does function, this year’s project aimed to completely redesign and expand some of these functions. Flexibility and modularity were considered to be lacking in the 2010 implementation which could use only pre-defined patterns. In 2011 the software would enable patterns to be dynmaically specified which would enhance usability.

Technical Challenges

Key technical challenges identified for the pattern matching module included:

- Handling special cases

- Upper and lower alphabet cases and recognising when a pattern existed that used a combination.

- Recognising patterns separated by punctuation and whitespace (spanning multiple words)

- Acknowledging patterns spanning multiple lines

- The Wildcard character.

- Providing interactivity to the pattern detector

Pattern matching programs should be flexible in that they can detect a pattern no matter what special case applies. In meeting these requirements, the algorithms should take into account that text could be in different capitalisations, separated by punctuation, split across lines or separated by other types of whitespace.

The implementation of a wildcard character into pattern matching algorithms provided another technical challenge. The need for wildcard characters was driven by the existence of ambiguities in letters in the Somerton Man code (see Cipher Analysis Technical Challenges).

Finally, the desire to design an interactive pattern detecting algorithm that was not limited to pre-set cases as in the 2010 version created intricate technical challenges. The software in 2010 was limited by only detecting patterns specified by symbols ‘@’ and ‘#’ and only of lengths 3 and 4; this was done through an exhaustive list. The challenge of 2011 was to allow for any characters to be used for the pattern specification with any pattern size.

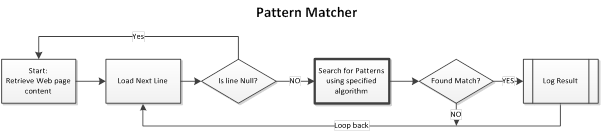

Design

The overall design of the pattern matching module is shown in the diagram below.

The bolded process represents the application of a search algorithm which is the key section of the pattern matcher. The following search algorithms have been designed for 2011:

- Exact

- Searches for exact sequence of characters specified (ignoring capitalisation). For example, a search for “ERER” would match “Gatherer”.

- Pattern

- Searches for a pattern as specified by characters entered. For example, a search for “HDHD” would match both “Gatherer” and “gbhdhdk”. The reasoning for this pattern algorithm is it is able to detect simple mono-alphabetic substitution ciphers; a likely candidate for the Somerton Code.

- Initials

- Searches only initial letters. Can be implemented in conjunction with both Exact and Pattern searches (i.e. ExactInitial and PatternInitial). For example, a search for “HDHD” in PatternInitial would match “Almond bread and butter”. This was implemented because previous studies have suggested the code is likely to be an initialism.

- Regular Expression

- Searches for any user defined Regular Expression (or Regex). A Regular Expression is a concise and flexible means for matching string patterns[8]. Regexes are very powerful; alone it can implement all of the above search functions. For example, a search for “([Ee])([Rr])\2\1” would be identical to the Exact search for “ERER”. The disadvantage being the technical knowledge required to use regexes. The Regular Expression searching function takes advantage of inbuilt Java classes.

The ability to have unknown or wildcard for characters represented as “*” –asterisk- was also designed in the non-regex search functions. Java was chosen as the programming language to maintain compatibility with the other modules.

Implementation

To improve regularity for ease of modification and integration into the web crawler, the search algorithms were implemented to use a Java-defined interface, SearchManager, which specified a search function, to-String function and results publication function. All searching programs use this interface.

The implementation of the Exact search function uses a simple stepping process, checking each character to see if it is valid in building the exact pattern (or a wildcard). The search function for Pattern is much more complex, beginning by building the pattern from the input parameter before stepping through and attempting to match the identified pattern.

The search function for Initial considers only letters not following other letters to mimic the behaviour of an initial letter. The implementation allows it to be easily integrated with Exact and Pattern.

There was a two-fold benefit to the use of Java as the development language. It provided compatibility with the Java based web crawler, as will be discussed in the following section and it enabled the use of the advanced text handling capabilities inherent in the language, which included the inbuilt library for regular expressions which was used directly in that search functionality.

Testing

The testing process for the pattern matching module followed a majorly white-box approach. Each search algorithm was subjected to scenario analyses using both web-page content and self-generated test files design to test specific scenarios. Boundary cases such as patterns spread over separate lines were also thoroughly tested with internal workings verified to be functioning as expected.

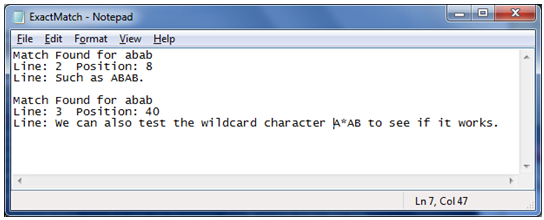

An example of a simple test run on a test file investigating the Exact search algorithm as well as the wildcard function is shown below.

Web Crawler

Concept

The web crawler is the second module of the internet search application defined in the Objectives. The purpose of the web crawler module is to autonomously browse websites as explained in the Background Theory section. This web crawler module must interface with the pattern matcher module, passing web page content and accepting results to deliver to the centralised user interface. The requirements of the web crawler included:

- Java-coded to maintain compatibility with other modules

- Accept a seed URL from which to explore

- Download pages linked in the page being explored

- Not revisit pages already visited

- Abide by ethical web crawling practices.

Ethical web crawling practices refer to not overloading servers with repeated requests and not exploring sections of internet pages the domain determines should be off-limits to web crawlers[7].

Previous Work

In 2010, the project group aimed to create a similar product to what this project set out to design; an application that could download internet pages and pattern analyse them. The 2010 solution was a two-stage process: a web archive tool that would mirror copies of web pages in local directories and a pattern matching module to analyse the stored pages at a later time. This was done using open source software program, HTTRACK.

In 2011, a slightly different approach was taken, aiming to create a real-time integrated solution that analysed web content as it browsed, with a more user-friendly layout and function.

Technical Challenges

Technical challenges encountered in the Web Crawler module included the following:

- Robots Exclusion Protocol

- Webpage content extraction

- Multithreading

- Crawling https secure sites

- Accessing the Internet through secured proxies that require a username and password for use.

Robots Exclusion Protocol refers to the policy governing ethical behavioural of web crawlers. The policy for each site is stored in a text file named robots.txt (available at <domain>/robots.txt. The challenge this provides web crawlers is reading the robots.txt file and obeying any instructions limiting crawling across that domain.

In interfacing to the pattern matcher module, the web crawler is required to supply the contents of each webpage it crawls. In addition to this, any html code should be ignored and not passed. The complex nature of web crawlers meant parallel processing was required through multithreading. This introduced the technical challenge of controlling threads externally. Finally, providing the ability to access encrypted sessions or https (secure) sites is a challenge that is yet to be overcome.

Currently the web crawler is unable to function in an operating environment where access to the interent is secured by a proxy that requires a username and password. An example of this type of domain is the University of Adelaide student computer accounts. An update to the web crawler has been designed to remedy this and will be implemented prior to project closeout.

Design

The design for the web crawler process is explained by the diagram below.

The design starts with a seed from which it attempts to load the protocol definition file robots.txt. If robots.txt does not exist or does exist but doesn’t limit crawling web page content is loaded from which embedded links are extracted. These links are added to the link queue via a sub-process as the thread returns to attempting to load the next web page in the queue.

Implementation

An early realisation by project members, Steven and Patrick, was that there was not enough time to implement a complete web crawler given the wide scope of the entire project. Thus an Off the Shelf (OTS) solution that met the requirements was sought.

After extensive research primarily by Steven and investigative testing by Patrick, the Java-based crawler crawler4j was chosen. This choice was made because crawler4j met the specified requirements, was freeware and open source with no legal implications.

Testing

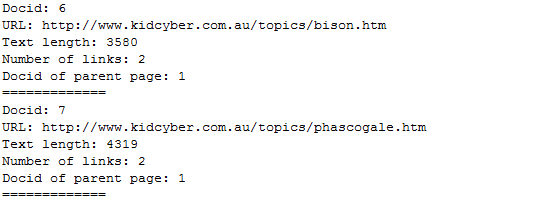

Given the OTS crawler4j provides fully functioning support, the main testing required was ensuring it met requirements listed above. Due to the OTS nature of the crawler, a Black-box testing approach was used with an exploratory slant. The requirement of a Java-coded crawler was trivially satisfied and the ability to accept seed websites was also confirmed. Investigation revealed crawler4j supported polite web crawling, obeying the robots.txt file and providing parameters to limit request rates. An in-built function in the OTS crawler that returned webpage content with all html code removed was also validated. crawler4j functioned as research indicated it should, meeting all requirements. A screenshot of the command-line web crawler running over some linked pages during the testing phase is shown in the figure below.

Testing also revealed some additional useful functionality provided by the crawler4j interface. This included parameters to limit: link depth explored, number of pages searched and domain. These features proved especially useful in the testing phase of the following System Integration section and have also been incorporated into the product for use by the customer.

System Integration

Concept

The purpose of the system integration was to interface between the pattern matcher and web crawler modules in order to create a functioning autonomous internet search application. As set out in the Extended Objectives, a goal was to provide access to this application through an intuitive GUI. The system integration also involved the design and implementation of this GUI.

Technical Challenges

System Integration introduced the following challenges:

- Multithreading thread control

- Pattern Matching generics

- Intuitive GUI design

- Internet access proxies

The access of the crawler through a GUI meant the GUI Java thread needed control of the web crawling threads, providing some multithreading challenges. Generic object use between the different pattern matching algorithms also introduced a challenge. This was solved as noted in the Pattern Matcher section with the introduction of the SearchManager Java interface.

Other challenges came from the designing of a GUI providing all necessary input areas for a user to run a web search and from internet proxy issues which were encountered accessing the web from some locations.

Design

The design of the integrated system is explained by the diagram below.

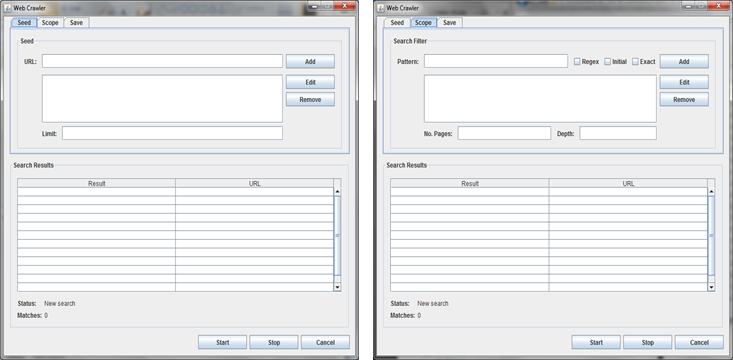

The design of the GUI for the system was primarily done by Steven using the Netbeans IDE GUI development toolkit. The requirements of the user interface included allowing the user to specify: the seed website(s), the pattern and pattern type being searched for, and a result logging location.

The design of the GUI can be seen below. The GUI design uses three separate tabs for simple viewing and operation of the software's functionality. The first tab, designated the seed tab, the second tab the pattern tab and the final tab specifies logging locations. There is a substantial area shared between each tab (the bottom half of the GUI) to which results are returned. As required, the pattern tab also allows the type of pattern to be selected via the checkboxes (Regex, Initial, Exact) shown. The third tab is not shown here.

Implementation

The integration of the pattern matching module into the web crawler module was achieved through utilising Java generics with the SearchManager interface as defined in the Pattern Matcher section. The OTS crawler4j provides a function to retrieve web page content and this was used to pass page content to the pattern matcher.

The integration of the system into the GUI was somewhat more involved. A multi-threading solution was required to enable control of the web crawler through the GUI as discussed in the Technical Challenges.

The Testing phase of the Web Crawler module revealed additional functionality provided by the OTS crawler. During the implementation phase, some of these features were integrated into the GUI to add a greater dimension of user control. These features were “Limit” on the Seed tab, which limits crawling to web page URLs starting with the specified limit; “No. Pages” on the Pattern (Scope) tab, which stops the crawler after the specified number of page downloads; and “Depth” on the Pattern tab, which defines how deep the crawler goes into a stack of links before giving up on that line of inquiry. These features can be seen in the Design images, above.

Testing

The modular testing of the pattern matcher and web crawler meant following validation of integration success, testing focussed on the GUI. The testing process used was primarily grey-box, with websites and search patterns being specifically selected. A restriction of the OTS crawler meant it was not possible to test crawls on locally stored pages and as such live websites had to be used. The testing process did reveal some issues regarding control of the web crawler through the GUI resulting in the multi-threading solution discussed above. Cross-platform compatibility was also successfully verified. Testing on University machines highlighted a proxy issue. While not catastrophic, it adds complexity to the user who must specify proxy settings. Overall, the testing process proved the system met requirements and worked as expected.

Web Crawler Investigation

Concept

The directed purpose for creating the web crawler search application was to see if it could be used to search for patterns evident in the Somerton Code. This section addresses the use of the completed system to perform a trial investigation.

Technical Challenges

The main challenges in this trial investigation were:

- Extracting relevant patterns from code

- Identifying seed websites

The choice of an appropriate pattern to search for is a key element in determining the quality of results. As such, great care should be used so as not to waste time and other resources on searches for patterns that don’t provide usable results. Similarly, the seed webpage should be well chosen. Given the excessive number of pages available on the internet, this trial investigation will examine only a miniscule proportion and therefore a relevant seed is important.

Design

The Somerton Code, shown to the right, demonstrates distinct patterns; for example there is “ABAB”, “TTMT”, and “AIA”. These patterns are bounded by letters which are hard to identify (e.g. first letter of first and second lines - W’s or M’s) or may be crossed out (such as the O in the third line).

Several test cases were designed to investigate theories regarding the code.

- Pattern Match for “ABAB”, “TTMT”, “AIA”, “TTMTSAMST”. Based on the theory of a substitution cipher. Pattern matching would be able to detect words with these patterns.

- Exact Initial Match for “RGOABAB”, “TBIMPANETP”, “MLIAB”, “AIAQ”, “TTMTSAM”. Based on the English Initialism theory.

These sequences are designed to avoid ambiguous letters evident in certain sections of the code.

Seed websites that have been chosen for this trial investigation are poem archives. The reasoning behind this is the structure of the code suggesting a four line poem is possible, along with the fact it was found in the back of the Rubaiyat of Omar Khayyam; a book of poems.

Results

Searches for Pattern matches to the shorter identified patterns (“ABAB”, “TTMT”, “AIA”) returned too many results for significant analysis in the time available. The longer pattern (“TTMTSAMST”) returned no results. In future examinations it is possible the many results for smaller patterns could be analysed to determine frequencies and likelihoods.

Searches for the initial letter sequences were more useful with fewer hits. Crawling approximately 50,000 websites returned only one significant result, a poem titled “My Love Is A Butterfly” by Katerina Yanchuk[9], for the initialism “MLIAB”. The poem can be viewed here. Analysis has revealed no other links to the Somerton code in this poem and as such the match is considered a coincidence. A screenshot of the successful match is provided in the following thumbnail.

Conclusion

The investigation with the web crawler has been extremely limited due to time and resource constraints towards the end of the project. Only a few searches were executed over a tiny portion of the internet. Other limitations included the use of a wireless internet connection meaning only 5,000 pages per hour were analysed.

The limited results do not rule out the theory that the pattern matching web crawler has the capacity to find the message behind the code. A significant pattern matching result was identified however has been assessed as a coincidence. Potential future work could look at distributed crawling using an Ethernet connection to speed up the process.

Future Development

Cipher Analysis

The cipher analysis can be expanded in future years. While as many ciphers as possible were investigated in 2011, there are many more potential possibilities. The cipher cross-off list provides a solid foundation with which the investigation can be built in future projects. If the investigation is continued there is the associated opportunity to expand the CipherGUI software. The modular structure designed by the 2011 team enables the functionality of many more ciphers to be easily integrated into the software provided the encryption and decryption methodologies are implemented in the Java programming language and conform to the established interface. Additional analysis features could also be integrated into the CipherGUI such as the implementation of a Frequency Analysis algorithm to attempt to solve substitution ciphers.

Web Crawler

There are several directions that future projects can extend the combined pattern matching web crawling software developed in 2011. A natural progression envisaged by the current project team is to further enhance the data mining capabilities of the web crawler. This could be achieved by using the distributed research computing system of the University of Adelaide. A reconfiguration of the current web crawler, which operates using a single computer, would be required. Another advantage to this proposal is that the storage capacity for search results would be greatly improved enabling more data to be collected. With an eye to the future, the rapidly increasing levels of images used on the web suggest implementing image analysis into the crawler could also be an innovative idea.

Another possible extension to the web crawler component of the project is the implementation of processing algorithms to analyse collected search data. For patterns that occur more commonly within English text this will provide an efficient method of extracting useful information from the acquired data. The code investigation process could be further streamlined by including statistical analysis capabilities into these analytical algorithms.

In regards to the use of the web crawler to investigate the Somerton Man code, much potential work remains. This year, only a brief prototype-style investigation was launched due to time constraints towards the end of the project. There remains the possibility that extensive crawling for patterns could unearth the hidden message behind the Somerton Code. As such, a more intensive data gathering investigation could be launched including a statistical analysis of results using the Web Crawler produced this year.

Project Management

Timeline

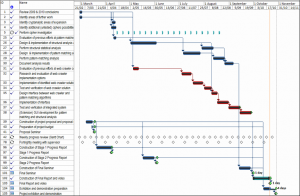

The Gantt chart for the project, as of 22/10/11, is available from the thumbnail below. Progress is on schedule for the remaining tasks. The Gantt chart has needed little revision since the Stage 2 Progress Report, with tasks and allocations progressing mostly on schedule. A list of milestones and the dates they were met is shown in the table below the Gantt chart and a discussion follows.

| Milestone | Description | Due | Met | Status |

| Cipher and Structural Investigations | ||||

| Analysis of Rubaiyat | Report on Rubaiyat links complete | 08/08/11 | 01/06/11 | Completed ahead of schedule |

| Cipher Identification | Cipher List compiled | 10/04/11 | 17/03/11 | Completed ahead of schedule |

| Cipher Investigation | Cipher List fully investigated | 16/08/11 | 30/08/11 | Completed behind schedule |

| (Extension) CipherGUI | Passes final tests | 01/10/11 | 26/10/11 | Completed ahead of schedule |

| Web Crawler Development | ||||

| Pattern Matcher module | PM successfully detects patterns and returns results | 08/08/11 | 15/07/11 | Completed ahead of schedule |

| Web Crawler module | WC self-navigates the web | 08/08/11 | 08/08/11 | Completed on schedule |

| System Integration | Software retrieves web pages and matches patterns | 17/09/11 | 17/09/11 | Completed on schedule |

| (Extension) GUI complete | Able to run searches and view results from GUI | 25/09/11 | 27/09/11 | Completed behind schedule |

| Project Management | ||||

| Proposal Seminar | Proposal Seminar presented | 18/03/11 | 18/03/11 | Completed on schedule |

| Stage 1 Progress Report | S1PR submitted | 01/04/11 | 01/04/11 | Completed on schedule |

| Stage 2 Progress Report | S2PR submitted | 03/06/11 | 03/06/11 | Completed on schedule |

| Final Presentation | Presentation presented with demonstration | 30/09/11 | 30/09/11 | Completed on schedule |

| Final Report | Final Report handed over | 21/10/11 | 21/10/11 | Completed on schedule |

| Project Exhibition and Poster | Poster complete and Exhibition given | 28/10/11 | 28/10/11 | Progress on schedule |

| Project Video | Video complete and uploaded to YouTube | 28/10/11 | 28/10/11 | Progress on schedule |

| Handover | Products and results handed over to EEE School | 28/10/11 | 28/10/11 | Progress on schedule |

Hard work from the beginning of the project saw initial milestones, including identification of ciphers to investigate and the structural and statistical analysis of the Rubaiyat, completed ahead of schedule.

The table indicates that the Cipher Investigation was completed behind schedule. Rather than being a mismanagement or miscalculation of resources and time, this was primarily due to Steven and Patrick deciding to continue identifying and investigating further ciphers than were initially set out in order to make the Cipher Analysis section of the project more comprehensive.

Regarding the Web Crawler development milestones, the Pattern Matcher module shows the milestone was achieved well ahead of schedule. This is accurate; however it should be noted that the initial pattern matching software completed on 15/7/2011 was reviewed and re-designed during the System Integration phase as additional requirements and knowledge about the system were realised.

The extension to the project objectives, the GUI for the web crawler, did run slightly behind schedule. This was mainly due to unexpected complexities encountered in Java multi-threading as identified in the System Integration section of this report. This GUI milestone was still achieved before the demonstration in the Final Seminar.

All project management milestones, including the progress reports and seminars have been met on time with no compromises in quality or any other issues. Progress on achieving all remaining milestones, including the Exhibition, Video and Handover, is on track to meet the schedule set out in the table above.

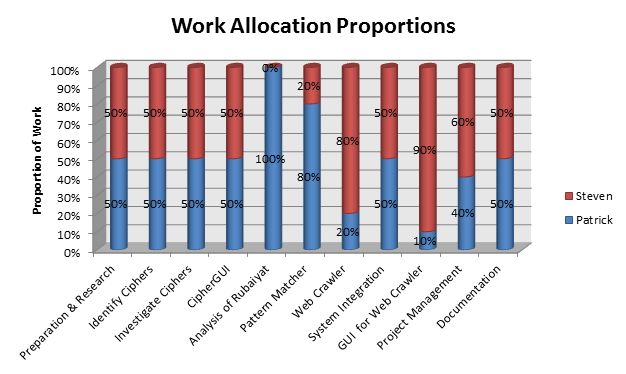

Role Allocation

During the planning phase of the project, a workload allocation plan was specified, recognising that while some areas could be worked on equally, there were distinct advantages in individual team members focusing on specialty areas. A graphical illustration of allocations is shown in the figure below, with reasoning provided underneath.

The review and research of previous work was split equally since each project member needed to have a good understanding of the entire project and its status. The workload in the Cipher Analysis section (including the CipherGUI) was also equally shared. This was due to the compartmentalised nature of the cipher investigation; individual ciphers were all separate investigations so there was no major advantage in specialising.

Recognising the complexity and distinct segments of the web crawler application, the development of the pattern matching and web crawler modules were assigned to Patrick and Steven respectively. The reasoning behind this was Patrick’s previous experience in active file-searching, whilst Steven’s overall greater exposure to University Computer Science courses was expected to give an advantage in the challenges encountered in developing the web crawling module. System integration between these modules was split equally. Steven’s GUI design experience was the motive behind being assigned the development of the Web Crawler System GUI, while the pattern matching experience made it logical for Patrick to take responsibility for the structural and statistical analysis of the Rubaiyat which involved writing statistic gathering software. Project management and documentation were both shared. Overall contribution to the project is estimated at 50% each as can be derived from the graph in Figure 16.

Review and Audit Process

The process of reviewing and auditing progress and revising group member roles was well managed throughout the lifecycle of the project. The strategy used focused on several key areas:

- Regular (internal) meetings and Gantt chart

- Project wiki page

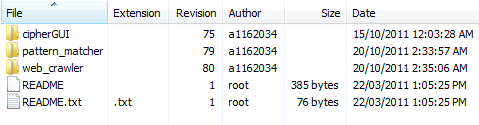

- SVN Repository

- Extensive email Communication

A minimum of one informal meeting per week was held between project members to discuss progress and ensure both Patrick and Steven were satisfied with the current status. These meetings provided a platform to discuss individual challenges encountered and brainstorm solutions to these problems. They also enabled members to query each other’s work to ensure it was at a satisfactory level of quality.

The project wiki page played two main roles. Firstly, the Weekly Progress page was updated each week by both team members. This provided both a self-check mechanism to ensure goals were being achieved and an additional update for the other group member as to their counterpart’s progress. Secondly, shared database areas, such as the Cipher Cross-off List, enabled group members to review each other’s work ensuring it was comprehensive and of high quality.

The svn repository requested from the School of Electrical and Electronic Engineering proved extremely valuable as both an auditing function and a work integration tool. The repository was used extensively throughout the project for both the CipherGUI and the Web Crawler system. The top level of the repository is shown in the image below.

Finally, a high level of communication played a key role in organising and collaborating project work. Communication was both in person and through the University email system including the Gmail chat functionality. Without this high level of communication, it is unlikely the project would have been as successful as it was, nor achieved nearly as much as it did.

It may be noted that in the Work Allocation graph displayed in the previous section, tasks allocated to one project member were not completed 100% by that member. While partially due to the team self-auditing approach resulting in both members working on sections, there was also an aspect of role re-assignment responsible. Role re-assignment was primarily due to differing workloads combining with schedule requirements. Role re-assignment was organised both through email communications channels and at the regular internal group meetings.

Budget

The final costed budget has varied from the budget projected in the Stage 2 Progress Report. Originally the budget included allocation for the printing of two additional posters for the project exhibition at a cost of $30 per poster. After reviewing the decision only one additional poster will be printed. There were no other variations to the projected budget and thus the project will remain well under the $500 allocated by the University of Adelaide.

| Item | Projected cost | Cost |

| Bay Discovery Centre Exhibition | $4.00 | $4.00 |

| Additional printing (Project Exhibition) | $60.00 | $30.00 |

| Total used: | $64.00 | $34.00 |

| Total provided: | $500.00 | $500.00 |

Risk Management

None of the risks identified in the formation stages of the project[10] have had a significant impact on the progress and results. The project has been completed on schedule, on budget and meets the objectives. This, in part, can be attributed to successful implementation of risk management strategies. The project risks and OH&S risks identified are recapped in the tables below.

| Risk | Risk Estimation | Reduction Strategy |

| Availability of personnel | High | Regular meetings with flexible schedule |

| Insufficient financial resources | Medium | Open source software and project budget |

| Software development tool access | High | Suitable personal work environment on laptop for each team member |

| Unable to maintain software development schedule | Medium | Progress management strategy |

| The Somerton Man case is solved | Low | No risk reduction strategy |

| Risk | Risk Estimation | Reduction Strategy | |

| Physical Hazards | |||

| External construction noise | Medium | University of Adelaide OHS policy | |

| Falls within laboratory | Medium | ||

| Injuries due to electrical shock | Medium | ||

| Chemical Hazards | |||

| None | - | - | |

| Biological Hazards | |||

| None | - | - | |

| Ergonomic Hazards | |||

| Work place layout | Low | University of Adelaide workstation ergonomic guidelines | |

| Radiation Hazards | |||

| None | - | - | |

| Psychological Hazards | |||

| Work related stress | Medium | Individually developed and monitored strategy | |

| Repetitive tasks | Medium | ||

Of these risks, the most prevalent were the Personnel Availability and Access to software development tools. Both Steven and Patrick had extremely busy schedules relating to outside work at stages throughout the project however, high levels of communication meant the progress didn’t fall significantly behind schedule at any point.

Access to developmental software was a particular issue given neither Steven nor Patrick had allocated workstations at the University. As the table identifies, personal laptops were used extensively to avoid this issue.

Project Outcomes

Significance and Innovations

This project has been significant, as it has contributed directly to an on-going state investigation. The software and results obtained in 2011 provide a valuable resource for anyone planning to continue the investigation in the future, at the University of Adelaide or externally.

On the cipher analysis side, a substantial contribution has been made to the ongoing investigation of the Somerton Man code. This has also led to the by-product of a pioneering cipher analysis tool; the CipherGUI. This piece of software is innovative in that it merges numerous ciphers into one centralised application while also providing the aid of real-time frequency analysis and interactive keyword functionality. The CipherGUI possesses both educational and cryptanalytical potential.

The development of a freely available pattern matching web crawler application provides a new tool with which to search the web in ways that traditional search engines such as Google do not allow. The flexible pattern matching algorithms, particularly the implementation of a Regular Expression option, combined with the development of an intuitive GUI frontend to the product sets it apart from other publicly available web crawlers.

Strengths and Weaknesses

The Cipher Analysis section of the project has strengths exceeding that of previous attempts. The comprehensive analysis has included a broad range of ciphers (over 30) with each being profoundly investigated. The cipher analysis tool strengths lie in the large number of ciphers (18) it merges into a single educational application, whilst providing analysis features and interactivity.

The primary weaknesses from the Cipher Analysis section revolve around the source material. Assumptions regarding ambiguous letters and the language of the underlying message had to be made. The sample size of the source material (44 letters) also limited the ability to make conclusions.

The only identified weaknesses of the CipherGUI is the ciphers that cannot be (or have not been) implemented. These are mainly number-based or symbol-based ciphers.

The pattern matching web crawler system’s strengths are numerous. It is able to accept a wide range of user-determinable patterns, with flexible Regular Expression capabilities. The OTS solution to the web crawler module of the system has allowed all web crawling requirements to be met, namely abiding by the Robots Exclusion Protocol ethical policy as well as additional features that add usability. It also provides for robust error recovery whilst the open source nature of the crawler also allows modification of the underlying module if required. The intuitive GUI design ensures a broad range of users are able to access the tool.

Weaknesses of the system from a pattern matching perspective are the omission of certain special cases. The OTS choice for the web crawler module introduces the weakness of a lack of understanding of some of the internal workings of the code, hindering development. The dynamic nature of the web crawler implies there is no capability for data re-examination, also meaning searches are download intensive and more suited to rare or once-off searches.

Conclusion

The Cipher Cracking 2011 project has been a successful venture. All objectives have been met and surpassed with the addition of new and innovative extensions. The products of the project are fully functional, produced on budget and on schedule, meeting all specified requirements.

The Cipher Analysis section of the project has been both comprehensive and informative, providing a significant contribution to the ongoing investigation into the Somerton Man case and code. The product from this section is a Cipher Cross-off List; a database of ciphers and reasoning as to whether they can be ruled out from being used in creating the Somerton code.

A by-product of the Cipher Analysis section is the CipherGUI, an innovative software tool merging numerous ciphers into one easy-to-use interface that also provides keyword interactivity and analysis tools. Applications of this software are both educational and cryptanalytic.

The Web Analysis section of the project has produced a valuable web search tool capable of independently browsing the web in search of flexible patterns defined by the user. Pattern matching functions include the implementation of the powerful Regular Expression definition of String patterns and an intuitive graphical user interface has been designed for the system to ensure a wide range of users are able to take advantage of this product.

It is expected this web crawler will be extremely useful in searching for distinct patterns evident in the Somerton Man code, however the flexible pattern matching algorithms ensure it has uses outside this scope, for example in web statistic gathering and market research.

The software products of this project, namely the web crawler and the CipherGUI, have been made freely available on the project’s website. Project team members hope they can be put to continued good use, making the most of the many hours that have been put into creating them.

These software products will be made available in the See Also section of this report from 31/10/2011.

References

- ↑ 1.0 1.1 1.2 Tamam Shud Case, Wikipedia Foundation Inc, http://en.wikipedia.org/wiki/Taman_Shud_Case

- ↑ 2.0 2.1 Inside Story, presented by Stuart Littlemore, ABC TV, 1978.

- ↑ 3.0 3.1 3.2 Final Report 2009, Bihari, Denley and Turnbull, Andrew, 2009, https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_report_2009:_Who_killed_the_Somerton_man%3F

- ↑ 4.0 4.1 4.2 Final Report 2010, Ramirez, Kevin and Lewis-Vassallo, Michael, 2010, https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Final_Report_2010

- ↑ 5.0 5.1 5.2 5.3 The Code Book, author Simon Singh, The Fourth Estate, 2000.

- ↑ Lewand, Robert, English Letter Frequencies, http://pages.central.edu/emp/LintonT/classes/spring01/cryptography/letterfreq.html

- ↑ 7.0 7.1 Rodham, Ken, Web Crawler, Brigham Young University Computer Science, http://faculty.cs.byu.edu/~rodham/cs240/crawler/index.html

- ↑ Regular Expression, Wikipedia Foundation Inc, http://en.wikipedia.org/wiki/Regular_expression

- ↑ Yanchuk, Katerina, My Love is a Butterfly, http://www.poemhunter.com/best-poems/katerina-yanchuk/my-love-is-a-butterfly/

- ↑ Johnson, Patrick and Maxwell, Steven, Stage 1 Design Document 2011, https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Stage_1_Design_Document_2011

See also

- Glossary

- Cipher Cross-off List

- Stage 1 Design Document 2011

- Stage 2 Progress Report 2011

- Final Seminar Recording 2011 Part 1

- Final Seminar Recording 2011 Part 2

- Final Seminar Recording 2011 Part 3

References and useful resources

- Final Report 2010

- Final report 2009: Who killed the Somerton man?

- Timeline of the Taman Shud Case

- The taman shud case

- Edward Fitzgerald's translation of رباعیات عمر خیام by عمر خیام

- Adelaide Uni Library e-book collection

- Project Gutenburg e-books

- Foreign language e-books

- UN Declaration of Human Rights - different languages

- Statistical debunking of the 'Bible code'

- One time pads

- Analysis of criminal codes and ciphers

- Code breaking in law enforcement: A 400-year history

- Evolutionary algorithm for decryption of monoalphabetic homophonic substitution ciphers encoded as constraint satisfaction problems